| ID | Date | Author | Category | Subject |

| 268 | Tue May 19 13:43:55 2020 | Andrey Starodumov | Module transfer | 9 modules shipped to PSI |

Quick check: leakage current, setting of Vana and VthrCompCalDel, and PixelAlive

Module Current@-150V Programmable Readout

M1623 -0.335uA OK OK

M1630 -0.430uA OK OK

M1632 -0.854uA OK OK

M1634 -0.243uA OK OK

M1636 -0.962uA OK OK

M1637 -0.452uA OK OK

M1638 -0.440uA OK OK

M1639 -0.760uA OK OK

M1640 -0.354uA OK OK |

| 267 | Mon May 18 14:05:01 2020 | Andrey Starodumov | Module transfer | 8 modules shipped to ETHZ |

M1555, M1556, M1557,

M1558, M1559, M1560,

M1561, M1564 |

| 266 | Fri May 15 17:15:34 2020 | Andrey Starodumov | XRay HR tests | Analysis of HRT: M1630, M1632, M1636, M1638 |

Krunal provided the test results of four modules and Dinko analysed them.

M1630: Grade A, VCal calibration: Slope=43.5e-/Vcal, Offset=-145.4e-

M1632: Grade A, VCal calibration: Slope=45.4e-/Vcal, Offset=-290.8e-

M1636: Grade A, VCal calibration: Slope=45.9e-/Vcal, Offset=-255.2e-

M1638: Grade A, VCal calibration: Slope=43.3e-/Vcal, Offset=-183.1e-
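The slope and offset above define a linear VCal-to-charge conversion. A minimal Python sketch, assuming the calibration has the form Q = Slope * VCal + Offset (the function and dictionary names are hypothetical, not MoReWeb code), shows what the VCal 50 trimming target corresponds to for each module, next to the offset-free factor of 44 e-/VCal that StandardVcal2ElectronConversionFactor was changed to (entry 253):

```python
# Slope (e-/VCal) and offset (e-) from the X-ray analysis above.
CALIBRATION = {
    "M1630": (43.5, -145.4),
    "M1632": (45.4, -290.8),
    "M1636": (45.9, -255.2),
    "M1638": (43.3, -183.1),
}

# Offset-free StandardVcal2ElectronConversionFactor value from entry 253.
DEFAULT_FACTOR = 44.0


def vcal_to_electrons(vcal, slope, offset=0.0):
    """Charge in electrons for a VCal value, assuming Q = slope * VCal + offset."""
    return slope * vcal + offset


if __name__ == "__main__":
    target = 50  # trimming target in VCal units
    for module, (slope, offset) in CALIBRATION.items():
        charge = vcal_to_electrons(target, slope, offset)
        print(f"{module}: VCal {target} -> {charge:.1f} e-")
    default_charge = vcal_to_electrons(target, DEFAULT_FACTOR)
    print(f"default: VCal {target} -> {default_charge:.1f} e-")
```

With these inputs the four modules give roughly 1980 to 2040 e- at VCal 50, compared with 2200 e- from the offset-free factor.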

A few comments:

1) Rates. One should distinguish between the X-ray rate and the hit rate seen/measured by a ROC (as Maren correctly mentioned).

The X-ray rate vs. tube current has been calibrated, and the histogram titles roughly reflect the X-ray rate. One can notice that

the number of hits per pixel, again roughly, scales with the X-ray rate (given in the histogram title).

2) M1638 ROC7 and ROC10 show new pixel failures that were not observed in the cold box tests. In this case it is not critical, since only

65/25 pixels are unresponsive, already at the lowest rate. But we may have cases with more unresponsive pixels.

3) M1638 ROC0: the number of defects in the cold box test is 3, but in the X-ray summary table it is only 1. At the same time, on the ROC0

summary page one can see 3 unresponsive pixels in all Efficiency Maps and even in the Hit Maps. We should check in MoreWeb why this is so.

4) It is not critical, but it would be good to understand why the "col uniformity ratio" histogram is not filled properly. This check has been introduced

to identify cases where the performance of a column degrades with hit rate.

5) PROCV4 is less noisy than PROCV2, but I nevertheless think we should introduce a proper cut on the pixel noise value and activate grading on

the total number of noisy pixels in a ROC (in MoreWeb). For a given threshold and acceptable noise rate, one can calculate the noise level

above which a pixel should be counted as defective. |
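The calculation suggested in point 5 can be sketched as follows, assuming the pixel noise is Gaussian and the acceptable noise rate is expressed as the probability, per readout window, that noise alone crosses the threshold. The function names, the 1e-6 probability and the use of 44 e-/VCal without an offset are illustrative assumptions, not agreed grading parameters.

```python
import math
from statistics import NormalDist


def max_allowed_noise(threshold_e, max_noise_prob):
    """Largest Gaussian noise sigma (electrons) for which noise alone crosses
    the threshold with probability <= max_noise_prob per readout window.

    One-sided tail: P(noise > T) = 1 - Phi(T / sigma), so T / sigma = Phi^-1(1 - p).
    """
    z = NormalDist().inv_cdf(1.0 - max_noise_prob)
    return threshold_e / z


def noise_hit_probability(threshold_e, sigma_e):
    """Probability that Gaussian noise of width sigma_e exceeds threshold_e."""
    return 0.5 * math.erfc(threshold_e / (sigma_e * math.sqrt(2.0)))


def count_noisy_pixels(noise_per_pixel, sigma_cut):
    """Number of pixels in a ROC whose measured noise is above the cut."""
    return sum(1 for sigma in noise_per_pixel if sigma > sigma_cut)


if __name__ == "__main__":
    threshold = 50 * 44.0  # VCal 50 trim target x 44 e-/VCal, offset ignored
    p_max = 1e-6           # hypothetical acceptable noise-hit probability
    cut = max_allowed_noise(threshold, p_max)
    print(f"pixels with noise above {cut:.0f} e- would count as noisy")
```

The per-ROC count from `count_noisy_pixels` is the quantity that could then be graded on in MoreWeb.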

| 265 | Wed May 13 23:16:37 2020 | Dinko Ferencek | Software | Fixed double-counting of pixel defects in the production overview page |

| Dinko Ferencek wrote: | | As a follow-up to this elog, double-counting of pixel defects in the production overview page was fixed in 3a2c6772. |

A few extra adjustments were made in:

38eaa5d6: also removed double-counting of pixel defects in module maps in the production overview page

51aadbd7: adjusted the trimmed threshold selection to the L1 replacement conditions |

| 264 | Wed May 13 17:57:45 2020 | Andrey Starodumov | Other | L1_DATA backup |

| L1_DATA files are backed up to the LaCie disk |

| 263 | Tue May 12 13:29:27 2020 | Andrey Starodumov | Full test | FT of M1539, M1582, M1606 |

M1539: Grade B due to mean noise >200e in a few ROCs

M1582: Grade B due to mean noise >200e in a few ROCs; in addition, at -20C 137 pixels in ROC1 failed trimming. For P5 we could use the older test results (trim parameters) of April 27 (M20_1), when only 23 pixels in ROC1 failed trimming.

M1606: Grade C due to 161 pixels failing trimming in ROC2, with a total of 169 defects in this ROC. For P5 we could use the older test results (trim parameters) of March 19 (M20_2), when only 36 pixels in ROC2 failed trimming, or of April 6, when 40 pixels failed (this test has the best performance at all temperatures). |

| 262 | Mon May 11 21:41:15 2020 | Dinko Ferencek | Software | 17 to 10 C changes in the production overview page |

| 0c513ab8: a few more updates on the main production overview page related to the 17 to 10 C change |

| 261 | Mon May 11 21:37:43 2020 | Dinko Ferencek | Software | Fixed the BB defects plots in the production overview page |

0407e04c: attempting to fix the BB defects plots in the production overview page (seems mostly related to the 17 to 10 C change)

f2d554c5: it appears that BB2 defect maps were not processed correctly |

| 260 | Mon May 11 21:32:20 2020 | Dinko Ferencek | Software | Fixed double-counting of pixel defects in the production overview page |

| As a follow-up to this elog, double-counting of pixel defects in the production overview page was fixed in 3a2c6772. |

| 259 | Mon May 11 14:40:16 2020 | danek kotlinski | Other | M1582 |

On Friday I tested module M1582 at room temperature in the blue box.

The report in MoreWeb says that this module has trimming problems for 190 pixels in ROC1.

I see no problem in ROC1. The average threshold is 50 with rms=1.37. Only 1 pixel is in the 0 bin.

See the attached 1d and 2d plots.
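Summary numbers like those quoted above (mean, RMS and the number of pixels in the 0 bin of the trimmed-threshold distribution) can be reproduced from a per-pixel threshold list with a short Python sketch. The function name and the toy input are hypothetical, and excluding the 0-bin pixels from the mean and RMS is an assumption; the elog does not say how the quoted numbers were computed.

```python
from statistics import fmean, pstdev


def threshold_summary(thresholds, failed_value=0.0):
    """Summarise a ROC's trimmed-threshold distribution (VCal units).

    Pixels whose threshold equals `failed_value` (the '0 bin' in the plots)
    are counted separately and excluded from the mean and RMS.
    """
    good = [t for t in thresholds if t != failed_value]
    n_failed = len(thresholds) - len(good)
    # ROOT's histogram "RMS" is the standard deviation of the entries with
    # 1/N normalisation, hence pstdev rather than stdev.
    return fmean(good), pstdev(good), n_failed


if __name__ == "__main__":
    toy = [49.0, 50.0, 51.0, 50.0, 0.0]  # toy input, not real M1582 data
    mean, rms, n_failed = threshold_summary(toy)
    print(f"mean={mean:.2f} rms={rms:.2f} pixels in 0 bin: {n_failed}")
```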

Also the PH looks good. The vcal 70 PH map is reconstructed at vcal 70.3 with rms of 3.9.

5159 pixels have valid gain calibrations.

I conclude that this module is fine.

Maybe it is again a DTB problem, as reported by Andrey.

D. |

| 258 | Mon May 11 14:14:05 2020 | danek kotlinski | Other | M1606 |

On Friday I tested M1606 at room temperature in the red cold box.

Previously it was reported that trimming does not work for ROC2.

In this test trimming was fine; only 11 pixels failed.

See the attached 1D and 2D histograms. There is a small side peak at about vcal=56 with ~100 pixels.

But this should not be too big a problem?

Also the Pulse height map looks good and the reconstructed pulse height at vcal=70

gives vcal=68.1 with rms=4.2, see the attached plot.

So I conclude that this module is fine. |

| 257 | Mon May 11 13:19:51 2020 | Andrey Starodumov | Cold box tests | M1539 |

After several attempts, including reconnecting the cable, M1539 still had no readout when connected to TB3. When connected to TB1, M1539 did not show any problem. M1606 worked properly with both TB1 and TB3.

For the FT, the configuration is as follows:

TB1: M1539

TB3: M1606 |

| 256 | Thu May 7 01:51:03 2020 | Dinko Ferencek | Software | Strange bug/feature affecting Pixel Defects info in the Production Overview page |

It was observed that the Pixel Defects info in the Production Overview page is sometimes missing.

It turned out that this was happening for modules for which the MoReWeb analysis was run more than once. The solution is to remove all info for the affected modules from the database by running

`python Controller.py -d`

typing in the module ID (e.g. M1668), then, when prompted, typing 'all', pressing ENTER and confirming that you want to delete all entries. After that, run

`python Controller.py -m M1668`

followed by

`python Controller.py -p`

The missing info should now be visible. |
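For several affected modules, the last two steps can be scripted. A minimal sketch, assuming it is run with Python 3 from the MoReWeb directory that contains Controller.py; the deletion step (`-d`) stays manual because it is interactive, and the function names are hypothetical:

```python
import subprocess


def rebuild_commands(module_ids):
    """Commands to re-run after the stale database entries of `module_ids`
    were removed by hand with the interactive `python Controller.py -d`."""
    commands = [["python", "Controller.py", "-m", module_id] for module_id in module_ids]
    # -p takes no module argument, so it is run once after all modules.
    commands.append(["python", "Controller.py", "-p"])
    return commands


def rebuild(module_ids, moreweb_dir="."):
    """Run the re-analysis and overview steps in order."""
    for cmd in rebuild_commands(module_ids):
        # check=True stops at the first failing step instead of regenerating
        # the overview from an incomplete analysis.
        subprocess.run(cmd, cwd=moreweb_dir, check=True)
```

Usage, after deleting the entries by hand: `rebuild(["M1668"])`. Running `-p` only once at the end assumes it acts on the whole production rather than on a single module, which is how it appears in the commands above.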

|

255

|

Thu May 7 00:56:50 2020 |

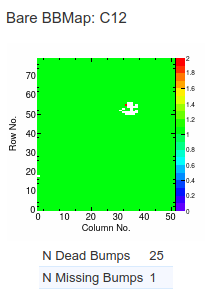

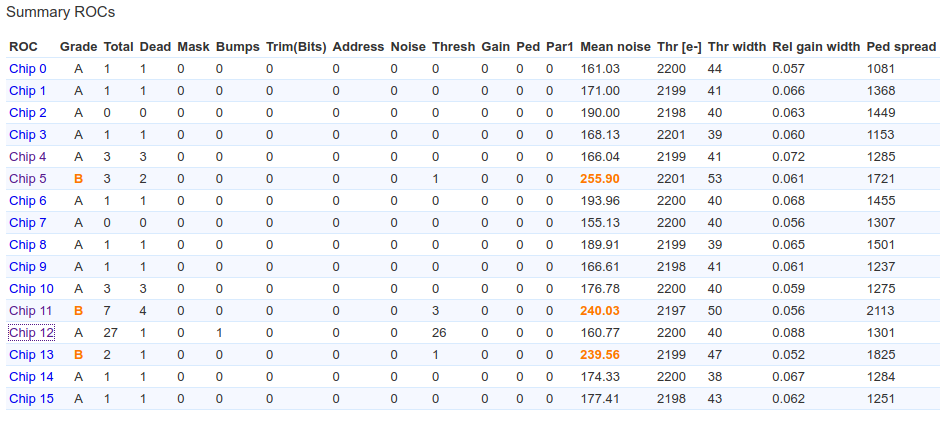

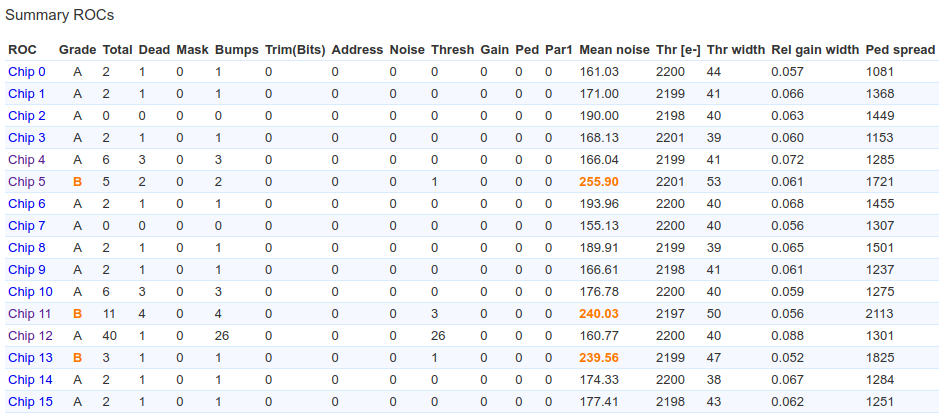

Dinko Ferencek | Software | MoReWeb updates related to the BB2 test |

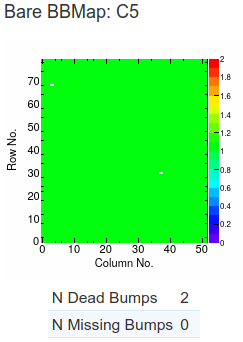

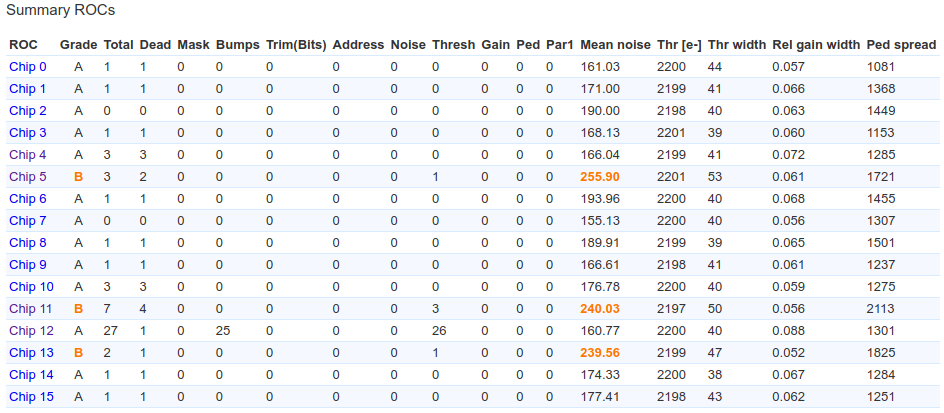

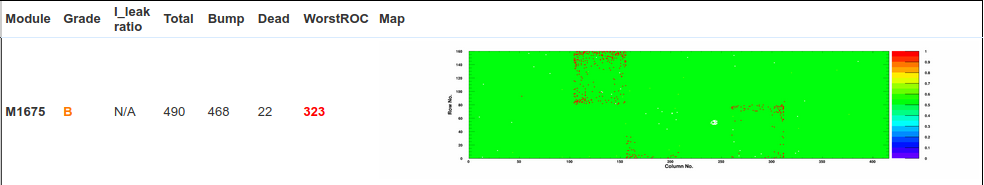

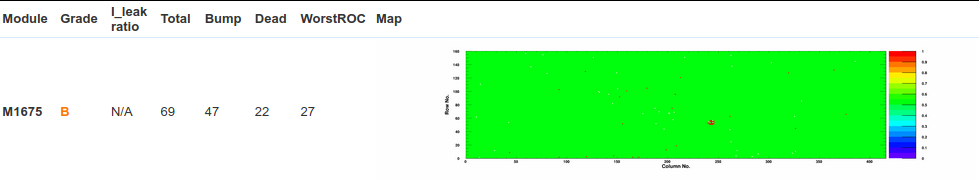

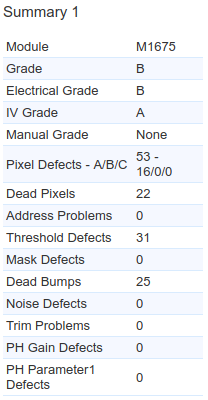

Andrey noticed that the results of the BB2 test (here an example for ROC 12 in M1675)

were not properly propagated to the ROC Summary.

This was fixed in d9a1258a. However, looking at the summary for ROC 5 in the same module after the fix,

it became apparent that dead pixels were double-counted under the dead bumps even though they were supposed to be subtracted here. From the following debugging printout

Chip 5 Pixel Defects Grade A

total: 5

dead: 2

inef: 0

mask: 0

addr: 0

bump: 2

trim: 1

tbit: 0

nois: 0

gain: 0

par1: 0

total: set([(5, 4, 69), (5, 3, 68), (5, 37, 30), (5, 38, 31), (5, 4, 6)])

dead: set([(5, 37, 30), (5, 3, 68)])

inef: set([])

mask: set([])

addr: set([])

bump: set([(5, 4, 69), (5, 38, 31)])

trim: set([(5, 4, 6)])

tbit: set([])

nois: set([])

gain: set([])

par1: set([])

it became apparent that the column and row addresses for pixels with bump defects were shifted by one. This was fixed in 415eae00.
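The printout shows why the subtraction did not work: each bump-defect address is exactly one column and one row above a dead-pixel address ((5, 4, 69) vs (5, 3, 68), and (5, 38, 31) vs (5, 37, 30)), so removing dead pixels from the bump list found no overlap and the total came out as 5 instead of 3. A minimal sketch reproducing this with the sets from the printout, assuming the tuples are (ROC, column, row) and that the intended logic is to subtract dead pixels from the bump list (the function names are hypothetical, not MoReWeb code):

```python
# Pixel defects for chip 5 as printed above, as (roc, col, row) tuples.
dead = {(5, 37, 30), (5, 3, 68)}
bump_printed = {(5, 4, 69), (5, 38, 31)}
trim = {(5, 4, 6)}


def fix_offset(pixels, dcol=-1, drow=-1):
    """Undo the off-by-one shift in the column and row addresses."""
    return {(roc, col + dcol, row + drow) for roc, col, row in pixels}


def unique_defects(dead, bump, trim):
    """All defective pixels, with dead pixels removed from the bump list so
    that a dead pixel is not counted a second time as a dead bump."""
    bump_only = bump - dead
    return dead | bump_only | trim


before = unique_defects(dead, bump_printed, trim)         # shifted addresses
after = unique_defects(dead, fix_offset(bump_printed), trim)
print(len(before), len(after))
```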

However, there was still a problem with the pixel defects info in the production overview page, which was still using the BB test results.

After switching to the BB2 test results in ac9e8844, the pixel defects info looked better,

but it was still not completely in sync with the info presented in the FullQualification Summary 1.

This is due to double-counting of dead pixels, which still needs to be fixed for the Production Overview. |

| 254 | Thu May 7 00:27:41 2020 | Dinko Ferencek | Module grading | Comment about TrimBitDifference and its impact on the Trim Bit Test |

To expand on the following elog, on Mar. 24 Andrey changed the TrimBitDifference parameter in Analyse/Configuration/GradingParameters.cfg from 2 to -2

`$ diff Analyse/Configuration/GradingParameters.cfg.default Analyse/Configuration/GradingParameters.cfg`

`45c45`

`< TrimBitDifference = 2.`

`---`

`> TrimBitDifference = -2.`

From the way this parameter is used here, one can see from this line that setting the TrimBitDifference to any negative value effectively turns off the test.

More details about problems with the Trim Bit Test can be found in this elog. |
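The link to the relevant MoReWeb line is not preserved here, so the following is only a hypothetical illustration, not MoReWeb's actual logic. If a parameter like TrimBitDifference is used as a lower bound on a non-negative quantity (here assumed to be the absolute threshold shift caused by toggling a trim bit), a negative bound can never be undercut, so no pixel is ever flagged:

```python
def trim_bit_failed(thr_bit_on, thr_bit_off, trim_bit_difference):
    """HYPOTHETICAL trim-bit check (not MoReWeb's code): flag the bit as failed
    when toggling it moves the threshold by less than `trim_bit_difference`."""
    shift = abs(thr_bit_on - thr_bit_off)  # always >= 0
    return shift < trim_bit_difference


if __name__ == "__main__":
    for cut in (2.0, -2.0):
        flagged = [trim_bit_failed(50.0, 50.0 + d, cut) for d in (0.0, 1.0, 5.0)]
        print(cut, flagged)
```

With the default 2 the first two toy pixels are flagged; with -2 none are, whatever the measured shift.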

| 253 | Thu May 7 00:10:15 2020 | Dinko Ferencek | Software | MoReWeb empty DAC plots |

| Andrey Starodumov wrote: |

| Matej Roguljic wrote: | Some of the DAC parameters plots were empty in the total production overview page. All the empty plots had the number "35" in them (e.g. DAC distribution m20_1 vana 35). The problem was tracked down to the trimming configuration. Moreweb was expecting us to trim to Vcal 35, while we decided to trim to Vcal 50. I "grepped" where this was hardcoded and changed 35->50.

The places where I made changes:

- Analyse/AbstractClasses/TestResultEnvironment.py

'trimThr':35

- Analyse/Configuration/GradingParameters.cfg.default

trimThr = 35

- Analyse/OverviewClasses/CMSPixel/ProductionOverview/ProductionOverviewPage/ProductionOverviewPage.py

TrimThresholds = ['', '35']

- Analyse/OverviewClasses/CMSPixel/ProductionOverview/ProductionOverviewPage/ProductionOverviewPage.py

self.SubPages.append({"InitialAttributes" : {"Anchor": "DACDSpread35", "Title": "DAC parameter spread per module - 35"}, "Key": "Section","Module": "Section"})

It's interesting to note that someone had already made the change in "Analyse/Configuration/GradingParameters.cfg" |

I have changed

1) StandardVcal2ElectronConversionFactor from 50 to 44, since the VCal calibration of PROC600V4 is 44 el/VCal

2) TrimBitDifference from 2 to -2, so as not to take into account failed trim bit tests, which are an artifact of the trim bit test SW. |

1) is committed in 74b1038e.

2) was made on Mar. 24 (for more details, see this elog); it is currently left in Analyse/Configuration/GradingParameters.cfg and might be committed in the future, depending on what is decided about the use of the Trim Bit Test in module grading:

`$ diff Analyse/Configuration/GradingParameters.cfg.default Analyse/Configuration/GradingParameters.cfg`

`45c45`

`< TrimBitDifference = 2.`

`---`

`> TrimBitDifference = -2.`

There were a few other code updates related to a change of the warm test temperature from 17 to 10 C. Those were committed in 3a98fef8. |
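The "grep" for the hardcoded trim threshold described in the quoted message can be sketched in Python so that it reports the value only as a standalone number. This is a minimal sketch: the file list is taken from the message, the function names are hypothetical, and it assumes it is run from the top of the MoReWeb checkout.

```python
import re
from pathlib import Path

# Files named in the quoted message, relative to the MoReWeb checkout.
FILES = [
    "Analyse/AbstractClasses/TestResultEnvironment.py",
    "Analyse/Configuration/GradingParameters.cfg",
    "Analyse/Configuration/GradingParameters.cfg.default",
    "Analyse/OverviewClasses/CMSPixel/ProductionOverview/ProductionOverviewPage/ProductionOverviewPage.py",
]


def value_pattern(value):
    """Match `value` only as a standalone number: not preceded by a digit or a
    decimal point and not followed by a digit ('135', '0.35', '350' are skipped)."""
    return re.compile(r"(?<![\d.])" + re.escape(value) + r"(?!\d)")


def find_value(root, relpaths, value):
    """Return (file, line number, line) for each line mentioning `value`."""
    pattern = value_pattern(value)
    hits = []
    for rel in relpaths:
        path = Path(root) / rel
        if not path.is_file():
            continue  # skip files missing from this checkout
        with path.open(encoding="utf-8", errors="replace") as handle:
            for lineno, line in enumerate(handle, start=1):
                if pattern.search(line):
                    hits.append((rel, lineno, line.rstrip()))
    return hits


if __name__ == "__main__":
    for rel, lineno, line in find_value(".", FILES, "35"):
        print(f"{rel}:{lineno}: {line}")
```

Matching only standalone numbers keeps unrelated values such as 135 or 0.35 out of the report, at the cost of also reporting e.g. 35.5.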

| 252 | Wed May 6 16:24:21 2020 | Andrey Starodumov | Full test | FT of M1580, M1595, M1606, M1659 |

M1580: Grade B due to mean noise >200e in ROC5/8 and trimming failures for 100+ pixels in the same ROCs at +10C; the previous result of April 27 was better

M1595: Grade B due to mean noise >200e in a few ROCs; the previous result of April 30 was much worse, with 80/90 pixels failing trimming in ROC0 and ROC15

M1606: Grade C due to 192 pixels failing trimming in ROC2 at +10C; the previous result of April 6 was much better, with grade B

M1659: Grade B due to mean noise >200e in a few ROCs; the previous result of April 7 was almost the same

M1606 moved to tray C* for further investigation |

|

251

|

Wed May 6 13:20:28 2020 |

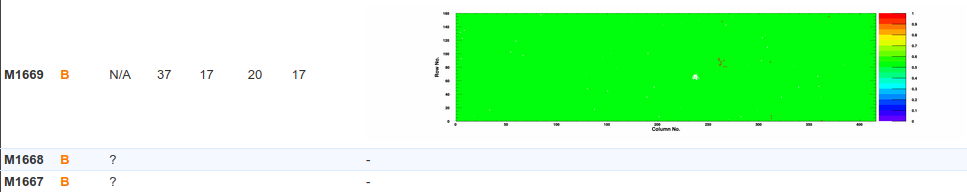

Andrey Starodumov | Full test | FT of M1574, M1581, M1660, M1668 |

Modules tested on May 5th

M1574: Grade B due to mean noise >200e in ROC10 and trimming failures for 89 pixels in ROC0, similar to the first test on April 24 (when 104 pixels failed)

M1581: Grade B due to mean noise >200e in ROC8/13; no trimming failures in ROC8/13, unlike on April 27 (when 120+ pixels in ROC8/13 failed) -> Results improved!

M1660: Grade B due to mean noise >200e in a few ROCs; the trimming failure of 172 pixels in ROC7 seen on April 7 is gone -> Results improved!

M1668: Grade B due to mean noise >200e in a few ROCs; results are worse than on April 14: one more ROC with mean noise >200e

Summary: for 2 modules the results improved, for the other 2 they are almost the same |

| 250 | Tue May 5 13:58:45 2020 | Andrey Starodumov | Full test | FT of M1582, M1649, M1667 |

M1582: Grade C due to trimming failure for 189 pixels in ROC1 at +10C. This is the third time the module has been tested:

1) February 26 (trimming for VCal 40 and old PH optimization): Grade B, max 29 failed pixels and high mean noise in a few ROCs

2) April 27: Grade C due to trimming failure for 167 pixels in ROC1 at +10C; at -20C still max 45 failed pixels and high mean noise in a few ROCs

3) May 5: Grade C due to trimming failure for 189 pixels in ROC1 at +10C; at -20C trimming failure for 157 pixels in ROC1

The module quality is getting worse.

M1649: Grade B due to mean noise >200e in ROC11

M1667: Grade B due to mean noise >200e in a few ROCs

M1582 is in the C* tray, to be investigated. |

| 249 | Mon May 4 15:28:14 2020 | Andrey Starodumov | General | M1660 |

M1660 was taken from the gel-pak and cabled for a retest.

This module was graded C only in the second FT at -20C; the first FT at -20C and the FT at +10C gave grade B. The massive trimming failure of pixels in ROC7 was not observed in those two tests.

The module will be retested. |