| ID |

Date |

Author |

Category |

Subject |

|

266

|

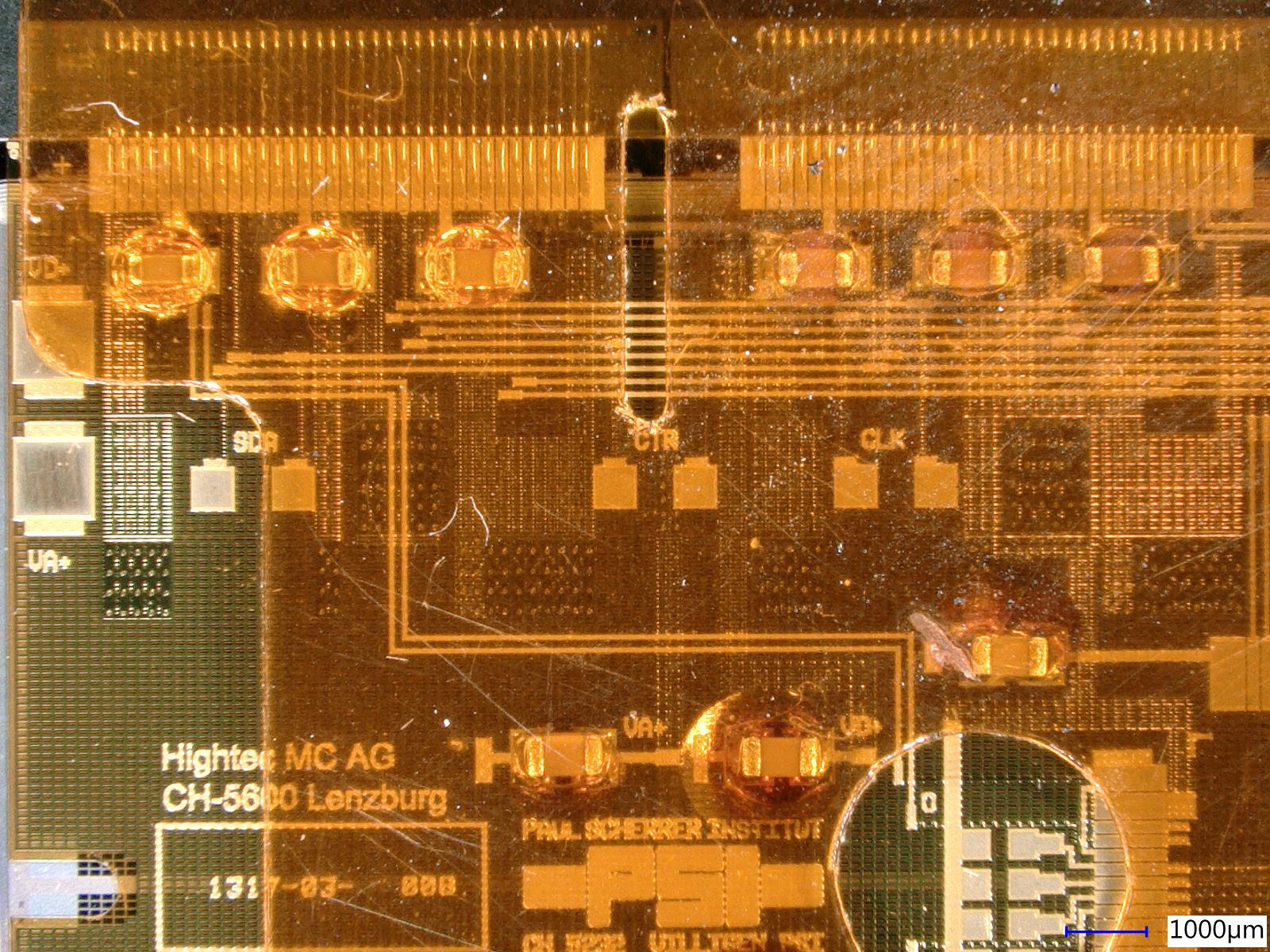

Fri May 15 17:15:34 2020 |

Andrey Starodumov | XRay HR tests | Analysis of HRT: M1630, M1632, M1636, M1638 |

Krunal provided the test results for four modules and Dinko analysed them.

M1630: Grade A, VCal calibration: Slope=43.5e-/Vcal, Offset=-145.4e-

M1632: Grade A, VCal calibration: Slope=45.4e-/Vcal, Offset=-290.8e-

M1636: Grade A, VCal calibration: Slope=45.9e-/Vcal, Offset=-255.2e-

M1638: Grade A, VCal calibration: Slope=43.3e-/Vcal, Offset=-183.1e-
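Each calibration above is linear: Q [e-] = Slope × VCal + Offset. The sketch below converts between VCal units and electrons using the four fits just quoted. The dictionary layout and the function names are illustrative only and are not taken from MoReWeb.

```python
# Linear VCal calibration from the list above: Q [e-] = slope * VCal + offset
CALIBRATION = {
    "M1630": (43.5, -145.4),
    "M1632": (45.4, -290.8),
    "M1636": (45.9, -255.2),
    "M1638": (43.3, -183.1),
}


def vcal_to_electrons(module: str, vcal: float) -> float:
    """Convert a VCal DAC value into charge (electrons) for one module."""
    slope, offset = CALIBRATION[module]
    return slope * vcal + offset


def electrons_to_vcal(module: str, charge: float) -> float:
    """Inverse conversion: charge in electrons to VCal DAC units."""
    slope, offset = CALIBRATION[module]
    return (charge - offset) / slope


if __name__ == "__main__":
    for name in CALIBRATION:
        print(f"{name}: VCal 50 = {vcal_to_electrons(name, 50):.0f} e-")
```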

A few comments:

1) Rates. One should distinguish between the X-ray rate and the hit rate seen/measured by a ROC (as Maren correctly pointed out).

The X-ray rate vs. tube current has been calibrated, and the histogram titles roughly reflect the X-ray rate. One can see that the number of hits per pixel, again roughly, scales with the X-ray rate (given in the histogram title).

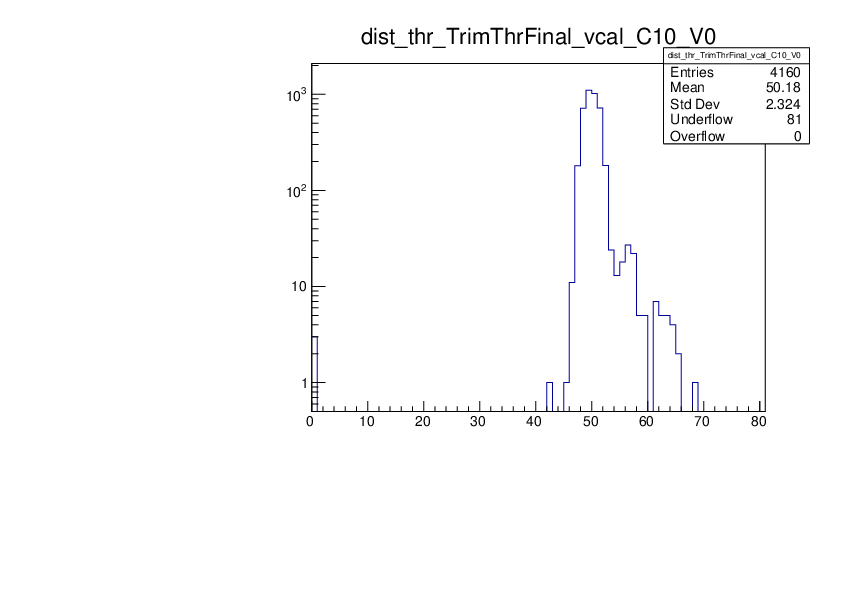

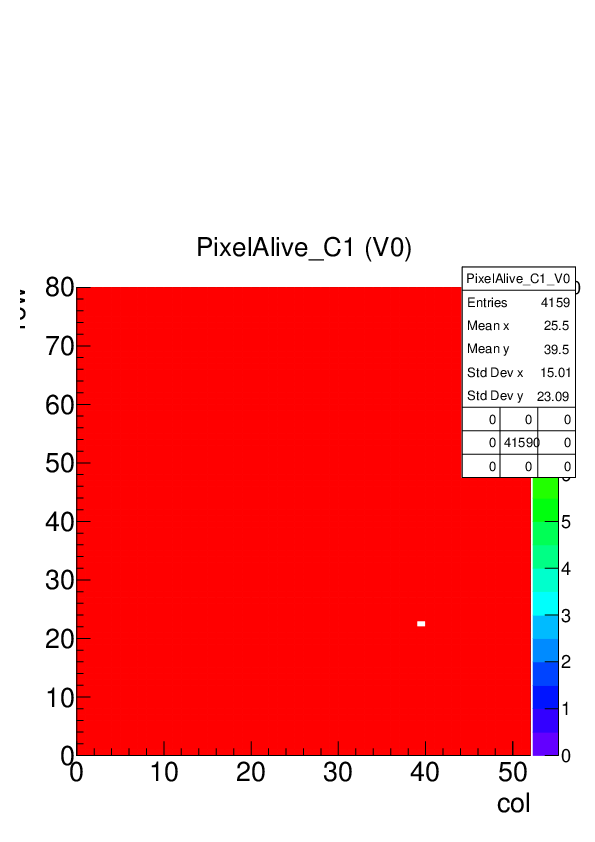

2) M1638 ROC7 and ROC10 show new pixel failures that were not observed in the cold box tests. In this case it is not critical, since only 65/25 pixels are unresponsive already at the lowest rate, but we may have cases with more unresponsive pixels.

3) M1638 ROC0: the number of defects in the cold box test is 3, but with X-rays the summary table shows only 1. At the same time, if one looks at the ROC0 summary page, all Efficiency Maps and even the Hit Maps show 3 unresponsive pixels. We should check in MoReWeb why this is so.

4) It is not critical, but it would be good to understand why the "col uniformity ratio" histogram is not filled properly. This check was introduced to identify cases where the performance of a column degrades with the hit rate.

5) PROCV4 is not so noisy as PROCV2, but nevertheless I think we should introduce a proper cut on a pixel noise value and activate grading on

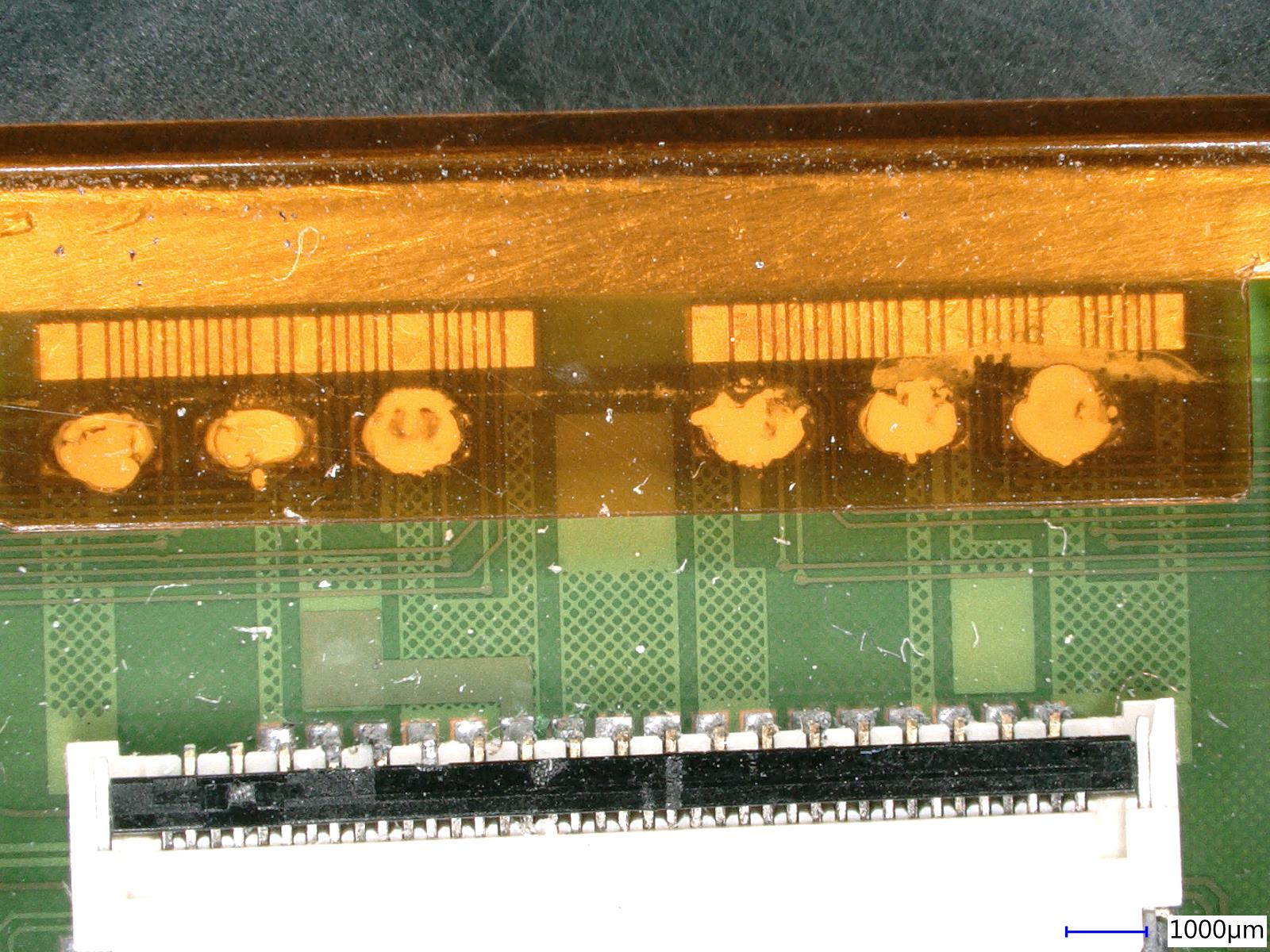

the total number of noisy pixels in a ROC (in MoreWeb). For a given threshold and acceptable noise rate one can calculate, pixels with noise

above which level should be counted as defective. |
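The calculation in point 5 can be sketched under a few stated assumptions:

- Pixel noise is Gaussian with width σ (in electrons).
- The threshold T is expressed in electrons.
- The bunch-crossing clock runs at 40 MHz, so one crossing lasts 25 ns.

The probability that noise alone fires a pixel in one crossing is then P = ½ erfc(T / (√2 σ)). Requiring P ≤ (acceptable noise rate × 25 ns) gives the largest tolerable σ. The threshold and rate in the example are placeholders, not agreed values, and the function names are hypothetical.

```python
import math
from statistics import NormalDist

BX_PERIOD_S = 25e-9  # assumption: 40 MHz bunch-crossing clock


def max_noise_electrons(threshold_e: float, noise_rate_hz: float) -> float:
    """Largest Gaussian noise width (e-) that keeps the per-pixel noise-hit
    rate at or below noise_rate_hz for a threshold of threshold_e electrons."""
    p_per_bx = noise_rate_hz * BX_PERIOD_S      # allowed probability per crossing
    z = NormalDist().inv_cdf(1.0 - p_per_bx)    # threshold in units of sigma
    return threshold_e / z


def noise_hit_probability(threshold_e: float, sigma_e: float) -> float:
    """Probability that Gaussian noise alone exceeds the threshold in one crossing."""
    return 0.5 * math.erfc(threshold_e / (math.sqrt(2.0) * sigma_e))


if __name__ == "__main__":
    # Placeholder inputs: 1500 e- threshold, 1 Hz acceptable noise rate per pixel
    sigma_max = max_noise_electrons(1500.0, 1.0)
    print(f"pixels with noise above {sigma_max:.0f} e- would count as noisy")
```

The threshold in electrons can be obtained from the VCal calibration of each module.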

|

269

|

Fri May 22 16:06:43 2020 |

Andrey Starodumov | XRay HR tests | Analysis of HRT: M1623, M1632, M1634, M1636-M1639,M1640 |

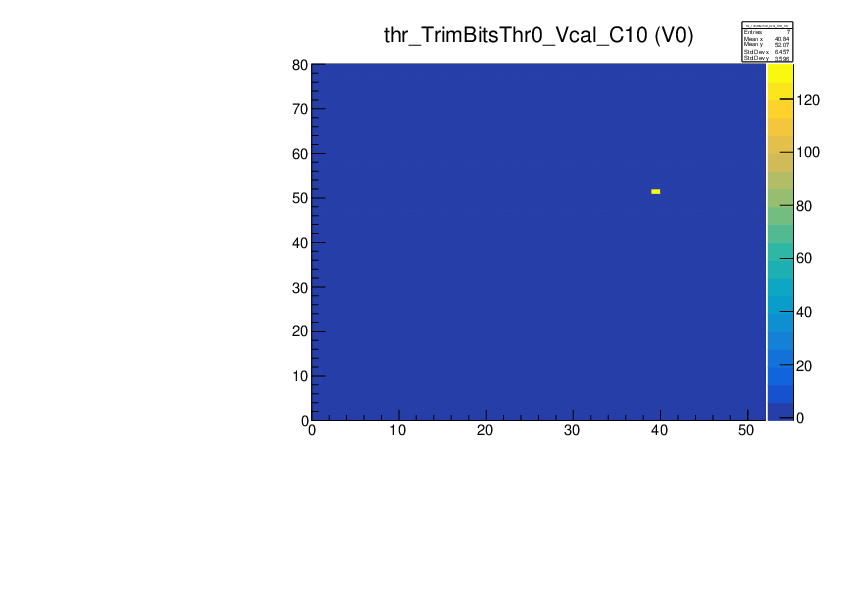

| Module | #defects, ColdBox | #defects, XRay | max #noisy pix | VCal calibration | Grade |
|---|---|---|---|---|---|
| M1623 | 130 | 151 | 91 | 45xVCal-67e- | B |
| M1630 | 80 | 1 | 385 | 43xVCal-290e- | A |
| M1632 | 14 | 9 | 124 | 45xVCal-145e- | A |
| M1634 | 33 | 81 | 339 | 43xVCal-347e- | B |
| M1636 | 10 | 14 | 109 | 46xVCal-255e- | A |
| M1637 | 71 | 45 | 175 | 45xVCal-182e- | A |
| M1638 | 12 | 95 | 269 | 43xVCal-183e- | B |
| M1639 | 21 | 96 | 482 | 43xVCal-441e- | B |
| M1640 | 30 | 22 | 115 | 44xVCal-134e- | A |

|

|

272

|

Mon May 25 16:58:23 2020 |

Andrey Starodumov | XRay HR tests | a few comments |

This is just to record the following information:

1. The measured hit rates at which the Efficiency and X-ray Hit Maps are taken are 40-50% of the 50-400 MHz/cm2 quoted in the titles of the corresponding plots.

2. In 2016 the maximum rate was 400 MHz/cm2. However, at this rate (or, more precisely, at the corresponding HV and current settings of the X-ray tube), the measured rate in the double columns of certain modules was below the 300 MHz/cm2 target. This likely depends on the module position with respect to the X-ray beam spot. Sometimes the extrapolation of the efficiency curve to 300 MHz/cm2 is too large, which I think is not correct. Unfortunately, higher rates cause too many readout errors, which prevent a proper measurement of the hit efficiency. Maybe a new DTB with more than 1.2 A maximum digital current will help.

3. |
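To put numbers on point 2: if measured rates are 40-50% of nominal, the 400 MHz/cm2 setting actually reaches about 160-200 MHz/cm2. Reaching the 300 MHz/cm2 target therefore means extrapolating 100-140 MHz/cm2 beyond the last measured point. The sketch below fits a straight line to efficiency vs. measured rate and reports both the extrapolated efficiency and that distance. The linear model is an assumption chosen for simplicity and is not necessarily the fit MoReWeb uses; the function names are hypothetical.

```python
from typing import Sequence, Tuple

TARGET_RATE = 300.0  # MHz/cm2, target rate quoted above


def linear_fit(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit y = a + b*x; returns (a, b)."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b


def extrapolate_efficiency(rates: Sequence[float], effs: Sequence[float],
                           target: float = TARGET_RATE) -> Tuple[float, float]:
    """Return (fitted efficiency at target, distance beyond the highest measured rate)."""
    a, b = linear_fit(rates, effs)
    return a + b * target, max(0.0, target - max(rates))


if __name__ == "__main__":
    for fraction in (0.4, 0.5):
        reached = 400.0 * fraction  # measured rate for the 400 MHz/cm2 setting
        print(f"{fraction:.0%}: last point {reached:.0f} MHz/cm2, "
              f"extrapolation over {TARGET_RATE - reached:.0f} MHz/cm2")
```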

|

279

|

Fri May 29 15:04:04 2020 |

Andrey Starodumov | XRay HR tests | Analysis of HRT: M1555-M1561 and M1564 |

| Module | #defects, ColdBox | #defects, XRay | max #noisy pix | VCal calibration | Grade |
|---|---|---|---|---|---|
| M1555 | 24 | 44 | 375 | 44xVCal-388e- | A |
| M1556 | 54 | 74 | 507 | 43xVCal-370e- | B |
| M1557 | 109 | 63 | 145 | 43xVCal-216e- | A |
| M1558 | 127 | 113 | 103 | 44xVCal-145e- | B |
| M1559 | 69 | 59 | 92 | 46xVCal-119e- | A |
| M1560 | 35 | 54 | 66 | 46xVCal-123e- | A |
| M1561 | 33 | 44 | 129 | 45xVCal-244e- | A |
| M1564 | 26 | 36 | 93 | 47xVCal-29e- | A |

|

|

299

|

Fri Aug 28 12:02:48 2020 |

Andrey Starodumov | XRay HR tests | M1599 |

| ROC5 has an efficiency of 93.65% and should be graded C. Why was the efficiency not taken into account in the HR test grading? |

|

3

|

Tue Aug 6 16:01:17 2019 |

Matej Roguljic | Software | Wrong dac settings - elComandante |

| If elComandante seems to be taking wrong DAC settings for tests such as the Reception test or full qualification, remember that it does NOT read values from module-specific folders like "M1523". It reads them from TBM-specific folders like "tbm10d". The folders from which the DACs are taken are listed in "elComandante.conf", in lines that look like "tbm10d:tbm10d". |
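The DAC folders are resolved through the `[defaultParameters]` section of the configuration file. A quick way to check which folder a given key points to is therefore to parse that section. The sketch below assumes the file is INI-style and readable by Python's `configparser` (not verified for the complete file). The `dac_folder` helper, its `data_dir` argument and the sample content are illustrative.

```python
import configparser
from pathlib import Path


def dac_folder(conf_text: str, key: str, data_dir: str) -> Path:
    """Resolve the pXar data folder that a key such as 'tbm10d' maps to."""
    # interpolation=None: do not treat '%' in other entries as substitutions
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(conf_text)
    folder = parser.get("defaultParameters", key)  # 'tbm10d: tbm10d' -> 'tbm10d'
    return Path(data_dir) / folder.strip()


if __name__ == "__main__":
    sample = "[defaultParameters]\ntbm10d: tbm10d\n"
    print(dac_folder(sample, "tbm10d", "/home/l_tester/L1_SW/pxar/data"))
```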

|

14

|

Thu Sep 26 15:51:30 2019 |

Dinko Ferencek | Software | pXar code updated |

pXar code in /home/l_tester/L1_SW/pxar/ on the lab PC was updated yesterday from https://github.com/psi46/pxar/tree/15b956255afb6590931763fd07ed454fb9837fc0 to the latest version https://github.com/psi46/pxar/tree/e17df08c7bbeb8472e8f56ccd2b9d69a113ccdc3 which among other things contains updated DAC settings for ROCs.

All the configs will have to be regenerated before the start of the module qualification. |

|

17

|

Thu Sep 26 22:09:14 2019 |

Dinko Ferencek | Software | DAC configuration update |

In accordance with the agreement made in an email thread initiated by Danek, the following changes to DAC settings for ROCs

vsh: 30 -> 8

vclorbias: 30 -> 120

ctrlreg: 0 -> 9

were propagated into existing configuration files in

/home/l_tester/L1_SW/pxar/data/tbm10c/

/home/l_tester/L1_SW/pxar/data/tbm10d/

/home/l_tester/L1_SW/pxar/data/M1522/

on the lab PC at PSI.

It should be noted that ctrlreg was changed to the recommended value for PROC V3. For PROC V4, ctrlreg needs to be set to 17, which is important to keep in mind when combining configuration files and modules built with different PROC versions. |
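Propagating such changes by hand is error-prone. The sketch below shows how the update could be scripted, with CtrlReg chosen per PROC version as described above. It rests on assumptions not confirmed by this entry:

- The files are named `dacParameters*.dat`.
- Each line ends with a DAC name followed by its integer value.

Check both assumptions against the real pXar files before use. The helper names are hypothetical.

```python
import re
from pathlib import Path

# Changes agreed above; CtrlReg depends on the PROC version
COMMON_UPDATES = {"vsh": 8, "vclorbias": 120}
CTRLREG = {"v3": 9, "v4": 17}

# Assumed line layout: optional leading fields, then "<name> <value>"
DAC_LINE = re.compile(
    r"^(?P<head>.*?)(?P<name>[A-Za-z]\w*)(?P<sep>\s+)(?P<value>\d+)(?P<tail>\s*)$"
)


def update_dac_text(text: str, updates: dict) -> str:
    """Return text with the values of the listed DACs replaced (names case-insensitive)."""
    wanted = {name.lower(): value for name, value in updates.items()}
    out = []
    for line in text.splitlines(keepends=True):
        m = DAC_LINE.match(line)
        if m and m.group("name").lower() in wanted:
            new_value = wanted[m.group("name").lower()]
            line = (m.group("head") + m.group("name") + m.group("sep")
                    + str(new_value) + m.group("tail"))
        out.append(line)
    return "".join(out)


def update_folder(folder: Path, proc: str) -> None:
    """Apply the agreed changes to every dacParameters*.dat file in folder."""
    updates = dict(COMMON_UPDATES, ctrlreg=CTRLREG[proc])
    for path in sorted(folder.glob("dacParameters*.dat")):
        path.write_text(update_dac_text(path.read_text(), updates))
```

Lines whose DAC name is not in the update list are written back unchanged.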

|

21

|

Wed Oct 2 12:50:52 2019 |

Dinko Ferencek | Software | Problem with elComandante Keithley client during full qualification |

Full qualification was attempted for M1532 on Oct. 1. After the second Fulltest at -20 C finished, the Keithley client crashed with the following error:

File "/home/l_tester/L1_SW/elComandante/keithleyClient/keithleyInterface.py", line 147, in check_busy

self.check_busy(data[1:])

File "/home/l_tester/L1_SW/elComandante/keithleyClient/keithleyInterface.py", line 147, in check_busy

self.check_busy(data[1:])

File "/home/l_tester/L1_SW/elComandante/keithleyClient/keithleyInterface.py", line 147, in check_busy

self.check_busy(data[1:])

File "/home/l_tester/L1_SW/elComandante/keithleyClient/keithleyInterface.py", line 147, in check_busy

self.check_busy(data[1:])

File "/home/l_tester/L1_SW/elComandante/keithleyClient/keithleyInterface.py", line 147, in check_busy

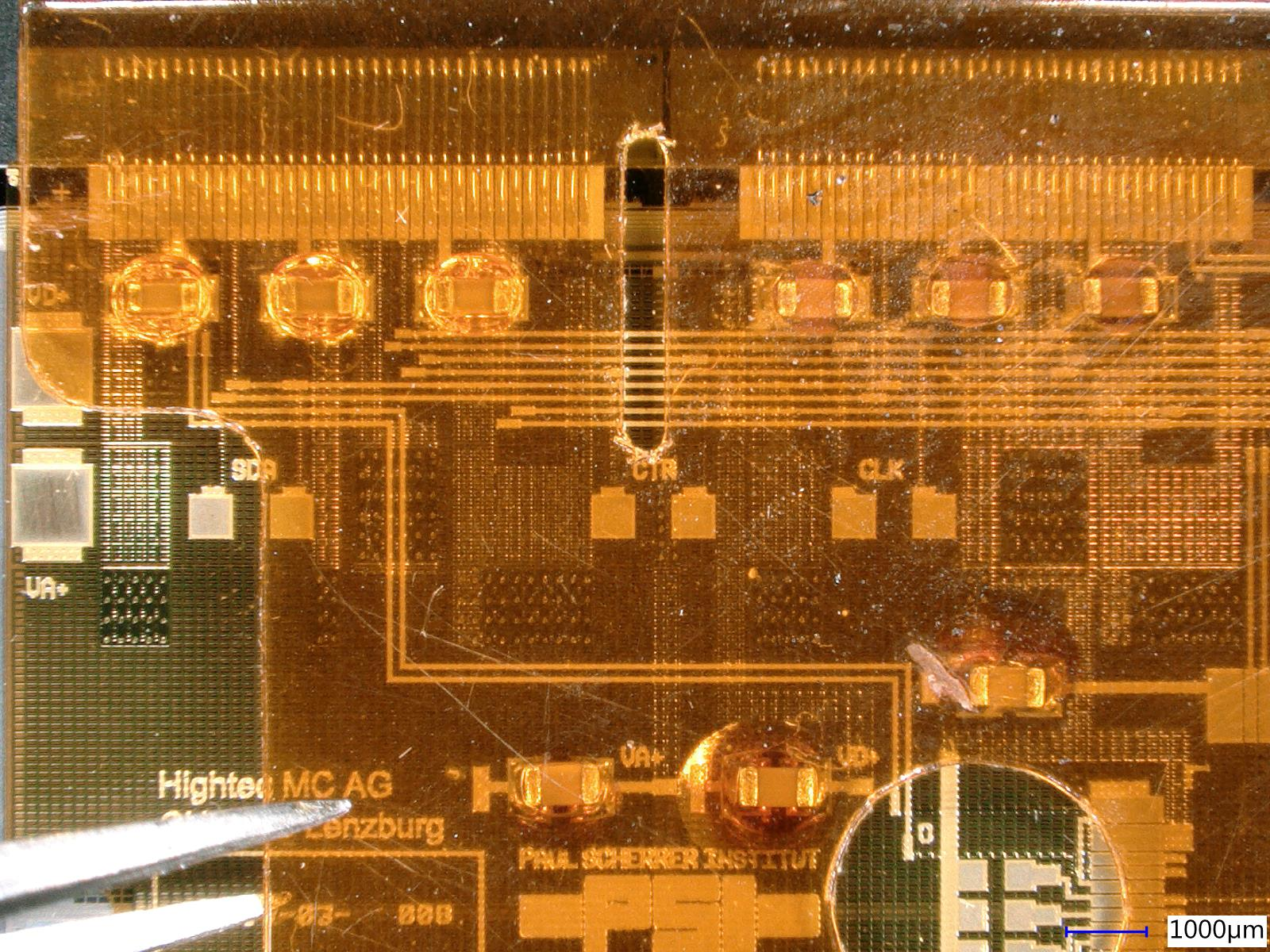

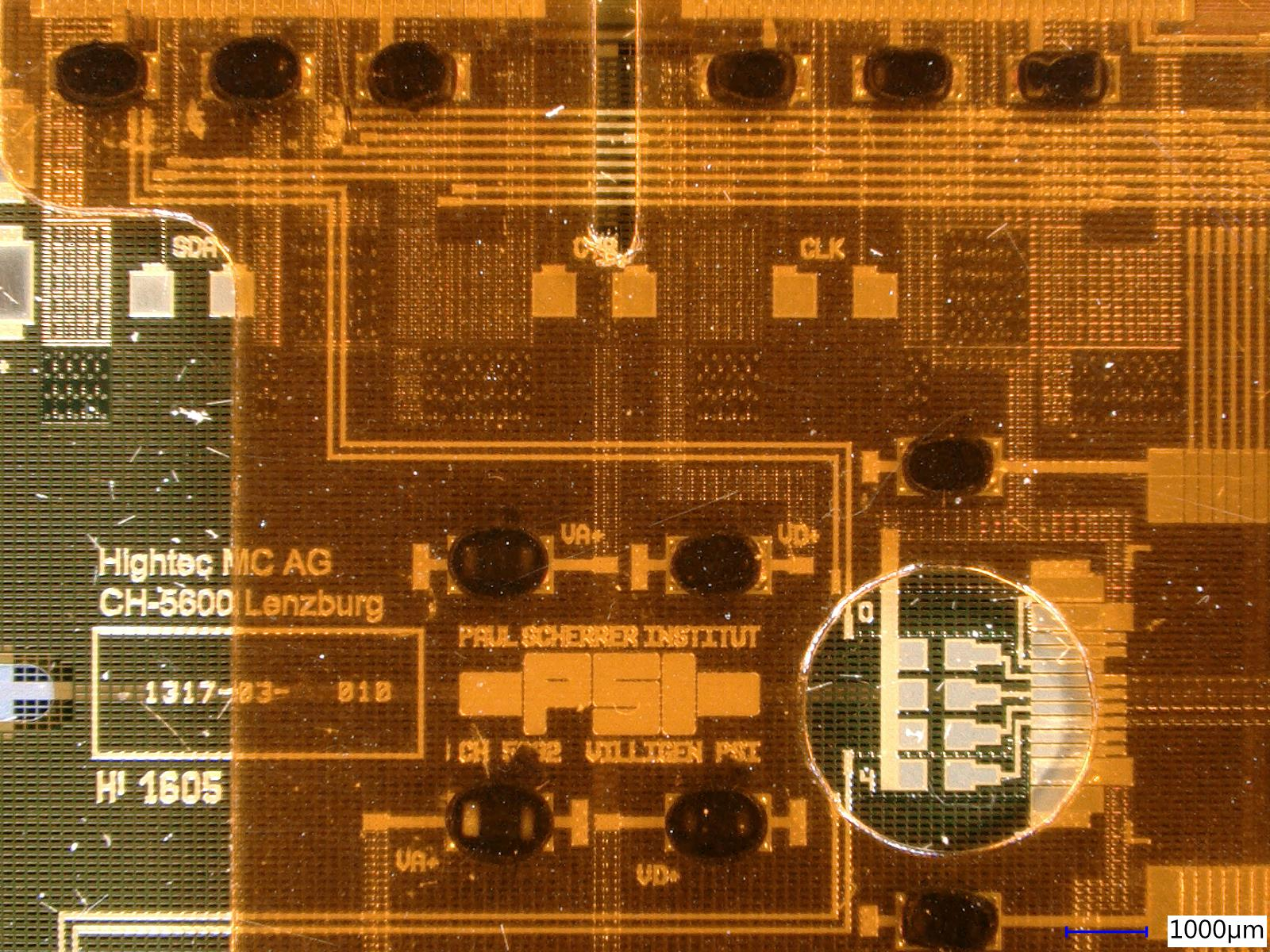

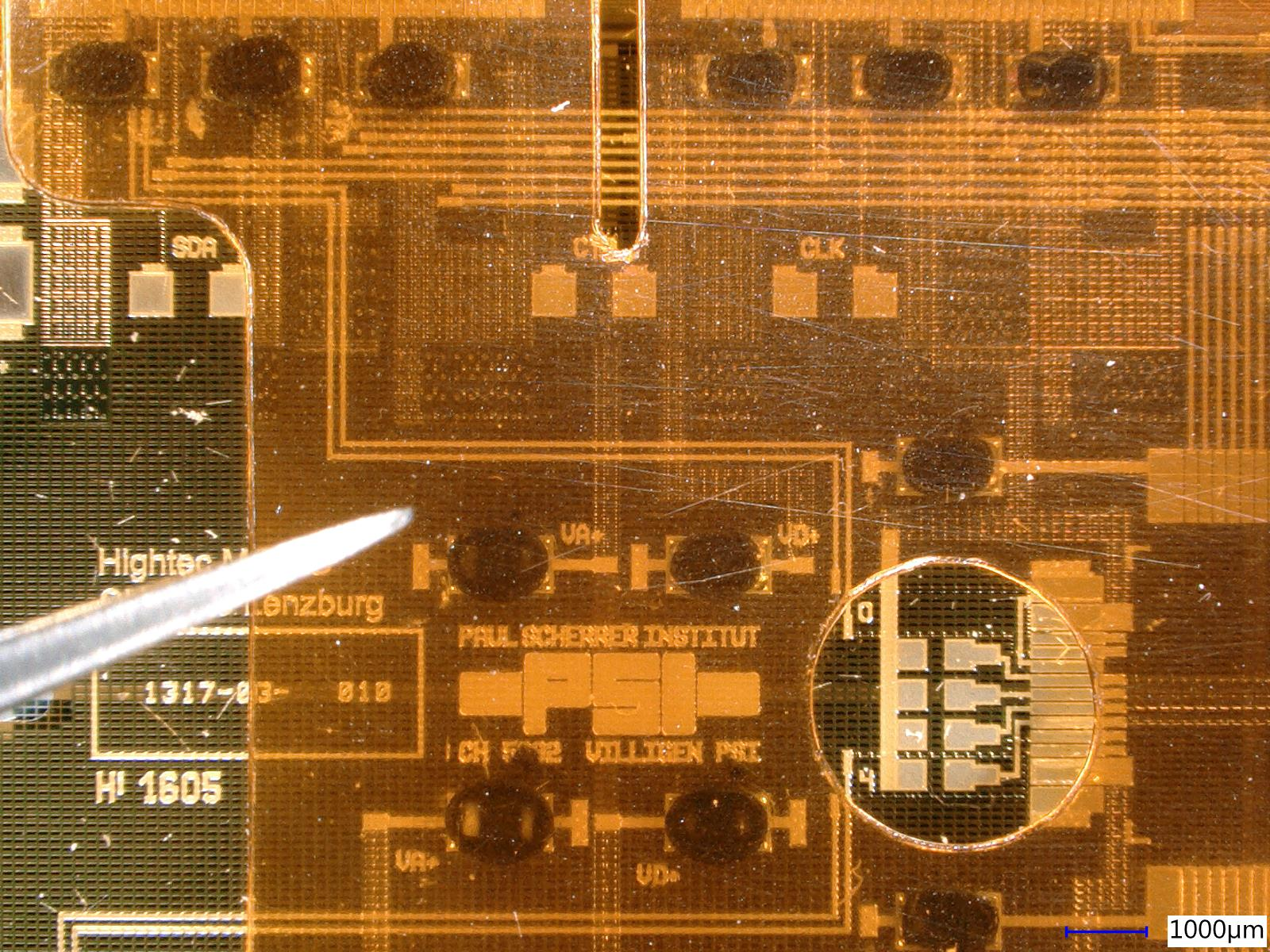

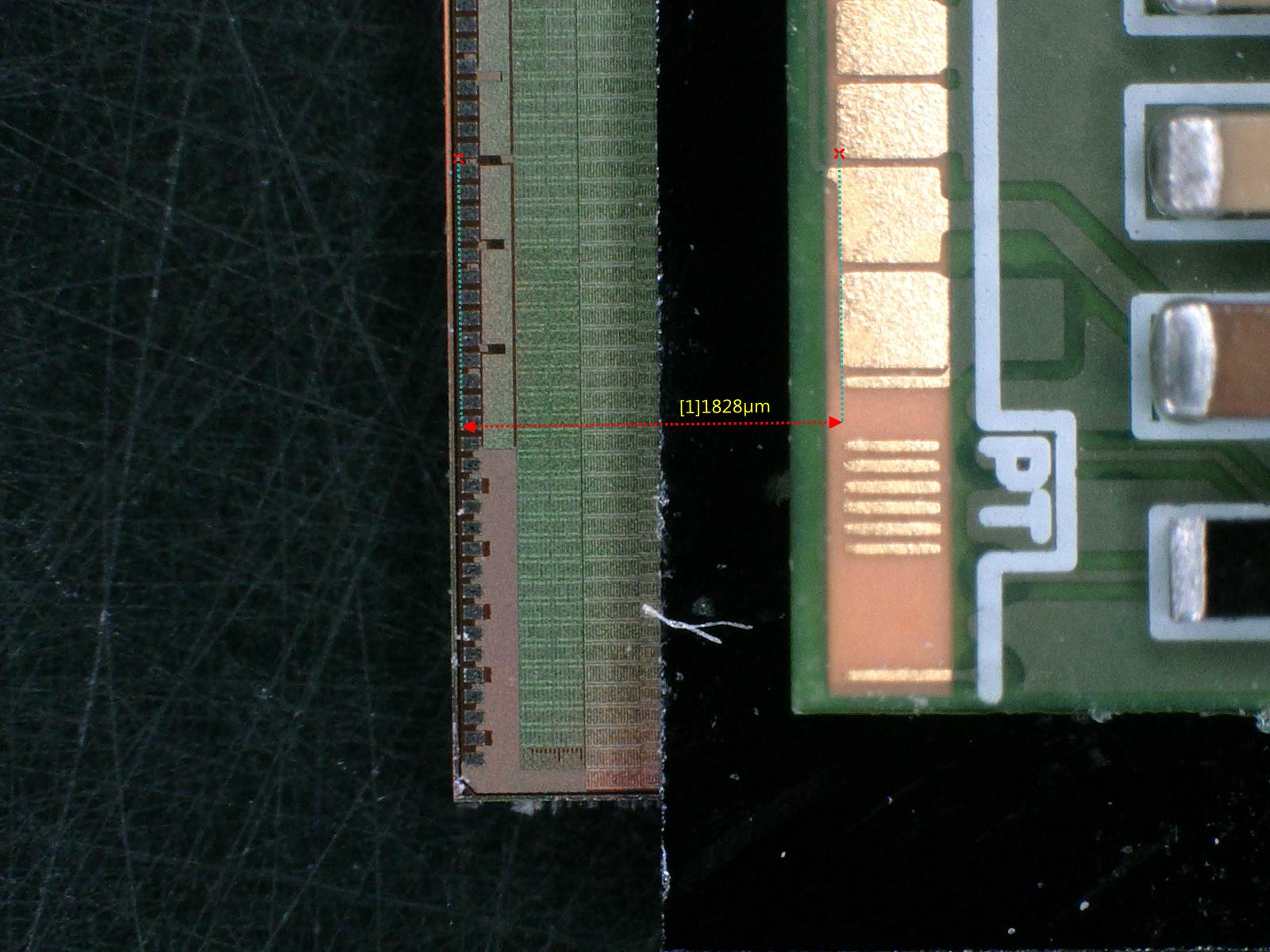

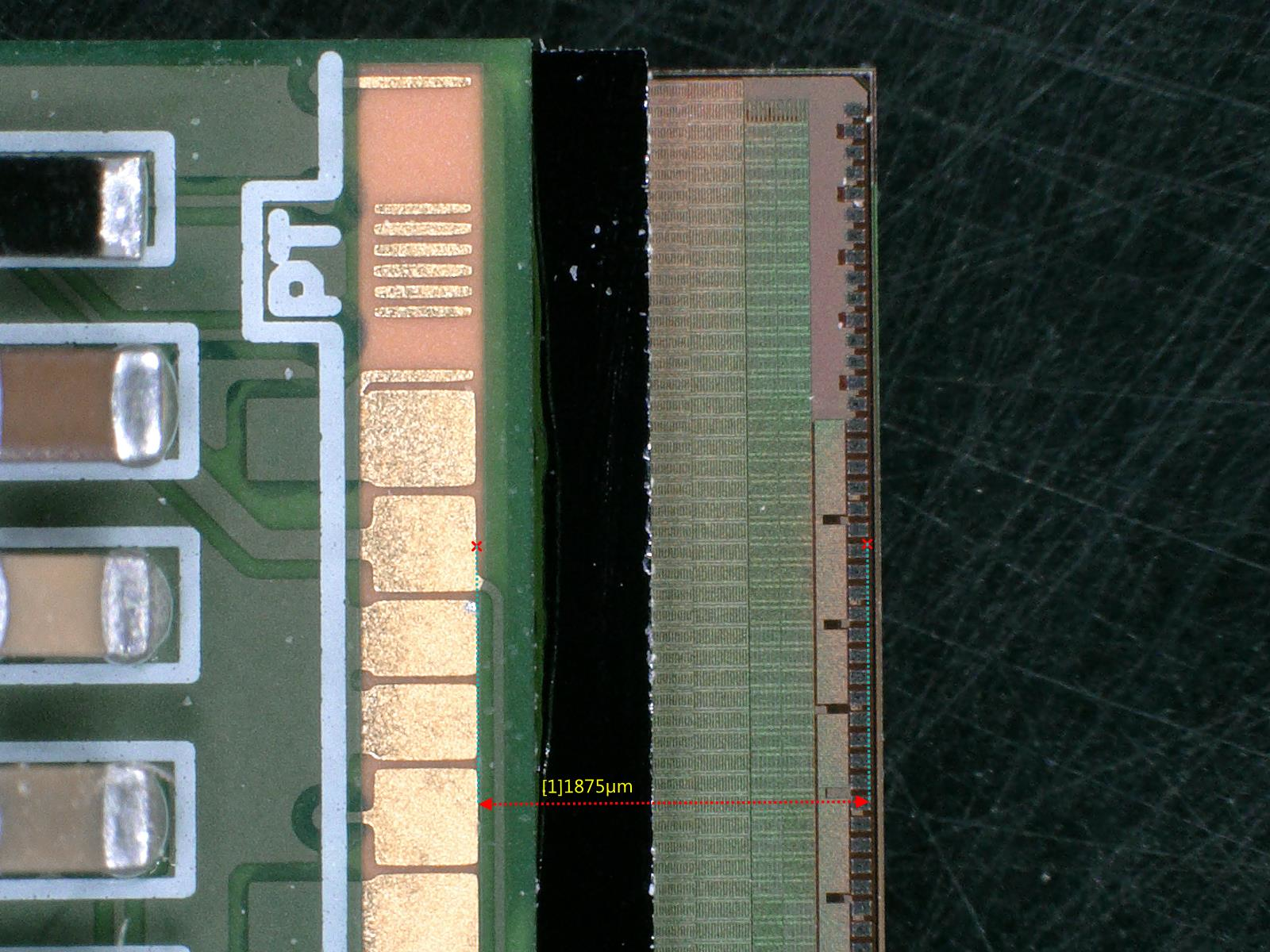

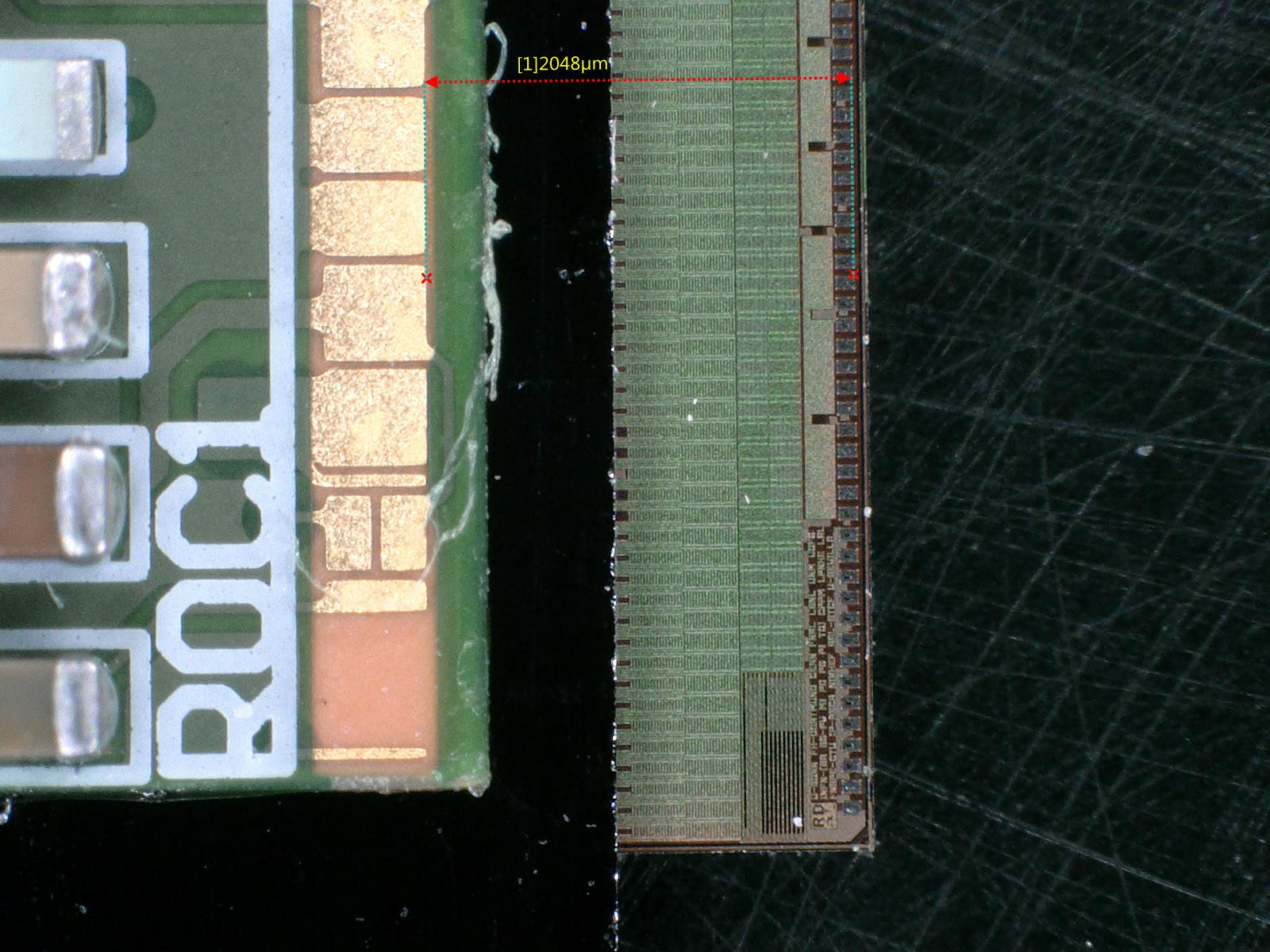

self.check_busy(data[1:])

File "/home/l_tester/L1_SW/elComandante/keithleyClient/keithleyInterface.py", line 147, in check_busy

self.check_busy(data[1:])

File "/home/l_tester/L1_SW/elComandante/keithleyClient/keithleyInterface.py", line 139, in check_busy

if data[0] == '\x11': # XON

RuntimeError: maximum recursion depth exceeded in cmp

Because of this, the IV measurement never started (the main elComandante process was simply hanging and waiting for the Keithley client to report it's ready) and the main elComandante process had to be interrupted. |
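The traceback shows `check_busy` calling itself once per character (`self.check_busy(data[1:])`). A long enough reply from the Keithley can therefore exhaust the interpreter's recursion limit, which is 1000 frames by default in CPython. The rest of the method is not shown above, so what follows is only a hypothetical iterative rewrite of the same idea, written for Python 3. It scans the buffer once and keeps track of the last XON (`\x11`) or XOFF (`\x13`) flow-control character. The return convention is an assumption.

```python
XON = "\x11"   # device is ready to accept data
XOFF = "\x13"  # device asks the host to pause


def check_busy(data: str):
    """Return True if the last flow-control character in data is XOFF,
    False if it is XON, and None if there is none.

    A plain loop replaces recursion on data[1:], so the stack depth no
    longer grows with the length of the buffer.
    """
    busy = None
    for ch in data:
        if ch == XON:
            busy = False
        elif ch == XOFF:
            busy = True
    return busy


if __name__ == "__main__":
    long_reply = XON * 5000  # longer than the default recursion limit of 1000
    print(check_busy(long_reply))
```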

|

22

|

Sat Oct 5 22:59:58 2019 |

Dinko Ferencek | Software | Problem with elComandante Keithley client during full qualification |

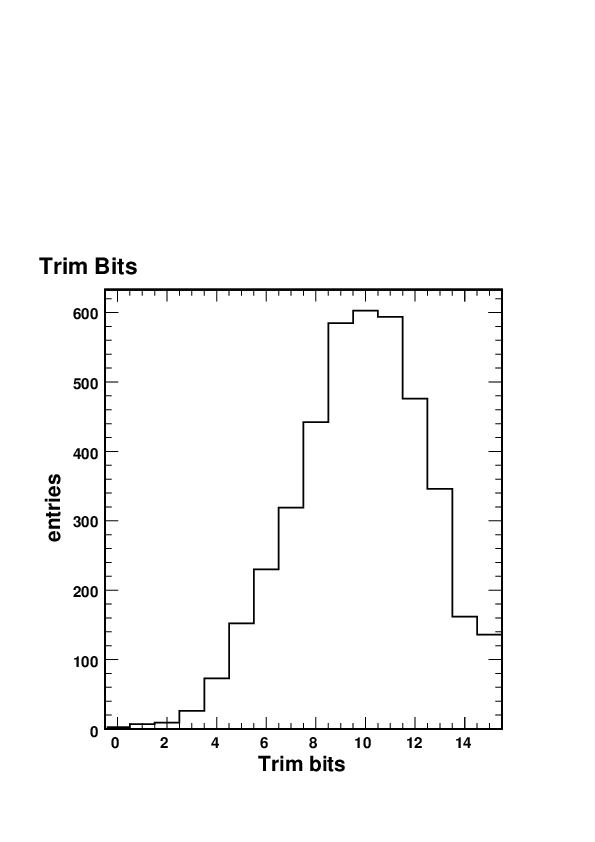

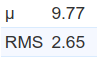

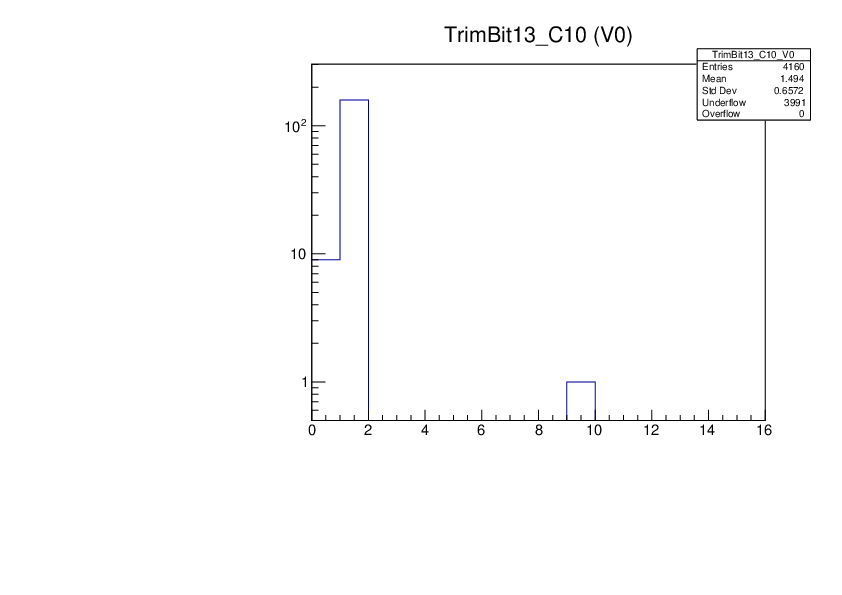

| A new attempt to run the full qualification for M1532 was made on Friday, Oct. 4, but the Keithley client crashed with the same error message. This time we could see from the log files that the crash happened after the first IV measurement at -20 C was complete and the Keithley was reset to -150 V. Unfortunately, the log files were not saved for the test on Tuesday, so we could not confirm that the crash occurred at the same point. |

|

23

|

Mon Oct 28 17:01:05 2019 |

Matej Roguljic | Software | Investigating the bug with the Keithley client |

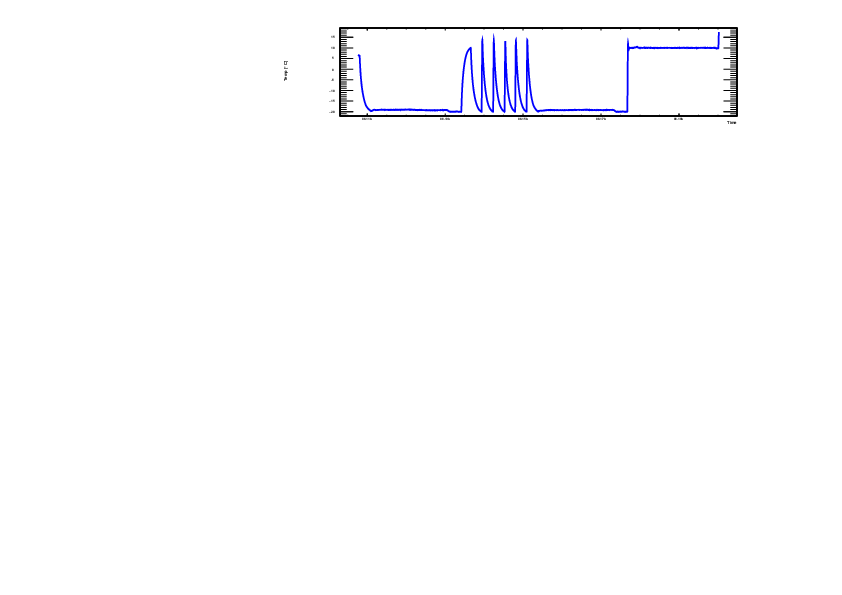

We took module M1529 and tried to recreate the issue observed at the beginning of October. To do this in a reasonable amount of time, a "shorttest" procedure was defined, consisting only of pretest and pixelalive. Three runs were taken:

Run number 1: Shorttest@10,IV@10

Run number 2: Shorttest@10, IV@10,Cycle(n=1, between 10 and -10), Shorttest@10, IV@10

Run number 3: Shorttest@10, IV@10, Cycle(n=5, between 10 and -10), Shorttest@10, IV@10

In runs 1 and 2, the IV scan went from -5 V to -155 V in steps of 10 V.

In run 3, the IV scan went from -5 V to -405 V in steps of 10 V.
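For reference, the scan points implied by these settings (start at -5 V, then step 10 V towards more negative bias) can be generated as below. This is a minimal sketch; the function name is illustrative.

```python
def iv_points(start: int, stop: int, step: int) -> list:
    """Bias voltages from start to stop (inclusive) in steps of `step` volts,
    moving towards more negative values."""
    return list(range(start, stop - 1, -step))


if __name__ == "__main__":
    for stop in (-155, -405):
        pts = iv_points(-5, stop, 10)
        print(f"-5 V to {stop} V: {len(pts)} points, last {pts[-1]} V")
```

The short scan of runs 1 and 2 has 16 points; the long scan of run 3 has 41.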

No issues were observed during the tests themselves.

The MoReWeb output shows the Temperature, Humidity and Sum of Currents plots, while the individual tests show only the Pixel Alive map. The IV plot is missing from the MoReWeb output, although the data are present in the ivCurve.log file. We tried to find out why the IV curve is not shown, but could not get to the bottom of it.

Tomorrow we will use M1529 and M1530 in a FullTest to check whether the problem reappears. |

|

26

|

Tue Oct 29 16:38:32 2019 |

Matej Roguljic | Software | Investigating the bug with the Keithley client |


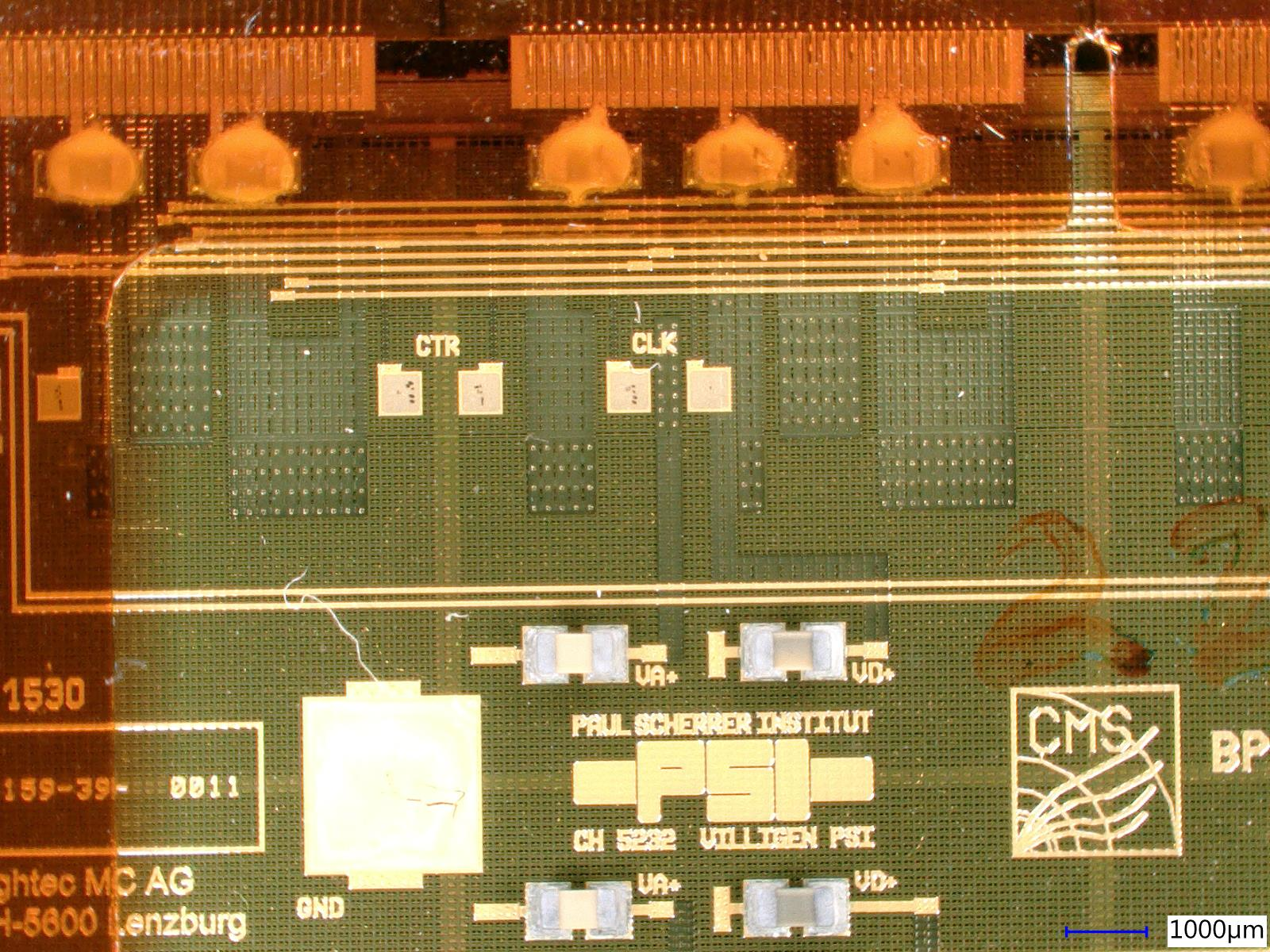

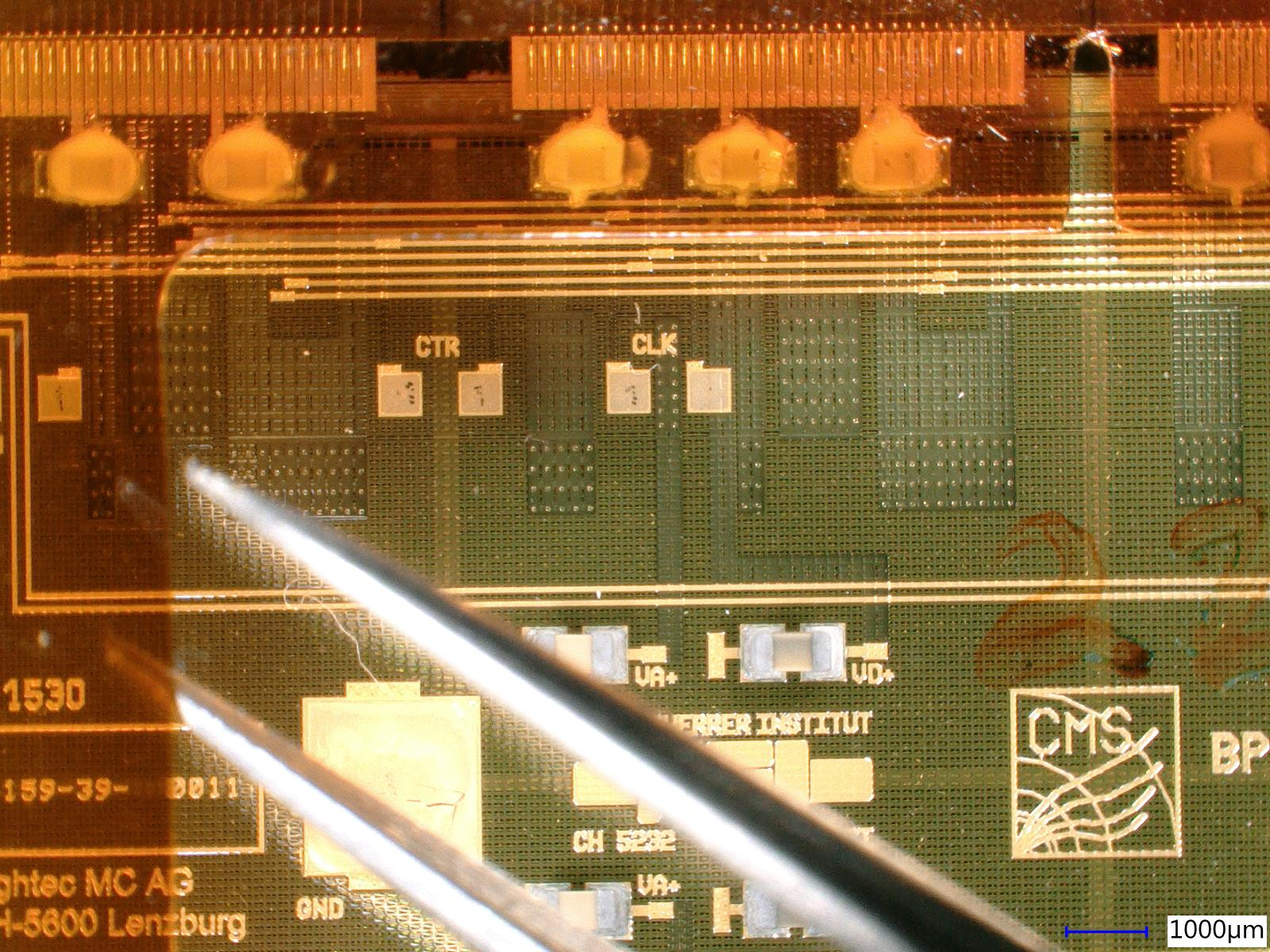

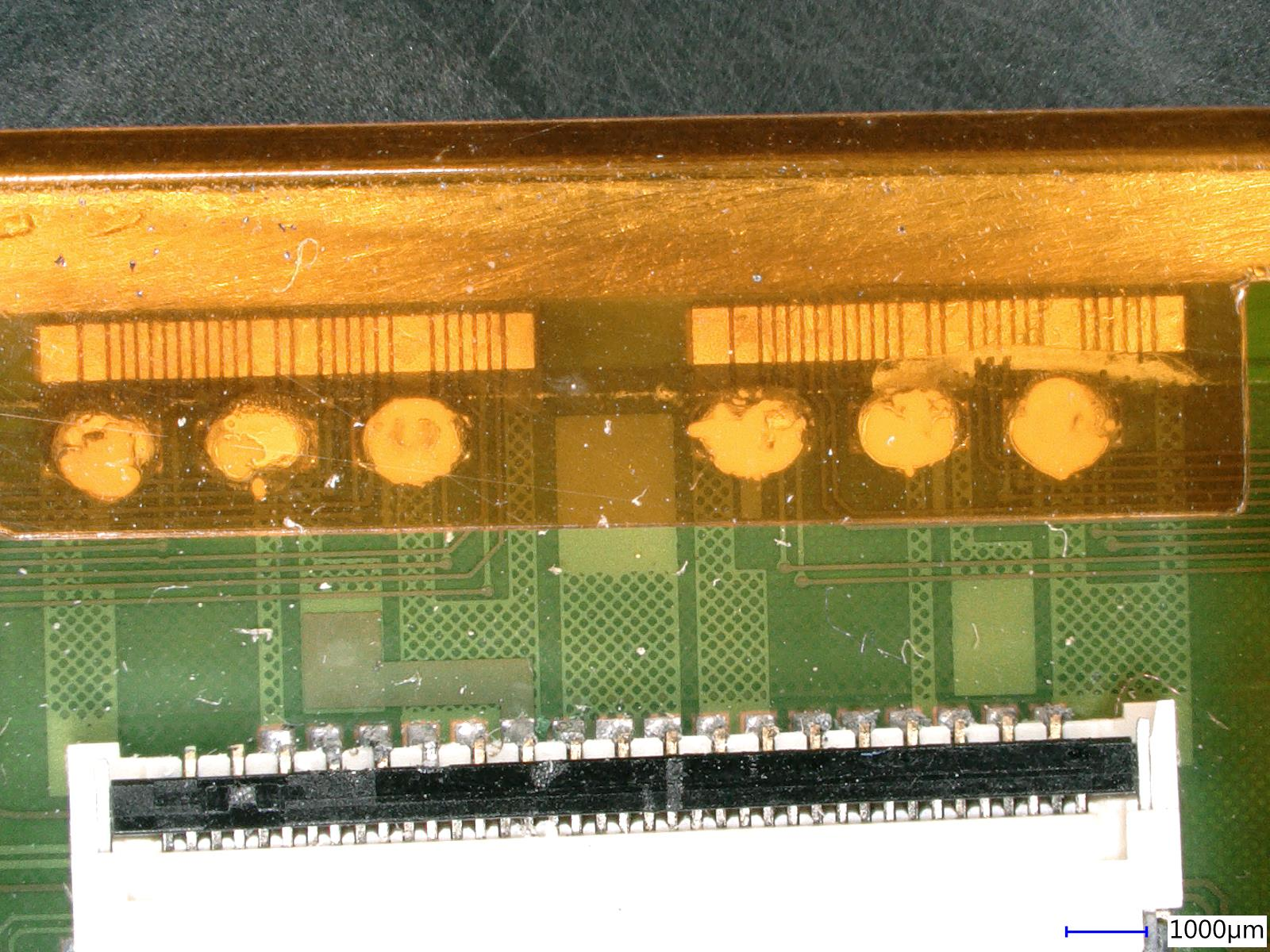

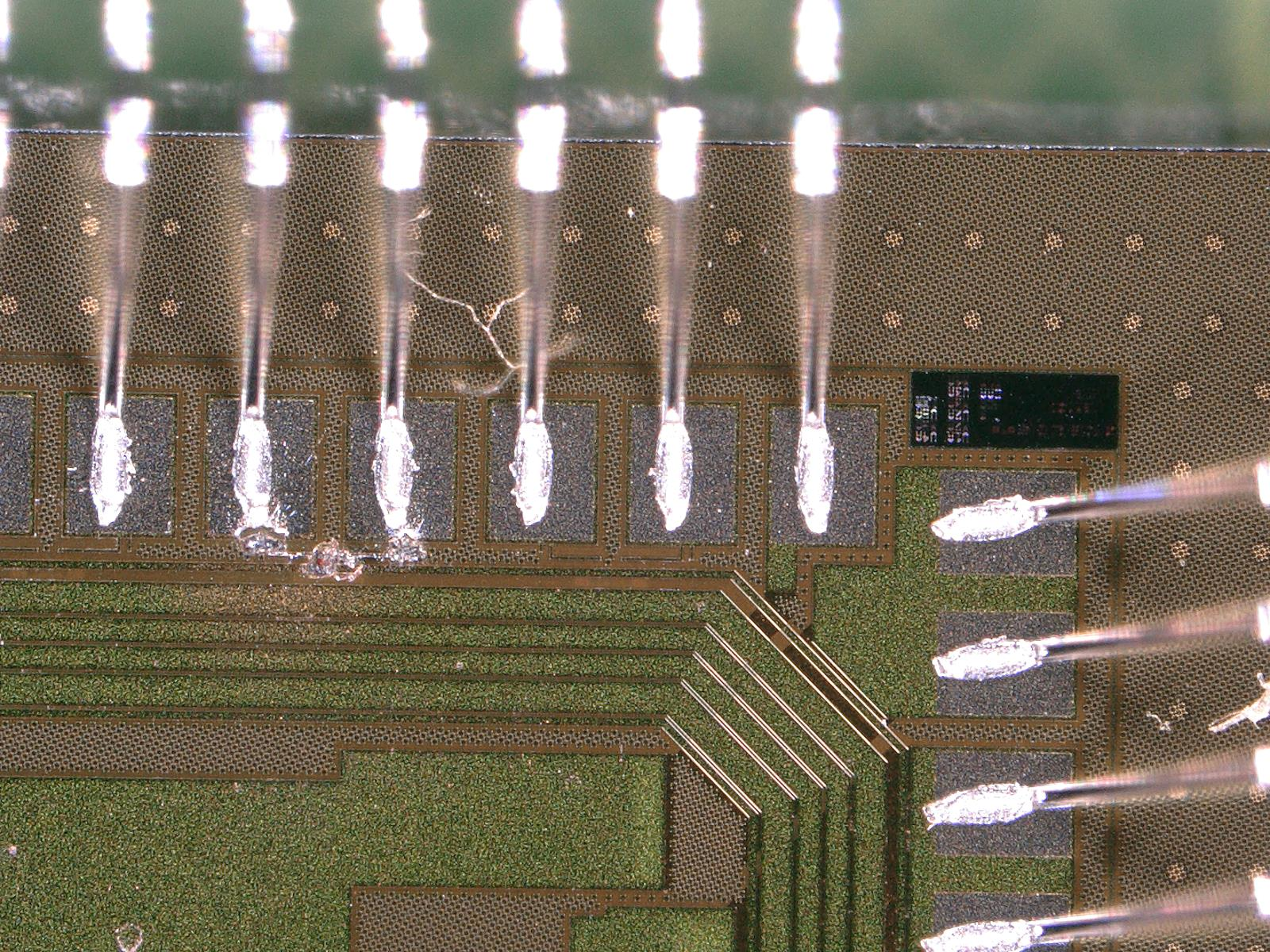

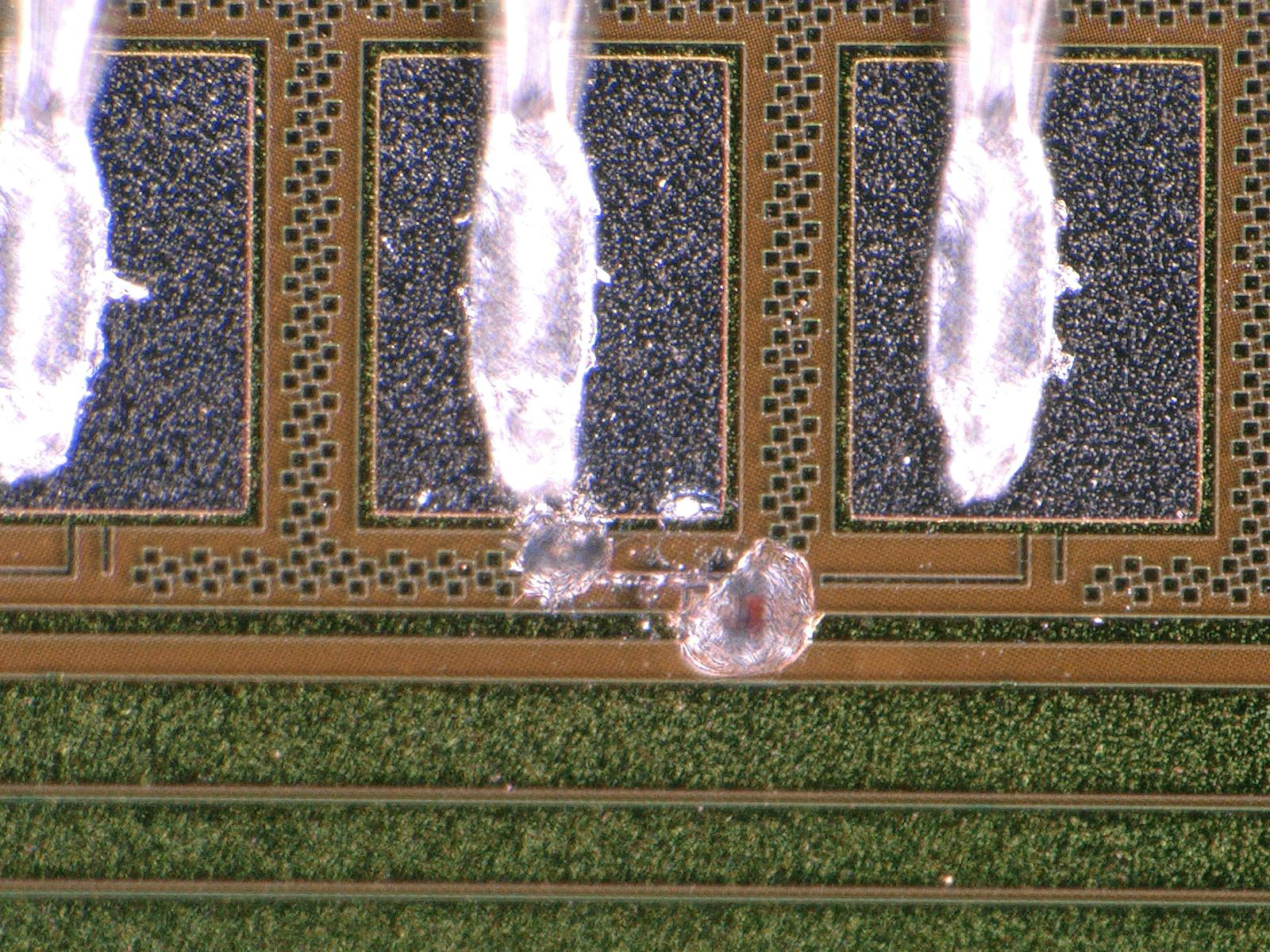

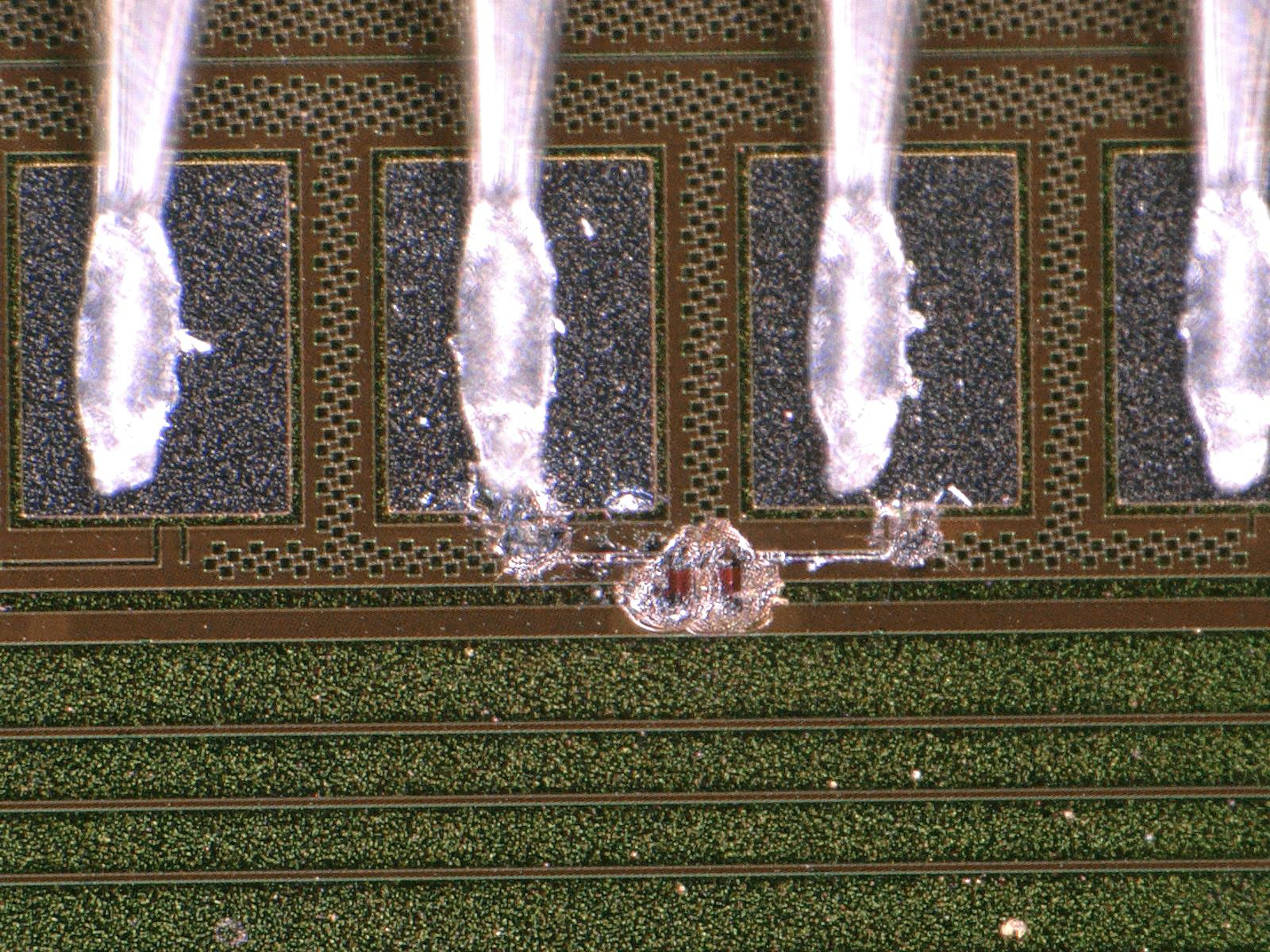

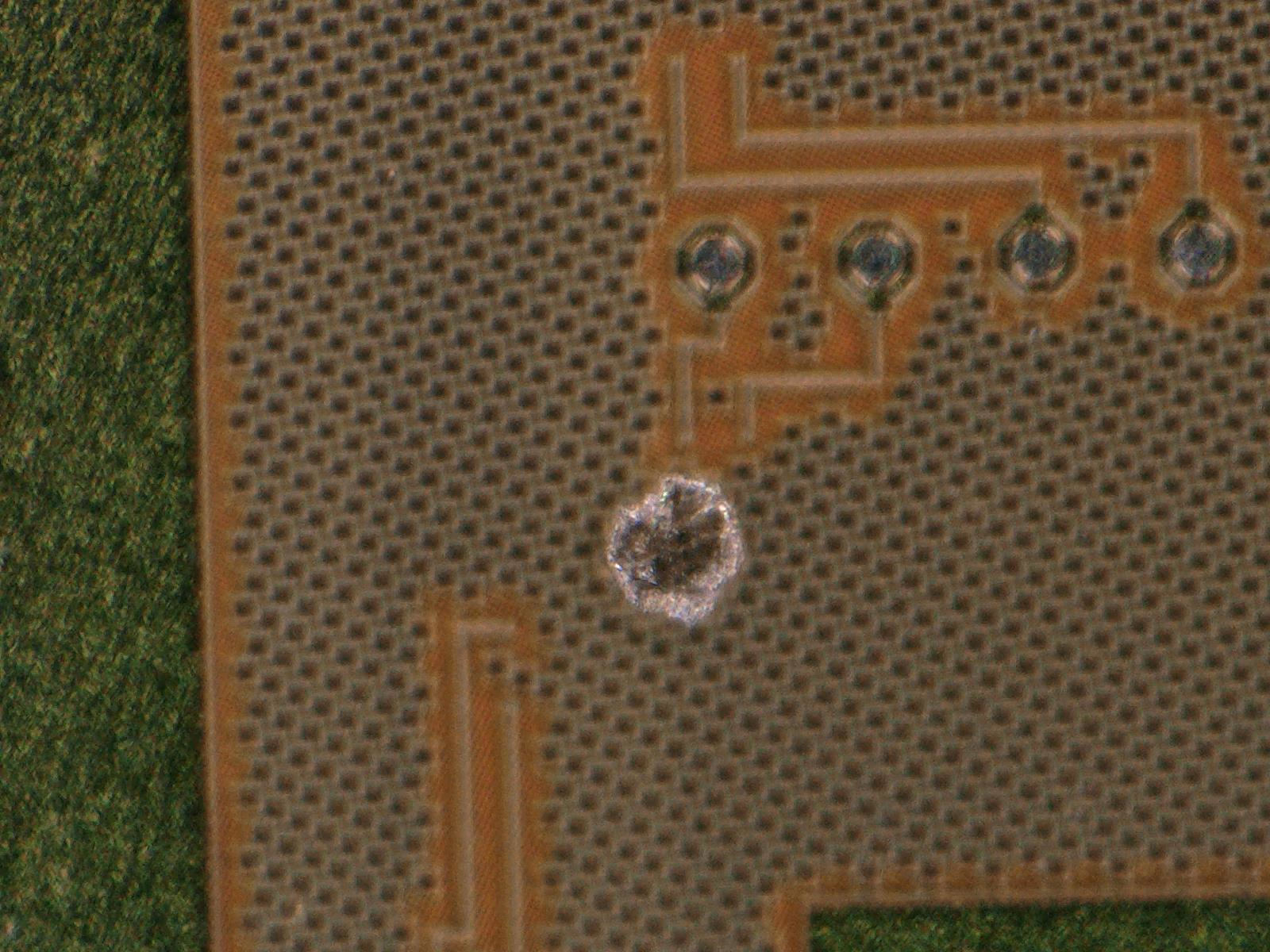

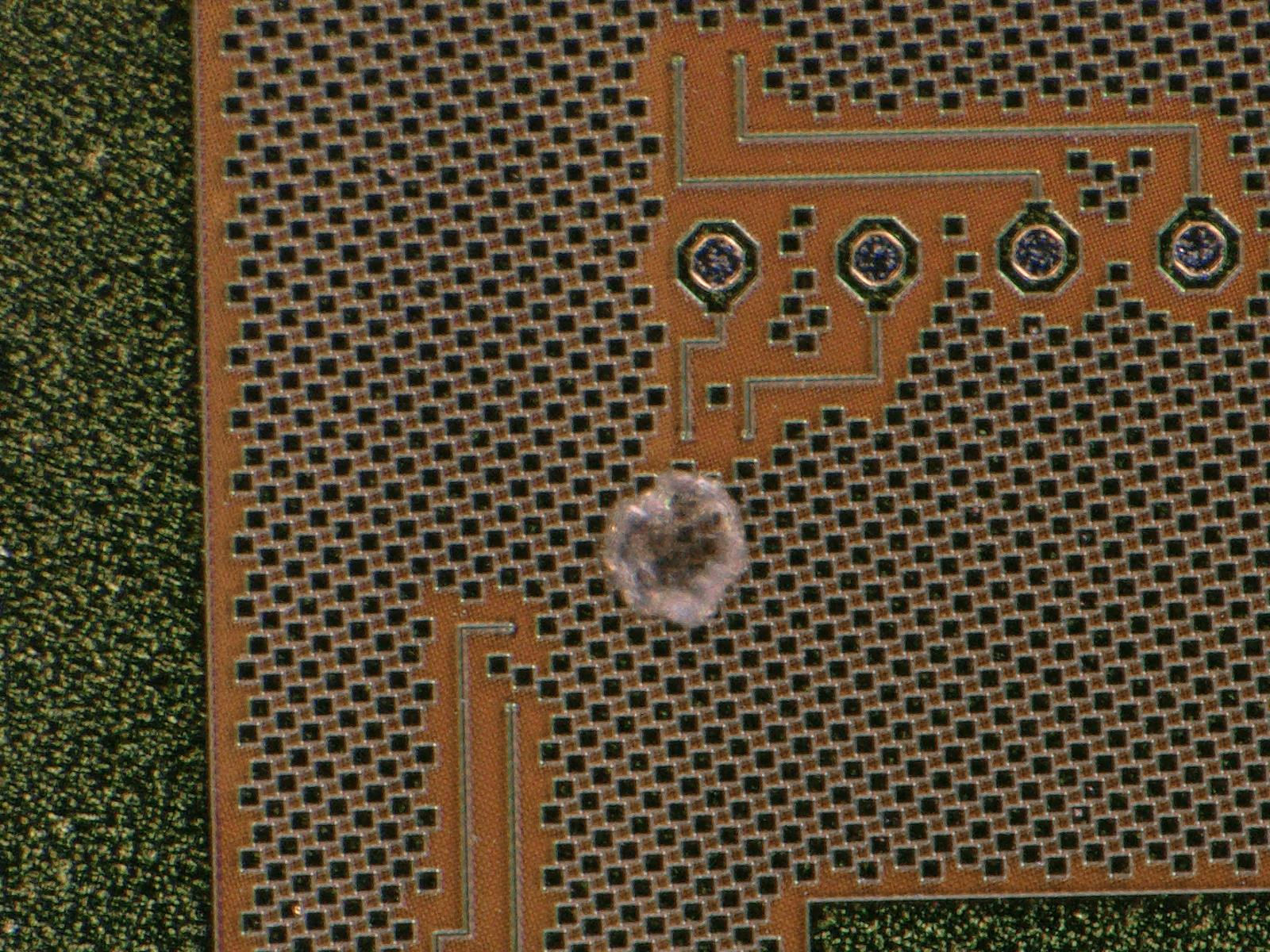

M1530 was put in the cold box and qualification was started, but the pxar output was full of deserializer errors. The continuous stream of errors made the log file quite large and slowed down the qualification, so it was aborted. Later we noticed green deposits on the module, similar to those on the HDIs irradiated at the 60Co facility in Zagreb, which is probably why there were so many errors.

M1521 was used instead of M1530 along with M1529. Full qualification was launched around 11:00. |

|

30

|

Wed Oct 30 16:54:58 2019 |

Matej Roguljic | Software | Investigating the bug with the Keithley client |

| Matej Roguljic wrote: |


M1521 was used instead of M1530 along with M1529. Full qualification was launched around 11:00. It ended around 6:30 without any issues. |

Full qualification was run on the morning of 30.10 on modules M1510, M1529 and M1521. A fourth module was not included because of an issue with the fourth DTB, which will be investigated later. The qualification ended around 17:00, again without issues. In conclusion, the issue is currently not present in the qualification setup. |

|

36

|

Wed Nov 27 18:25:08 2019 |

Dinko Ferencek | Software | pXar code updated |

pXar code in /home/l_tester/L1_SW/pxar/ on the lab PC was updated on Monday, Nov. 25 from https://github.com/psi46/pxar/tree/e17df08c7bbeb8472e8f56ccd2b9d69a113ccdc3 to https://github.com/psi46/pxar/tree/9c3b81791738e1b8ec7dd9f0d1b68f8800f8416c which pulled in the latest updates to the pulse height optimization test. Today a few remaining updates were pulled in by going to the current HEAD of the master branch https://github.com/psi46/pxar/tree/5d358c5ebbf095a7d118cdde9e1e509c41ccc615.

The sequence of commands to update the code was the following:

cd /home/l_tester/L1_SW/pxar/

git pull origin master

cd build

cmake ..

make -j6 install

|

|

37

|

Wed Nov 27 21:50:02 2019 |

Dinko Ferencek | Software | Reorganized pXar configuration files for pixel modules |

Due to different DAC settings needed for PROC V3 and V4, a single folder containing module configuration files cannot cover both ROC types. To address this problem, the existing folder 'tbm10d' in /home/l_tester/L1_SW/pxar/data/ containing configuration for PROC V4 was renamed to 'tbm10d_procv4' and a new folder for PROC V3, 'tbm10d_procv3', was created. For backward compatibility, a symbolic link 'tbm10d' was created that points to 'tbm10d_procv4'.
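The reorganization amounts to a rename plus a relative symbolic link. The sketch below does this with `pathlib` and should be tried on a scratch directory first. The `reorganize` name is illustrative, and the PROC V3 folder is only created empty here, since its content is produced separately.

```python
import tempfile
from pathlib import Path


def reorganize(data_dir: Path) -> None:
    """Rename tbm10d -> tbm10d_procv4, create tbm10d_procv3 and leave a
    tbm10d symlink pointing to tbm10d_procv4 for backward compatibility."""
    old = data_dir / "tbm10d"
    v4 = data_dir / "tbm10d_procv4"
    v3 = data_dir / "tbm10d_procv3"
    old.rename(v4)
    v3.mkdir(exist_ok=True)  # PROC V3 configuration files are filled in separately
    # A relative target keeps the link valid if the data directory is moved
    old.symlink_to("tbm10d_procv4", target_is_directory=True)


if __name__ == "__main__":
    # Dry run on a scratch directory instead of the real pxar/data folder
    with tempfile.TemporaryDirectory() as tmp:
        scratch = Path(tmp)
        (scratch / "tbm10d").mkdir()
        (scratch / "tbm10d" / "configParameters.dat").write_text("")
        reorganize(scratch)
        print(sorted(p.name for p in scratch.iterdir()))
```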

The difference in the configurations is in the CtrlReg DAC value which is 9 for V3 and 17 for V4.

The following lines were also added in the [defaultParameters] section in /home/l_tester/L1_SW/elComandante/config/elComandante.conf

tbm10d_procv3: tbm10d_procv3

tbm10d_procv4: tbm10d_procv4 |

|

39

|

Thu Nov 28 18:23:31 2019 |

Dinko Ferencek | Software | Reorganized pXar configuration files for pixel modules |


Since the new PH optimization code was added under the already existing PH test, the old configuration files would have to be updated to have the correct parameter values set for the expanded PH test. For this purpose, the old configuration folders were renamed

tbm10d_procv3 --> tbm10d_procv3_old

tbm10d_procv4 --> tbm10d_procv4_old

and new configuration files were re-generated from scratch

./mkConfig -d ../data/tbm10d_procv3 -t TBM10D -r proc600v3 -m

./mkConfig -d ../data/tbm10d_procv4 -t TBM10D -r proc600v4 -m

In addition, in both sets of configuration files, the configuration for the BB2 tab in the pXar GUI was moved from moreTestParameters.dat to testParameters.dat, so that the BB2 tab is available by default when starting pXar with these configuration files. |

|

40

|

Thu Nov 28 18:34:00 2019 |

Dinko Ferencek | Software | pXar code updated |

pXar code updated to the current HEAD of the master branch https://github.com/psi46/pxar/tree/9eb0f3844e9c7f98d7701629c5af339632c5d84a to pick up the latest update, which allows running the fullTest() method of each test from the command line and consequently also from elComandante. |

|

41

|

Thu Nov 28 18:58:30 2019 |

Dinko Ferencek | Software | Updated Fulltest configuration |

The Fulltest definition used on the lab PC and stored in /home/l_tester/L1_SW/elComandante/config/tests/Fulltest had the following content

pretest

FullTest

bb2

exit

where FullTest is defined in https://github.com/psi46/pxar/blob/9eb0f3844e9c7f98d7701629c5af339632c5d84a/tests/PixTestFullTest.cc#L82-L121. For added flexibility, we would like to have individual tests specified in the definition file. However, by default this implies calling the doTest() method for each of the tests while FullTest actually calls the fullTest() method. For Scurves and GainPedestal the two methods are distinct. After the latest updates from Urs to the pXar code, we are now able to change the definition to

pretest

readback

alive

bb

bb2

scurves:fulltest

trim

ph

gainpedestal:fulltest

exit

Note that readback was placed first because in the past it was observed that pXar had a tendency to crash when this test was run last. |
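One reading of the `name:method` syntax is: run the named test, but call the given method instead of the default `doTest()`. The hypothetical parser below illustrates that reading of the new definition. How elComandante actually interprets these lines is an assumption based on the description above, not on its source code.

```python
from typing import List, Tuple

DEFINITION = """pretest
readback
alive
bb
bb2
scurves:fulltest
trim
ph
gainpedestal:fulltest
exit"""


def parse_definition(text: str) -> List[Tuple[str, str]]:
    """Return (test, method) pairs; 'exit' ends the sequence and a missing
    method suffix means the default doTest()."""
    steps = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line == "exit":
            break
        name, _, method = line.partition(":")
        steps.append((name, method or "doTest"))
    return steps


if __name__ == "__main__":
    for name, method in parse_definition(DEFINITION):
        print(f"{name:>14} -> {method}")
```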

|

45

|

Mon Jan 20 13:47:33 2020 |

Dinko Ferencek | Software | pXar code updated |

pXar code updated to the current HEAD of the master branch https://github.com/psi46/pxar/tree/f6e42c17c0bb3a44bdb3fa13d8f8afb6cae62a81 to pick up the latest PH optimization updates. |

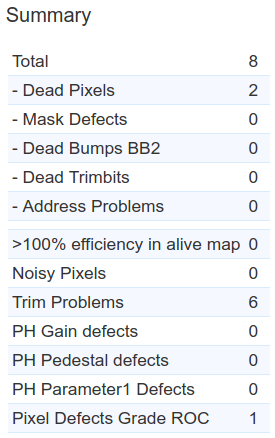

|

49

|

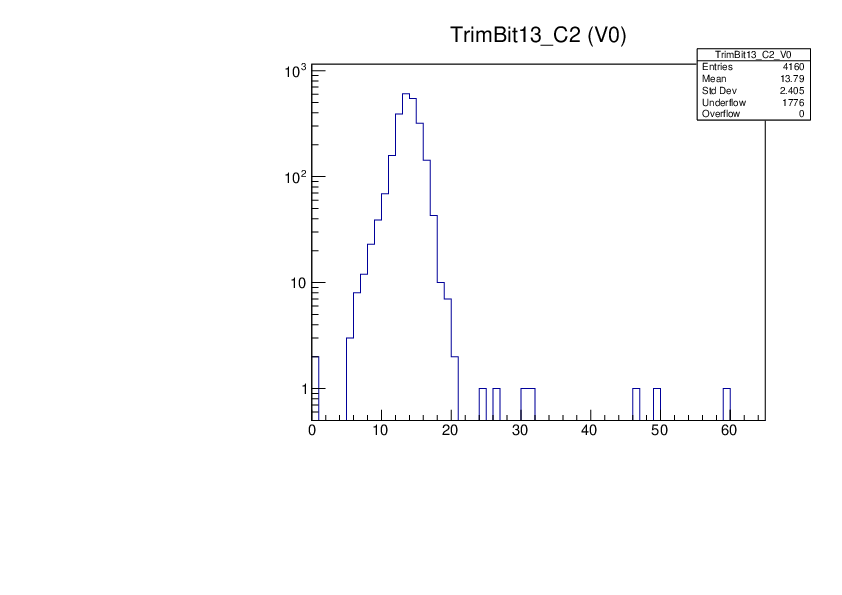

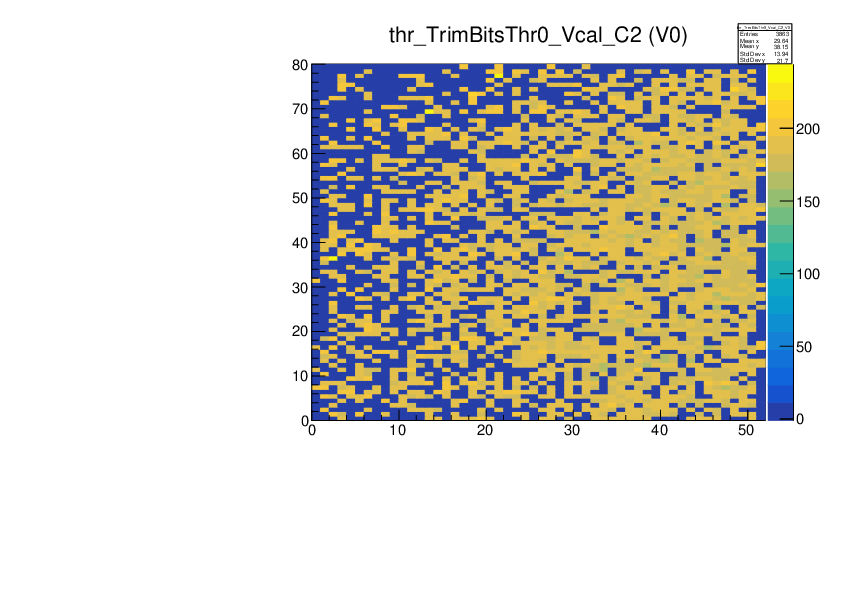

Wed Jan 22 11:39:48 2020 |

Dinko Ferencek | Software | BB2 configuration |

| When attempting to run the FullQualification this morning, pXar crashed while running the BB2 test. After some investigation, we realized the source of the problem was the BB2 configuration missing from testParameters.dat. The configuration is available in moreTestParameters.dat but by default the mkConfig script does not put it in testParameters.dat. Since we run BB2 in the FullQualification, the configuration needs to be copied by hand every time the configuration files are regenerated. |
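Copying the BB2 block by hand after every mkConfig run is easy to forget. The sketch below automates it, assuming both files group parameters into blocks that start with a `-- <TestName>` header line. That layout of the pXar parameter files is an assumption and should be checked first. The helper names are hypothetical.

```python
from pathlib import Path


def extract_section(text: str, name: str) -> str:
    """Return the block from the '-- <name>' header up to (not including)
    the next '-- ' header, or '' if the section is absent."""
    block, inside = [], False
    for line in text.splitlines(keepends=True):
        if line.startswith("-- "):
            if inside:
                break  # the next section starts, so the wanted block is complete
            inside = line[3:].strip().lower() == name.lower()
        if inside:
            block.append(line)
    return "".join(block)


def copy_section(src: Path, dst: Path, name: str = "BB2") -> bool:
    """Append section `name` from src to dst if dst does not have it yet.
    Returns True if dst was modified."""
    dst_text = dst.read_text()
    if extract_section(dst_text, name):
        return False
    block = extract_section(src.read_text(), name)
    if not block:
        raise ValueError(f"section {name!r} not found in {src}")
    if dst_text and not dst_text.endswith("\n"):
        dst_text += "\n"
    dst.write_text(dst_text + block)
    return True
```

After regenerating a configuration folder, one would call `copy_section(folder / "moreTestParameters.dat", folder / "testParameters.dat")`. Calling it again is harmless, because an existing BB2 block is left untouched.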

|

50

|

Wed Jan 22 11:50:20 2020 |

Dinko Ferencek | Software | Module configuration files for interactive tests |

There are two module configuration folders set up for elComandante

/home/l_tester/L1_SW/pxar/data/tbm10d_procv3/

/home/l_tester/L1_SW/pxar/data/tbm10d_procv4/

These folders can also be used for interactive tests with pXar. However, in that case they get filled with pxar.log and pxar.root files, and, for some reason, elComandante also copies all pxar.log files when copying the module configuration files. To prevent unnecessary duplication of junk files, two new module configuration folders were set up for interactive tests with pXar:

/home/l_tester/L1_SW/pxar/data/tbm10d_procv3_pxar/

/home/l_tester/L1_SW/pxar/data/tbm10d_procv4_pxar/

The configurations are identical to those used by elComandante. However, one needs to remember to keep the test folders in sync with the elComandante folders whenever the latter are updated. This can be done as follows:

rsync -avPSh /home/l_tester/L1_SW/pxar/data/tbm10d_procv3/ /home/l_tester/L1_SW/pxar/data/tbm10d_procv3_pxar/

rsync -avPSh /home/l_tester/L1_SW/pxar/data/tbm10d_procv4/ /home/l_tester/L1_SW/pxar/data/tbm10d_procv4_pxar/

To check if there are any extraneous pXar files present in the configuration folders, run

find /home/l_tester/L1_SW/pxar/data/tbm10d_procv?/ -type f \( -name 'pxar*.log' -o -name 'pxar*.root' \)

If any, they can be deleted by running

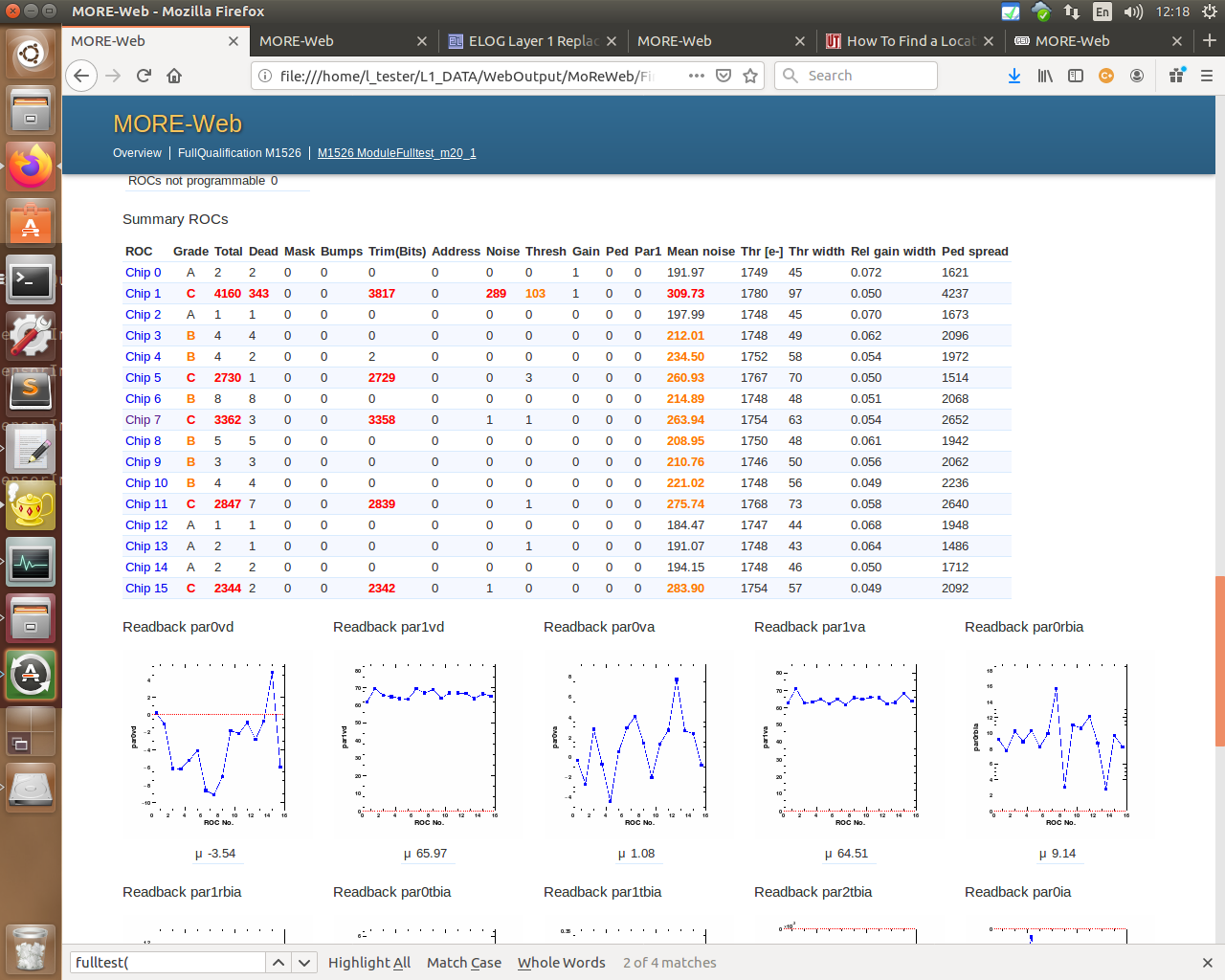

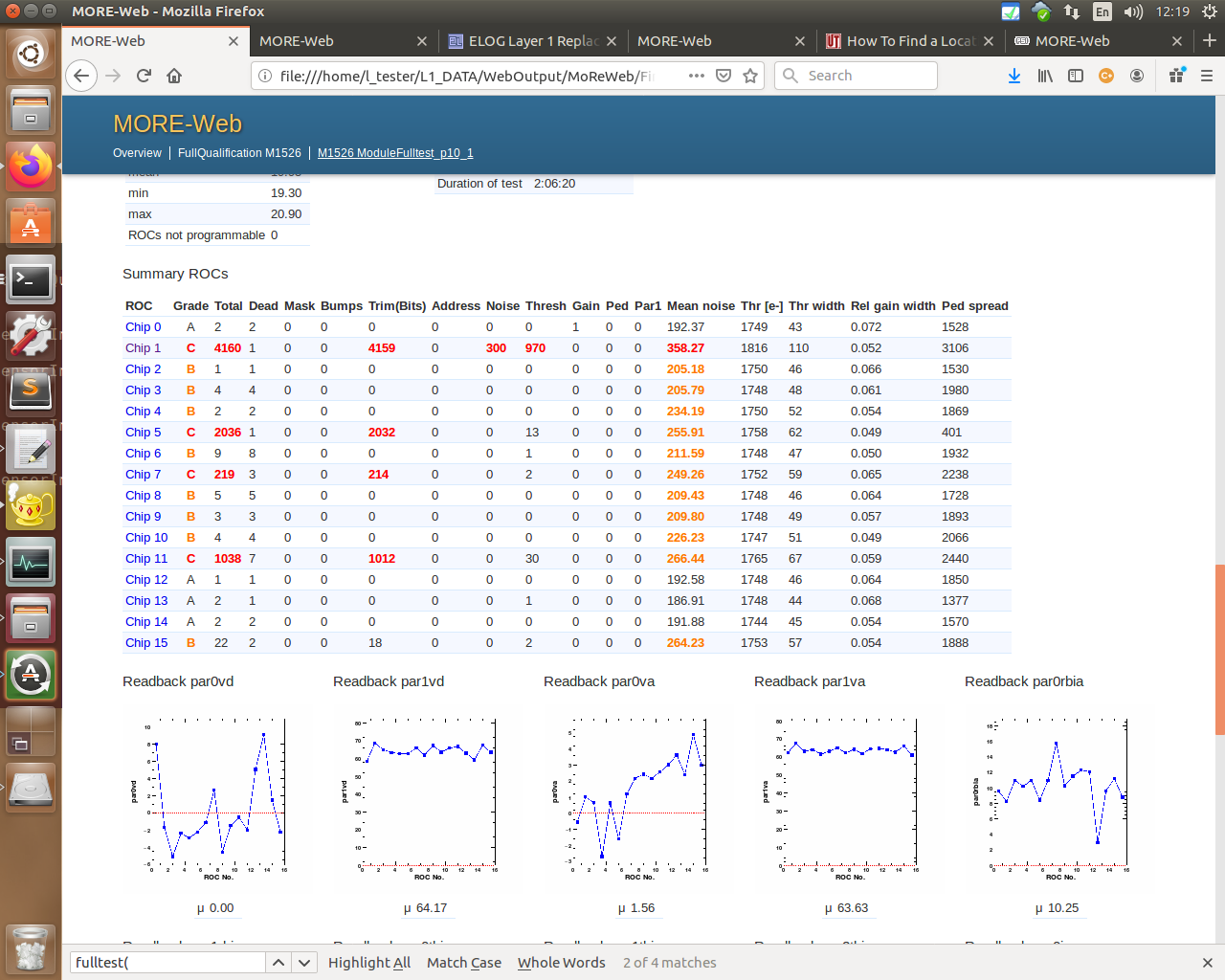

find /home/l_tester/L1_SW/pxar/data/tbm10d_procv?/ -type f -name \( -name 'pxar*.log' -o -name 'pxar*.root' \) -delete |
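The same check and cleanup can be done from Python, for example inside another maintenance script. This is a minimal sketch with hypothetical helper names. It mirrors the `find` commands above and defaults to a dry run, so nothing is deleted unless `dry_run=False` is passed.

```python
from pathlib import Path
from typing import List

DATA_DIR = Path("/home/l_tester/L1_SW/pxar/data")
PATTERNS = ("pxar*.log", "pxar*.root")


def find_pxar_junk(data_dir: Path = DATA_DIR) -> List[Path]:
    """pxar*.log and pxar*.root files anywhere below the tbm10d_procv? folders."""
    if not data_dir.is_dir():
        return []
    hits = set()
    for pattern in PATTERNS:
        # '?' matches exactly one character, as in the shell glob above
        for path in data_dir.glob(f"tbm10d_procv?/**/{pattern}"):
            if path.is_file():
                hits.add(path)
    return sorted(hits)


def delete_pxar_junk(data_dir: Path = DATA_DIR, dry_run: bool = True) -> List[Path]:
    """Delete the files found above; with dry_run=True only report them."""
    files = find_pxar_junk(data_dir)
    if not dry_run:
        for path in files:
            path.unlink()
    return files


if __name__ == "__main__":
    for path in delete_pxar_junk(dry_run=True):
        print(path)
```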

|

52

|

Thu Jan 23 15:15:45 2020 |

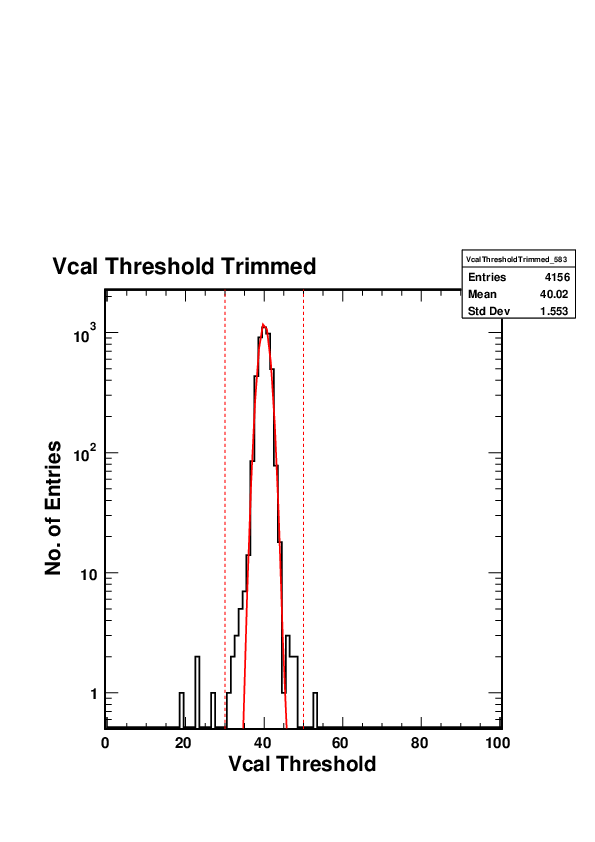

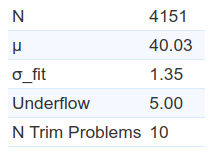

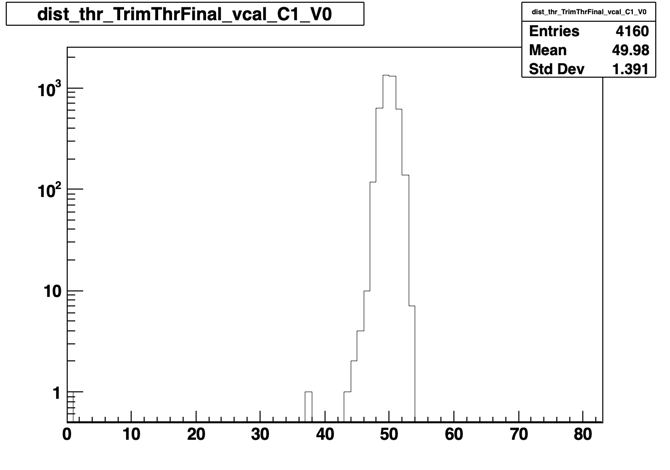

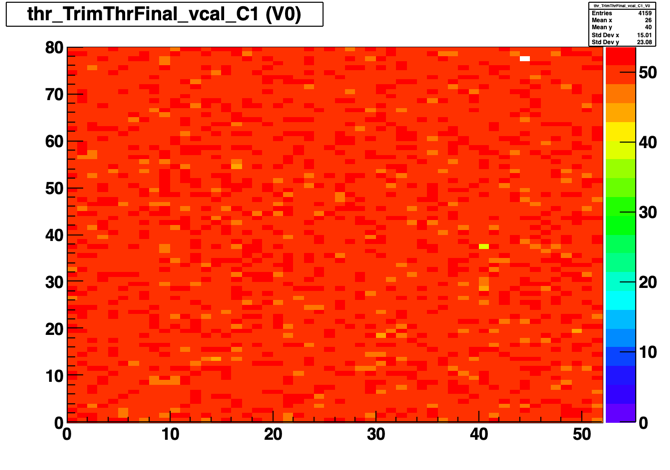

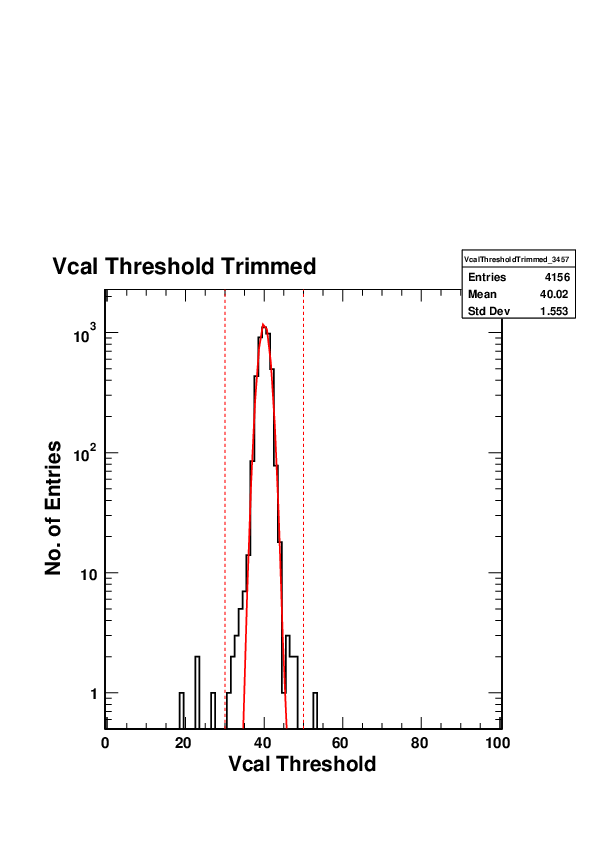

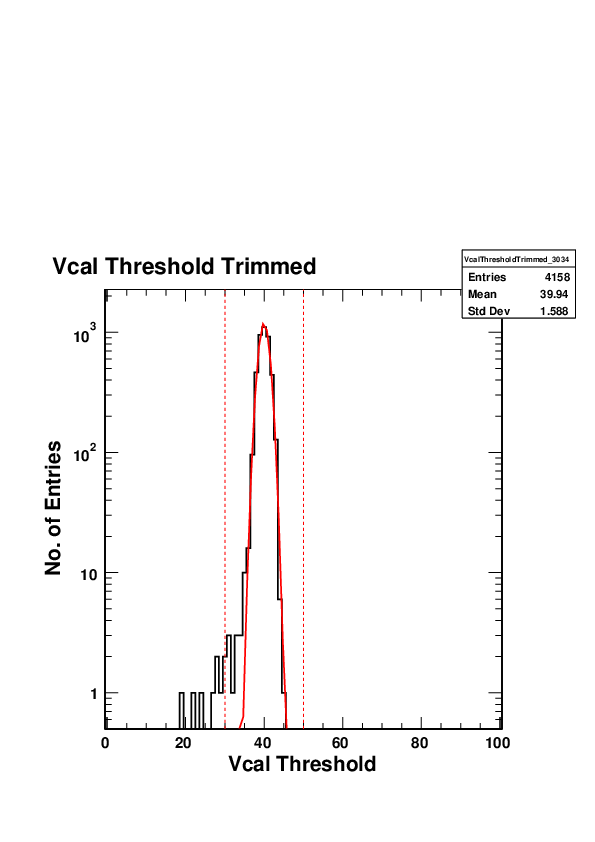

Dinko Ferencek | Software | Trimming Vcal value changed from 35 to 40 |

Trimming Vcal value changed from 35 to 40 in the testParameters.dat files stored in

/home/l_tester/L1_SW/pxar/data/tbm10d_procv3/

/home/l_tester/L1_SW/pxar/data/tbm10d_procv3_test/

/home/l_tester/L1_SW/pxar/data/tbm10d_procv4/

/home/l_tester/L1_SW/pxar/data/tbm10d_procv4_test/

This is the value we will use for the FullQualification. |
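The sketch below applies such a change to all four files at once. It assumes the parameters are grouped under `-- <TestName>` headers with one `name value` pair per line. The section name `Trim` and the key `vcal` are also assumptions to verify against the actual testParameters.dat, and the helper names are hypothetical.

```python
from pathlib import Path

FOLDERS = [
    "/home/l_tester/L1_SW/pxar/data/tbm10d_procv3/",
    "/home/l_tester/L1_SW/pxar/data/tbm10d_procv3_test/",
    "/home/l_tester/L1_SW/pxar/data/tbm10d_procv4/",
    "/home/l_tester/L1_SW/pxar/data/tbm10d_procv4_test/",
]


def set_parameter(text: str, section: str, key: str, value) -> str:
    """Set `key value` inside the `-- <section>` block, leaving other blocks untouched."""
    out, inside = [], False
    for line in text.splitlines(keepends=True):
        if line.startswith("-- "):
            inside = line[3:].strip().lower() == section.lower()
        elif inside:
            fields = line.split()
            if len(fields) == 2 and fields[0].lower() == key.lower():
                indent = line[: len(line) - len(line.lstrip())]
                newline = "\n" if line.endswith("\n") else ""
                line = f"{indent}{fields[0]} {value}{newline}"
        out.append(line)
    return "".join(out)


def set_trim_vcal(value: int = 40) -> None:
    """Rewrite the trimming Vcal in every listed testParameters.dat."""
    for folder in FOLDERS:
        path = Path(folder) / "testParameters.dat"
        path.write_text(set_parameter(path.read_text(), "Trim", "vcal", value))
```

Restricting the change to one section matters because other tests may also define a `vcal` parameter that must stay as it is.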

|

53

|

Fri Jan 24 18:12:00 2020 |

Dinko Ferencek | Software | MoReWeb code updates |

MoReWeb code updated to include the ROC wafer info in the XrayCalibration and XRayHRQualification pages:

https://gitlab.cern.ch/CMS-IRB/MoReWeb/commit/4de8ea39050600367ae0b8e959fcc00f29be45d8

In addition, two BB2 plots with wrong axis labels were fixed:

https://gitlab.cern.ch/CMS-IRB/MoReWeb/commit/3cfa18fc3aab1a29859ba9f0f81aa0ed4c59d5c8

https://gitlab.cern.ch/CMS-IRB/MoReWeb/commit/bb6627e6ab3f4d08eb54970a7e3d3b751983e15a |

|

108

|

Mon Mar 16 10:05:23 2020 |

Matej Roguljic | Software | PhQualification change |

| Urs made a change in pXar to the PhOptimization algorithm. One of the changes is in testParameters.dat, where vcalhigh is set to 100 instead of 255. This was implemented on the PC used to run full qualification. A separate elComandante procedure, "PhQualification.ini", was created, which runs pretest, pixelalive, trimming, ph and gainpedestal. This procedure will need to be run on all modules qualified before this change was made, and its results will later be merged with the previous full qualification results. |

|

112

|

Wed Mar 18 15:16:47 2020 |

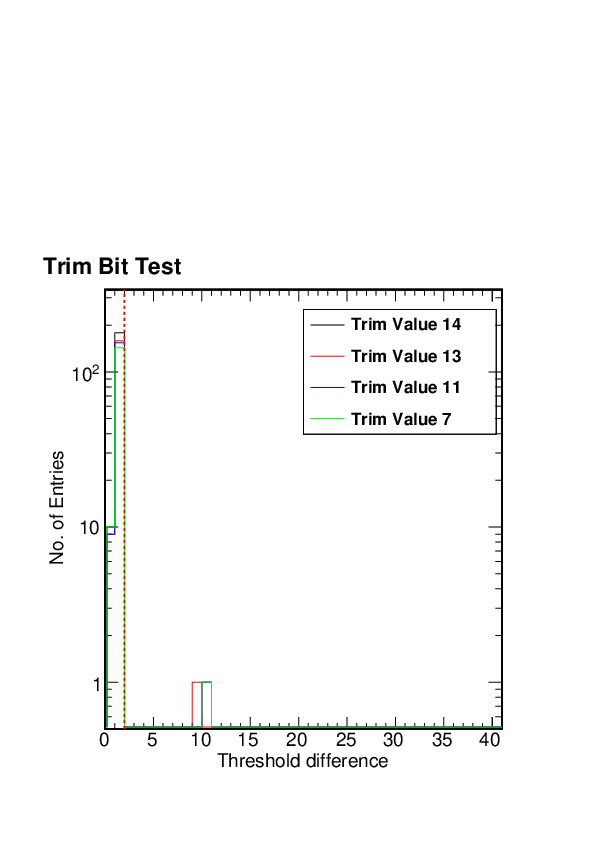

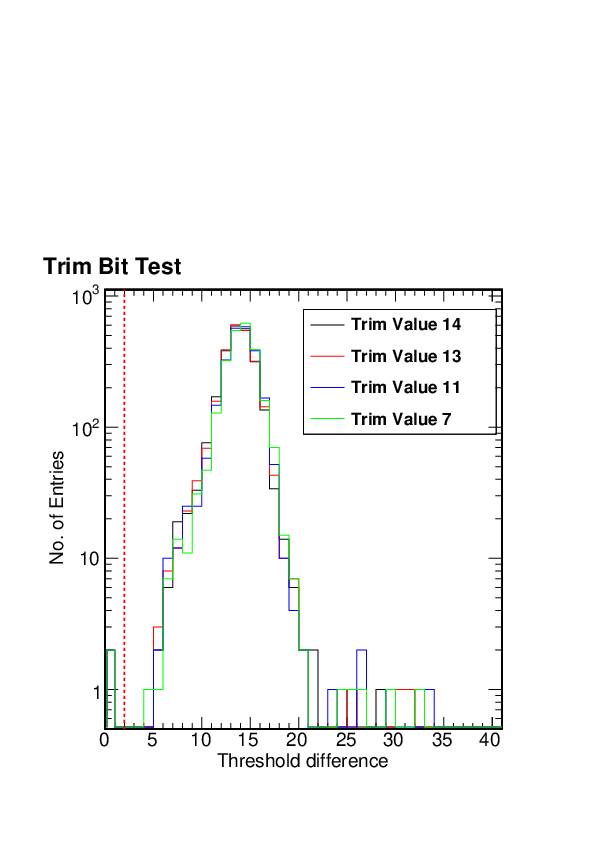

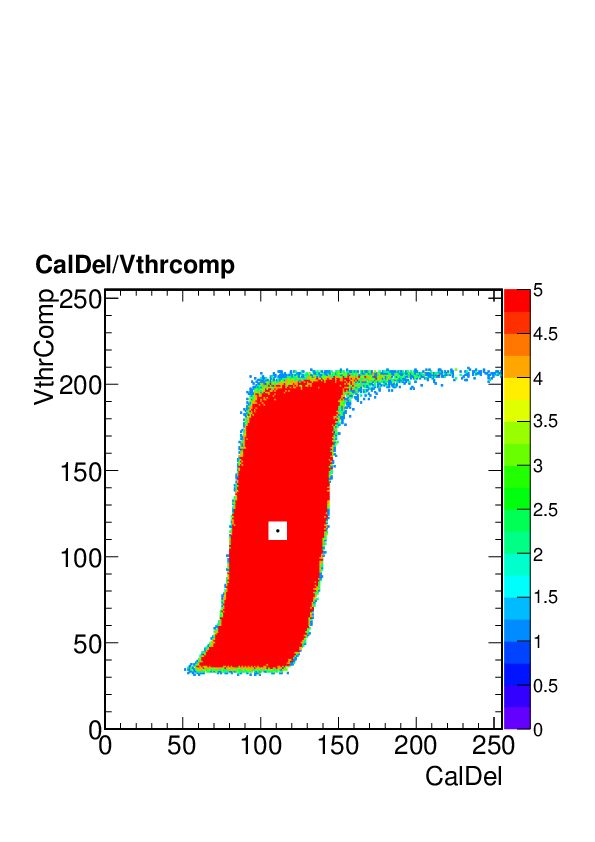

Andrey Starodumov | Software | Change in trimming algorithm |

Urs modified the algorithm yesterday and tested it; we are using it from today on. The main change is that the threshold for trimming is no longer fixed at VthrComp=79 but is calculated as the bottom of the tornado + 20. CalDel is also optimised. This allows us to avoid failures in the trim bit tests due to a too high threshold. For example, if the bottom of the tornado is close to 70, a fraction of the pixels in a chip could have a threshold above 70 and hence fail the test.
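The new rule is a one-line change from the old one. The sketch below assumes that VthrComp is an 8-bit DAC (0-255) and that the bottom of the tornado has already been extracted from the scan. The function name is hypothetical.

```python
OLD_FIXED_VTHRCOMP = 79  # fixed trimming threshold used before this change
TORNADO_OFFSET = 20      # offset above the tornado bottom used from now on
DAC_MAX = 255            # assumption: VthrComp is an 8-bit DAC


def trimming_vthrcomp(tornado_bottom: int) -> int:
    """VthrComp used for trimming: bottom of the tornado + 20, kept within 0..DAC_MAX."""
    return max(0, min(DAC_MAX, tornado_bottom + TORNADO_OFFSET))


if __name__ == "__main__":
    for bottom in (55, 70):
        print(f"tornado bottom {bottom}: new {trimming_vthrcomp(bottom)}, "
              f"old {OLD_FIXED_VTHRCOMP}")
```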

|

|

198

|

Wed Apr 8 15:02:37 2020 |

Andrey Starodumov | Software | Change of CtrlReg for RT |

So far, the tbm10d_procv3 parameters were used for RT in the blue box.

This is wrong, since CtrlReg=9 is better at -20 C, while CtrlReg=17 is better at +10 C or higher.

From now on, tbm10d_procv4 will be used for RT. |
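The choice of configuration folder can be written down as a small lookup. The entry only states two regimes: -20 C (CtrlReg=9, folder tbm10d_procv3) and +10 C or higher (CtrlReg=17, folder tbm10d_procv4). The cutoff between them in the sketch below (0 C) is therefore a hypothetical choice, as are the function names.

```python
# CtrlReg value -> pXar configuration folder, as described above
FOLDER_FOR_CTRLREG = {9: "tbm10d_procv3", 17: "tbm10d_procv4"}

# Hypothetical boundary: only -20 C (CtrlReg=9) and +10 C or higher
# (CtrlReg=17) are covered by the entry; intermediate values are a guess
COLD_LIMIT_C = 0.0


def ctrlreg_for(temperature_c: float) -> int:
    """CtrlReg value to use at the given test temperature."""
    return 9 if temperature_c < COLD_LIMIT_C else 17


def config_folder(temperature_c: float) -> str:
    """Configuration folder matching the CtrlReg for this temperature."""
    return FOLDER_FOR_CTRLREG[ctrlreg_for(temperature_c)]
```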

|

243

|

Thu Apr 30 16:47:00 2020 |

Matej Roguljic | Software | MoReWeb empty DAC plots |

Some of the DAC parameter plots on the total production overview page were empty. All the empty plots had the number "35" in their names (e.g. DAC distribution m20_1 vana 35). The problem was traced back to the trimming configuration: MoReWeb expected us to trim to Vcal 35, while we decided to trim to Vcal 50. I "grepped" for the places where this was hardcoded and changed 35->50.

The places where I made changes:

- Analyse/AbstractClasses/TestResultEnvironment.py

'trimThr':35

- Analyse/Configuration/GradingParameters.cfg.default

trimThr = 35

- Analyse/OverviewClasses/CMSPixel/ProductionOverview/ProductionOverviewPage/ProductionOverviewPage.py

TrimThresholds = ['', '35']

- Analyse/OverviewClasses/CMSPixel/ProductionOverview/ProductionOverviewPage/ProductionOverviewPage.py

self.SubPages.append({"InitialAttributes" : {"Anchor": "DACDSpread35", "Title": "DAC parameter spread per module - 35"}, "Key": "Section","Module": "Section"})

It's interesting to note that someone had already made the change in "Analyse/Configuration/GradingParameters.cfg" |
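The search for hard-coded values can be repeated with a short script, which would help if the trimming target changes again. The sketch below lists every line containing the standalone number 35 in the `.py`, `.cfg` and `.cfg.default` files under a given directory, such as the MoReWeb `Analyse` folder. The file patterns and the helper name are assumptions, and values such as 0.35 would still show up as false positives.

```python
import re
from pathlib import Path
from typing import Iterator, Tuple

# "35" not embedded in a longer number: matches 'trimThr':35 and DACDSpread35,
# but not 135 or 350
STANDALONE_35 = re.compile(r"(?<!\d)35(?!\d)")
PATTERNS = ("*.py", "*.cfg", "*.cfg.default")


def find_hardcoded(root: Path, value_re=STANDALONE_35) -> Iterator[Tuple[Path, int, str]]:
    """Yield (file, line number, stripped line) for every match below root."""
    if not root.is_dir():
        return
    for pattern in PATTERNS:
        for path in sorted(root.rglob(pattern)):
            if not path.is_file():
                continue
            text = path.read_text(errors="replace")
            for lineno, line in enumerate(text.splitlines(), start=1):
                if value_re.search(line):
                    yield path, lineno, line.strip()


if __name__ == "__main__":
    for path, lineno, line in find_hardcoded(Path("Analyse")):
        print(f"{path}:{lineno}: {line}")
```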

|

244

|

Thu Apr 30 17:24:57 2020 |

Dinko Ferencek | Software | MoReWeb empty DAC plots |


As far as I can remember, the changes in Analyse/AbstractClasses/TestResultEnvironment.py, Analyse/Configuration/GradingParameters.cfg.default and Analyse/Configuration/GradingParameters.cfg were there from before, probably made by Andrey. It is possible that you looked at the files when I was preparing logically separate commits affecting the same files which required temporarily undoing and later reapplying some of the changes to be able to separate the commits. The commits are now on GitLab https://gitlab.cern.ch/CMS-IRB/MoReWeb/-/commits/L1replacement, specifically:

435ffb98: grading parameters related to the trimming threshold updated from 35 to 50 VCal units

1987ff18: updates in the production overview page related to a change in the trimming threshold |

|

245

|

Thu Apr 30 17:33:04 2020 |

Andrey Starodumov | Software | MoReWeb empty DAC plots |


I have changed

1) StandardVcal2ElectronConversionFactor from 50 to 44, since the VCal calibration of PROC600V4 gives 44 e-/VCal;

2) TrimBitDifference from 2 to -2, so that failed trim bit tests, which are an artifact of the trim bit test software, are not taken into account. |

|

253

|

Thu May 7 00:10:15 2020 |

Dinko Ferencek | Software | MoReWeb empty DAC plots |

| Andrey Starodumov wrote: |


I have changed

1) StandardVcal2ElectronConversionFactor from 50 to 44, since the VCal calibration of PROC600V4 gives 44 e-/VCal;

2) TrimBitDifference from 2 to -2, so that failed trim bit tests, which are an artifact of the trim bit test software, are not taken into account. |

1) is committed in 74b1038e.

2) was made on Mar. 24 (for more details, see this elog). It currently remains only in Analyse/Configuration/GradingParameters.cfg and might be committed in the future, depending on what is decided about the use of the Trim Bit Test in module grading:

$ diff Analyse/Configuration/GradingParameters.cfg.default Analyse/Configuration/GradingParameters.cfg

45c45

< TrimBitDifference = 2.

---

> TrimBitDifference = -2.

There were a few other code updates related to a change of the warm test temperature from 17 to 10 C. Those were committed in 3a98fef8. |

|

255

|

Thu May 7 00:56:50 2020 |

Dinko Ferencek | Software | MoReWeb updates related to the BB2 test |

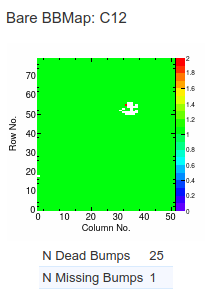

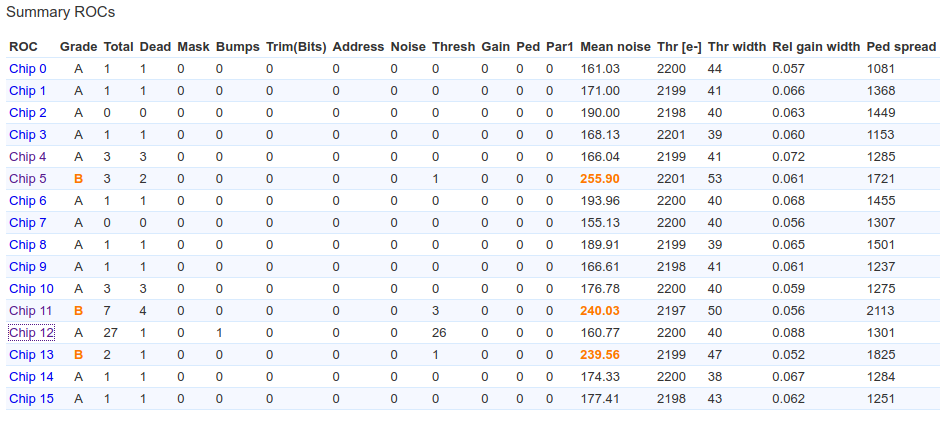

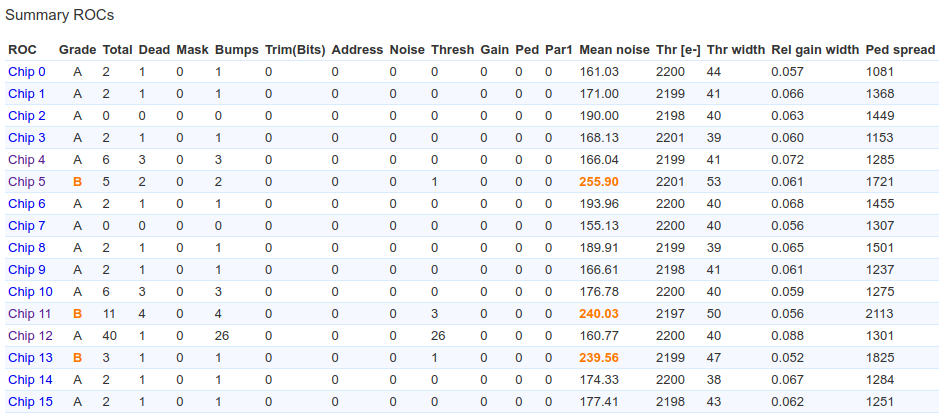

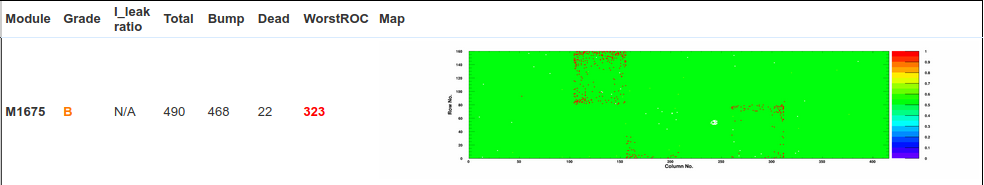

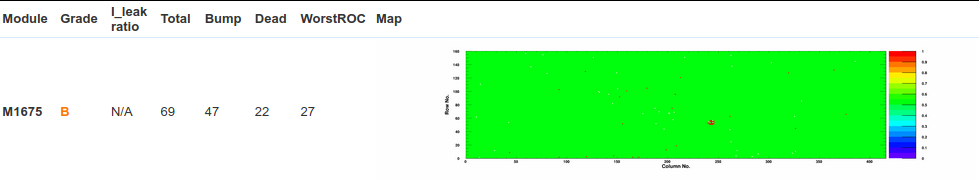

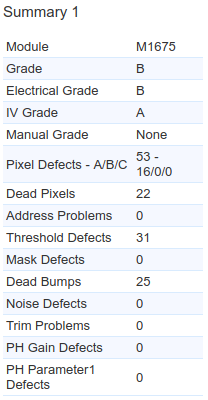

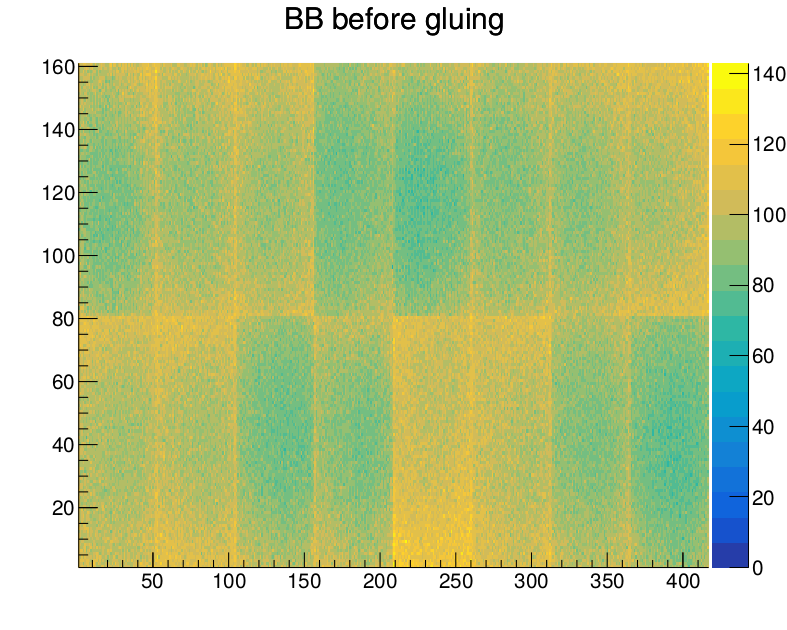

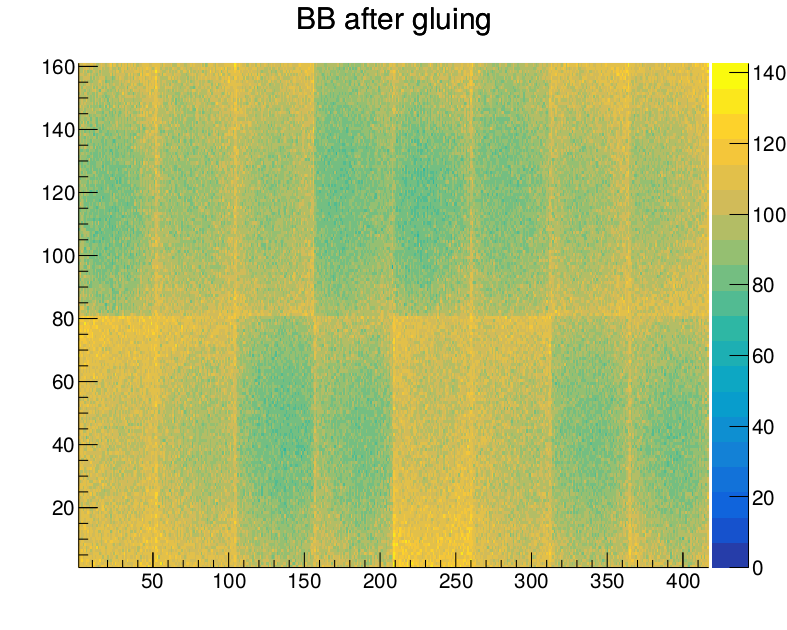

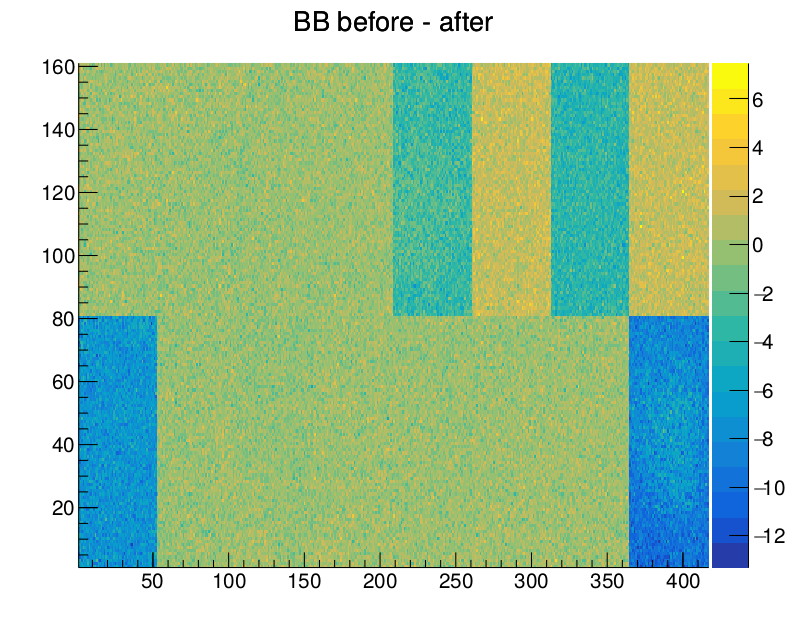

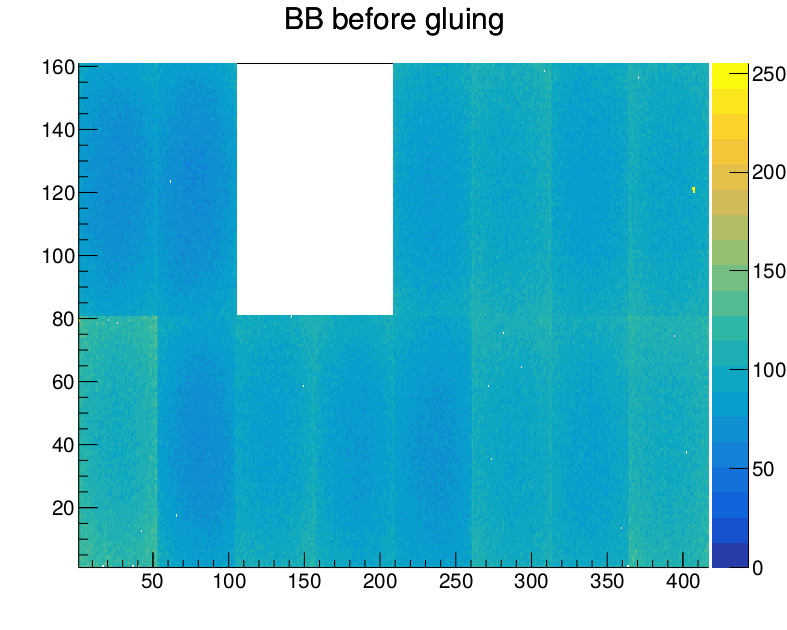

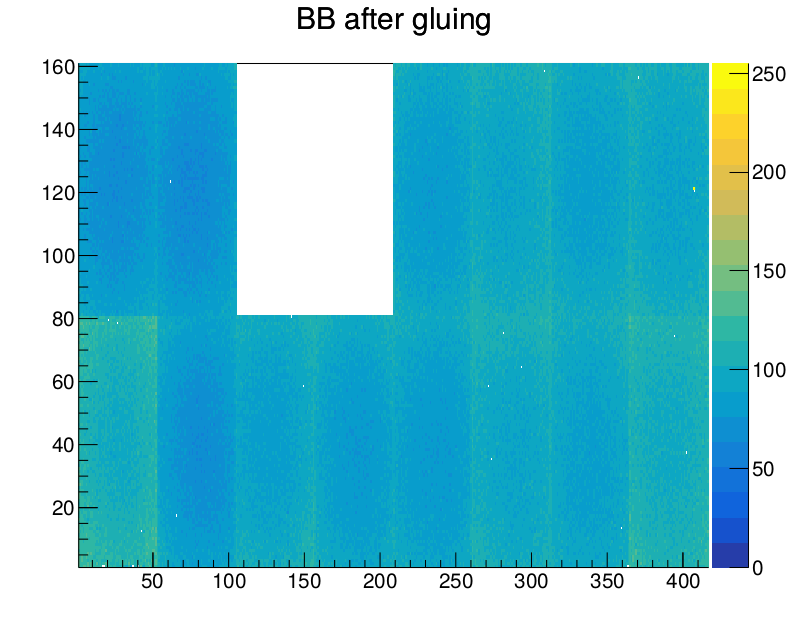

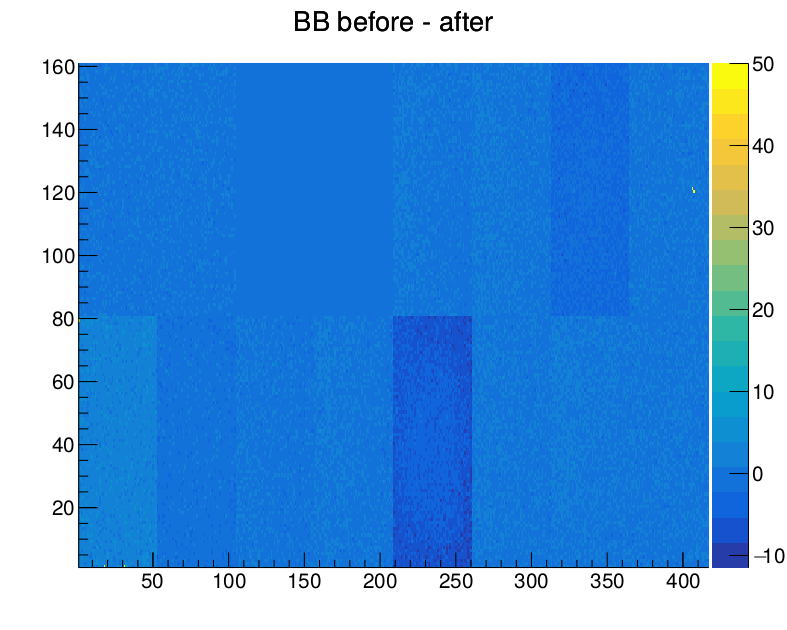

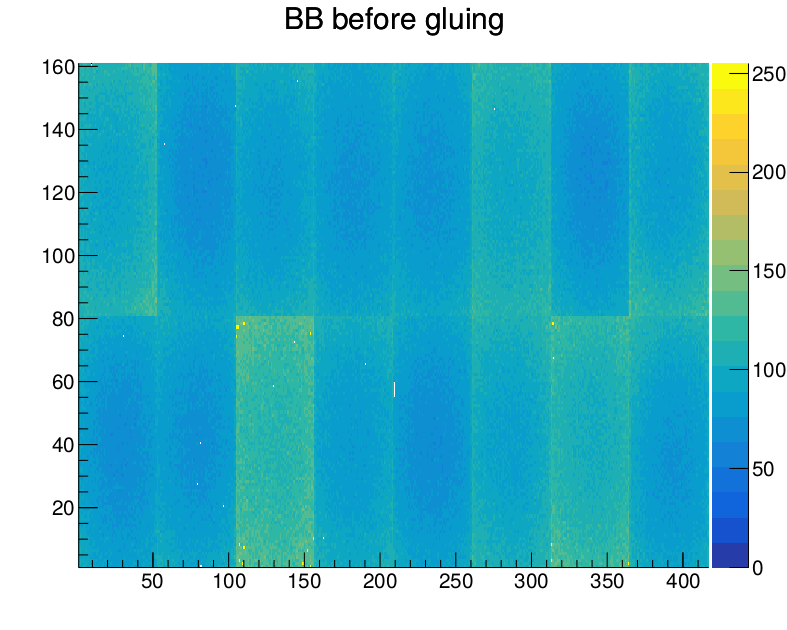

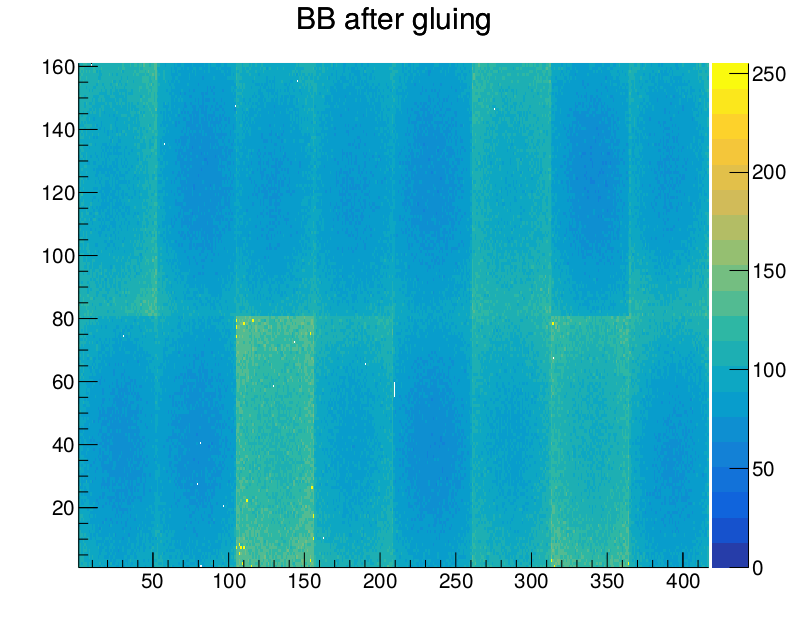

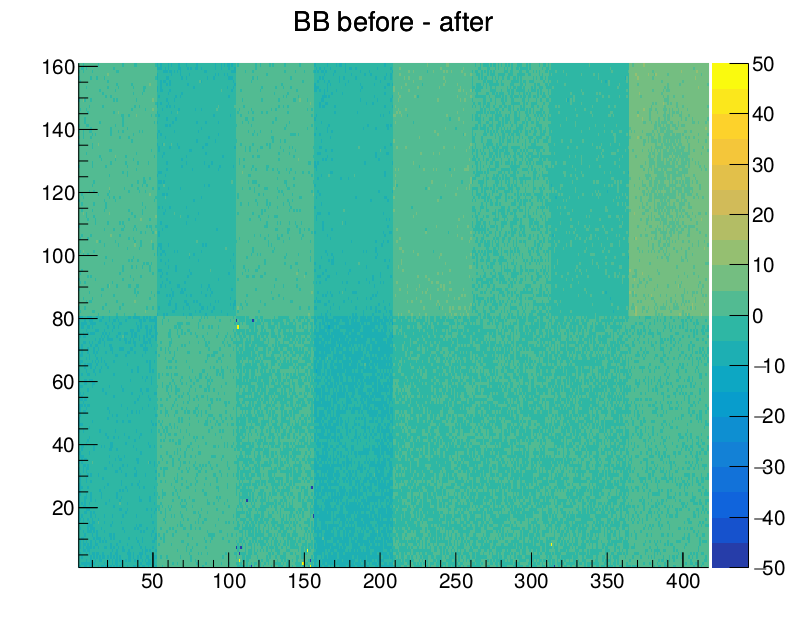

Andrey noticed that the results of the BB2 test (here an example for ROC 12 in M1675) were not properly propagated to the ROC Summary.

This was fixed in d9a1258a. However, looking at the summary for ROC 5 in the same module after the fix, it became apparent that dead pixels were double-counted under the dead bumps, even though they were supposed to be subtracted here. From the following debugging printout

Chip 5 Pixel Defects Grade A

total: 5

dead: 2

inef: 0

mask: 0

addr: 0

bump: 2

trim: 1

tbit: 0

nois: 0

gain: 0

par1: 0

total: set([(5, 4, 69), (5, 3, 68), (5, 37, 30), (5, 38, 31), (5, 4, 6)])

dead: set([(5, 37, 30), (5, 3, 68)])

inef: set([])

mask: set([])

addr: set([])

bump: set([(5, 4, 69), (5, 38, 31)])

trim: set([(5, 4, 6)])

tbit: set([])

nois: set([])

gain: set([])

par1: set([])

it became apparent that the column and row addresses for pixels with bump defects were shifted by one. This was fixed in 415eae00
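The effect of the one-unit shift can be reproduced with the sets from the printout above. The sketch assumes the tuples are (ROC, column, row) and that dead pixels must be removed from the bump category before the union is taken. The helper names are illustrative and this is not the MoReWeb code.

```python
# Sets copied from the debugging printout above, assumed to be (ROC, column, row)
dead = {(5, 37, 30), (5, 3, 68)}
bump_shifted = {(5, 4, 69), (5, 38, 31)}  # bump addresses off by one in column and row
trim = {(5, 4, 6)}


def unshift(pixels):
    """Undo the +1 offset in column and row seen in the bump-defect addresses."""
    return {(roc, col - 1, row - 1) for roc, col, row in pixels}


def defect_summary(dead, bump, trim):
    """Count defects per category without double-counting dead pixels as bumps."""
    bump_only = bump - dead
    total = dead | bump_only | trim
    return {"dead": len(dead), "bump": len(bump_only),
            "trim": len(trim), "total": len(total)}


print(defect_summary(dead, bump_shifted, trim))           # shifted addresses: total 5
print(defect_summary(dead, unshift(bump_shifted), trim))  # corrected addresses: total 3
```

With shifted addresses the subtraction removes nothing, so the two dead pixels appear a second time as bump defects. After the correction the two categories coincide and the total drops from 5 to 3.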

However, there was still a problem with the pixel defects info on the production overview page, which was still using the BB test results

After switching to the BB2 tests results in ac9e8844, the pixel defects info looked better

but it was still not completely in sync with the info presented in FullQualification Summary 1

This is due to double-counting of dead pixels which still needs to be fixed for the Production Overview. |

|

256

|

Thu May 7 01:51:03 2020 |

Dinko Ferencek | Software | Strange bug/feature affecting Pixel Defects info in the Production Overview page |

It was observed that the Pixel Defects info in the Production Overview page was sometimes missing.

It turned out this was happening for those modules for which the MoReWeb analysis was run more than once. The solution is to remove all info for the affected modules from the database by running

python Controller.py -d

then typing in the module name, e.g. M1668, and, when prompted, typing in 'all', pressing ENTER and confirming that you want to delete all entries. After that, run

python Controller.py -m M1668

followed by

python Controller.py -p

The missing info should now be visible. |

|

260

|

Mon May 11 21:32:20 2020 |

Dinko Ferencek | Software | Fixed double-counting of pixel defects in the production overview page |

| As a follow-up to this elog, double-counting of pixel defects in the production overview page was fixed in 3a2c6772. |

|

261

|

Mon May 11 21:37:43 2020 |

Dinko Ferencek | Software | Fixed the BB defects plots in the production overview page |

0407e04c: attempting to fix the BB defects plots in the production overview page (seems mostly related to the 17 to 10 C change)

f2d554c5: it appears that BB2 defect maps were not processed correctly |

|

262

|

Mon May 11 21:41:15 2020 |

Dinko Ferencek | Software | 17 to 10 C changes in the production overview page |

| 0c513ab8: a few more updates on the main production overview page related to the 17 to 10 C change |

|

265

|

Wed May 13 23:16:37 2020 |

Dinko Ferencek | Software | Fixed double-counting of pixel defects in the production overview page |

| Dinko Ferencek wrote: | | As a follow-up to this elog, double-counting of pixel defects in the production overview page was fixed in 3a2c6772. |

A few extra adjustments were made in:

38eaa5d6: also removed double-counting of pixel defects in module maps in the production overview page

51aadbd7: adjusted the trimmed threshold selection to the L1 replacement conditions |

|

270

|

Fri May 22 16:19:01 2020 |

Andrey Starodumov | Software | Change in MoreWeb GradingParameters.cfg |

Xray noise:

grade B moved from 300e to 400e

grade C moved from 400e to 500e |
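A minimal sketch of how the new X-ray noise thresholds could be applied, assuming each value is the lower edge of the corresponding grade (noise up to 400e is grade A, up to 500e grade B, above that grade C) and that a value exactly on a boundary gets the better grade. The function name `xray_noise_grade` is hypothetical; the actual logic lives in MoreWeb and is configured through GradingParameters.cfg.

```python
def xray_noise_grade(noise_e, b_threshold=400.0, c_threshold=500.0):
    """Grade a noise value in electrons (hypothetical helper).

    noise <= b_threshold               -> 'A'
    b_threshold < noise <= c_threshold -> 'B'
    noise > c_threshold                -> 'C'
    """
    if noise_e <= b_threshold:
        return "A"
    if noise_e <= c_threshold:
        return "B"
    return "C"


# The same value graded with the old (300e/400e) and the new (400e/500e) thresholds.
print(xray_noise_grade(350, 300, 400), xray_noise_grade(350))
```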

|

273

|

Mon May 25 17:24:05 2020 |

Andrey Starodumov | Software | Change in MoreWeb ColumnUniformityPerColumn.py |

In the file

~/L1_SW/MoReWeb/Analyse/TestResultClasses/CMSPixel/QualificationGroup/XRayHRQualification/Chips/Chip/ColumnUniformityPerColumn/ColumnUniformityPerColumn.py

the high and low rates at which the double-column uniformity is checked are hard-coded. Only the L2 rates were set there; they are now commented out and the L1 rates have been added:

```python
# Layer2 settings
# HitRateHigh = 150
# HitRateLow = 50

# Layer1 settings
HitRateHigh = 250
HitRateLow = 150
```

|
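Instead of commenting one layer's values in and out, the rates could be selected per layer. This is a hypothetical refactoring sketch (the dictionary `HIT_RATES` and the function `hit_rates` are not MoreWeb names); only the numbers are taken from the entry above.

```python
# Hypothetical per-layer table; values are the ones quoted for ColumnUniformityPerColumn.py.
HIT_RATES = {
    "L1": {"HitRateLow": 150, "HitRateHigh": 250},
    "L2": {"HitRateLow": 50, "HitRateHigh": 150},
}


def hit_rates(layer):
    """Return (HitRateLow, HitRateHigh) for 'L1' or 'L2'."""
    try:
        rates = HIT_RATES[layer]
    except KeyError:
        raise ValueError("unknown layer: %r" % (layer,))
    return rates["HitRateLow"], rates["HitRateHigh"]


HitRateLow, HitRateHigh = hit_rates("L1")
print(HitRateLow, HitRateHigh)
```

Keeping both sets in one table avoids silently running the L1 analysis with L2 rates if the wrong lines are left uncommented.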

|

60

|

Mon Feb 3 15:53:32 2020 |

Andrey Starodumov | Reception test | 5 modules RT: 1545, 1547, 1548, 1549, 1550 |

Feb 03

- RT is done and OK for all modules. All 5 modules graded A. |

|

61

|

Mon Feb 3 17:09:36 2020 |

Andrey Starodumov | Reception test | 2 modules RT failed: 1544, 1546 |

Feb 03

- RT failed

-- 1544:

--- all ROCs are programmable

--- ROC14: no reliable threshold found; ROC15: no threshold at all

-- 1546

--- all ROCs are programmable

--- no threshold found for any ROC |

|

69

|

Thu Feb 6 17:19:12 2020 |

Dinko Ferencek | Reception test | RT for 6 modules: 1551, 1552, 1553, 1554, 1555, 1556 |

Today the reception test was run for 6 modules and looks OK for all modules. The modules were graded as follows:

1551: A

1552: A

1553: B (Electrical grade B)

1554: A

1555: B (IV grade B)

1556: B (IV grade B)

Protective caps were glued to these modules. |

|

71

|

Fri Feb 7 19:40:27 2020 |

Dinko Ferencek | Reception test | RT for 6 modules: 1557, 1558, 1559, 1560, 1561, 1562 |

Reception test is done and all 6 modules were graded A.

Protective caps were glued to 4 modules: 1557, 1558, 1559, 1560 |

|

78

|

Tue Feb 11 10:25:32 2020 |

Dinko Ferencek | Reception test | RT for 6 modules: 1563, 1564, 1565, 1566, 1567, 1568 |

Feb. 10

1563: Grade C, ROCs 8 and 10 not programmable, no obvious problems with wire bonds

1564: Grade B, I > 2 uA (2.17 uA)

Feb. 11

1565: Grade A

1566: Grade A

1567: Grade C, no data from ROCs 8-11, looks like a problem with one TBM1 core

1568: Grade A |

|

82

|

Wed Feb 12 15:40:13 2020 |

Dinko Ferencek | Reception test | RT for 3 modules: 1569, 1570, 1571; 1572 bad |

1569: Grade A

1570: Grade A

1571: Grade B, I > 2 uA (3.09 uA)

1572 from the same batch of 4 assembled modules was not programmable and was not run through the reception test. |

|

83

|

Thu Feb 13 21:44:28 2020 |

Dinko Ferencek | Reception test | RT for 6 modules: 1573, 1574, 1575, 1576, 1577, 1578 |

1573: Grade A

1574: Grade B, I(150)/I(100) > 2 (4.63)

1575: Grade C, problem with one TBM core

1576: Grade A

1577: Grade A

1578: Grade A |

|

86

|

Fri Feb 14 14:40:59 2020 |

Dinko Ferencek | Reception test | RT for 2 modules: 1579, 1580 |

1579: Grade A

1580: Grade A |

|

116

|

Thu Mar 19 17:17:27 2020 |

Andrey Starodumov | Reception test | M1609-M1612 |

Reception test done and caps are glued to modules M1609-M1612.

M1611 graded B due to double column failure on ROC13. Others graded A. |

|

118

|

Fri Mar 20 14:46:31 2020 |

Andrey Starodumov | Reception test | M1615 failed |

M1615 is programmable but "no working phases found"

Visual inspection of wire bonds - OK.

To module doctor! |

|

119

|

Fri Mar 20 14:53:52 2020 |

Andrey Starodumov | Reception test | M1616 failed |

ROC10 of M1616 is not programmable, no readout from ROC10 and ROC11.

Visual inspection of wire bonds - OK

To module doctor! |

|

120

|

Fri Mar 20 17:05:58 2020 |

Andrey Starodumov | Reception test | M1617 failed |

ROC8 of M1617 is programmable but no readout from it.

Silvan noticed that a corner of one ROC of this module is broken; it is exactly ROC8. |

|

123

|

Sun Mar 22 13:57:25 2020 |

Danek Kotlinski | Reception test | M1617 failed |

| Andrey Starodumov wrote: | ROC8 of M1617 is programmable but no readout from it.

Silvan noticed that a corner of one ROC of this module is broken; it is exactly ROC8. |

Interestingly, the phase finding works fine; the width of the valid region is 4, which is quite good. ROC8 indeed does not give any hits, but the token passes through it, so the overall readout works fine. There are no readout errors.

The crack on the corner of this ROC is clearly visible.

I wonder how this module passed the tests in Helsinki?

|

|

124

|

Sun Mar 22 14:02:20 2020 |

Danek Kotlinski | Reception test | M1616 failed |

| Andrey Starodumov wrote: | ROC10 of M1616 is not programmable, no readout from ROC10 and ROC11.

Visual inspection of wire bonds - OK

To module doctor! |

For me ROC10 is programmable.

It looks like the token does not pass through ROC11.

This affects the readout of ROCs 11 & 10.

Findphases fails because of the missing ROC10&11 readout. |

|

125

|

Sun Mar 22 14:05:20 2020 |

Danek Kotlinski | Reception test | M1615 failed |

| Andrey Starodumov wrote: | M1615 is programmable but "no working phases found"

Visual inspection of wire bonds - OK.

To module doctor! |

For me this module is working fine.

I could run phasefinding and obtained a perfect PixelAlive.

I left this module connected in the blue-box in order to run more advanced tests from home. |

|

127

|

Tue Mar 24 15:18:59 2020 |

Andrey Starodumov | Reception test | M1621 failed Reception |

| On M1621 ROC8 is not programmable. |

|

128

|

Tue Mar 24 15:20:18 2020 |

Andrey Starodumov | Reception test | M1623 failed Reception |

M1623: all ROCs are programmable but no readout from ROC0-ROC3.

Visual inspection is OK.

To module doctor. |

|

130

|

Tue Mar 24 16:08:58 2020 |

Andrey Starodumov | Reception test | M1625 failed Reception |

M1625: all ROCs are programmable but no readout from ROC0-ROC3.

The same symptom as for M1623.

Visual inspection is OK.

To module doctor. |

|

133

|

Wed Mar 25 14:11:35 2020 |

Andrey Starodumov | Reception test | RT of M1613 and M1614 on Mar 20 |

The reception test for these modules was done on Mar 20.

Grading A for both modules. |

|

136

|

Wed Mar 25 14:44:37 2020 |

Andrey Starodumov | Reception test | RT of M1627 and M1628 |

| Both modules graded A. |

|

144

|

Thu Mar 26 17:41:52 2020 |

Andrey Starodumov | Reception test | RT of M1629-1632 |

| All modules M1629, M1630, M1631 and M1632 graded A |

|

148

|

Fri Mar 27 14:28:14 2020 |

Andrey Starodumov | Reception test | M1633 failed Reception |

All ROCs are programmable but permanent DESERALISER ERROR, Ch6 and Ch7 event ID mismatch.

Visual inspection is OK

To module doctor |

|

152

|

Fri Mar 27 17:27:09 2020 |

Andrey Starodumov | Reception test | M1635 failed Reception |

All ROCs are programmable but DESER400 trailer error bits: "NO DATA" or "IDLE DATA".

ROC8-11 are affected (no data)

Visual inspection is OK

To module doctor |

|

160

|

Mon Mar 30 17:49:31 2020 |

Andrey Starodumov | Reception test | RT of M1637-1640 |

All modules (M1637, M1638, M1639, M1640) are graded A.

|

|

165

|

Tue Mar 31 17:32:47 2020 |

Andrey Starodumov | Reception test | RT of M1641-M1644 |

| All modules graded A. |

|

166

|

Tue Mar 31 17:33:37 2020 |

Andrey Starodumov | Reception test | RT of M1546 |

This is a module with a broken TBM. Silvan put a new one on top of the broken TBM.

The module is graded A after reception test.

I'm still not sure that the wire bonds of the new TBM are lower than the capacitors. I'll try to glue a cap tomorrow to see whether we could substitute the TBMs on another 6 modules with broken TBMs. |

|

170

|

Wed Apr 1 14:36:37 2020 |

Andrey Starodumov | Reception test | M1646 failed Reception |

M1646 showed Idig=1A, ROC6 is not programmable.

Visual inspection: a scratch on the periphery (between the bonding pads) of ROC6.

To module doctor! |

|

171

|

Wed Apr 1 15:32:43 2020 |

Andrey Starodumov | Reception test | RT of M1546 |

| Andrey Starodumov wrote: | This is a module with a broken TBM. Silvan put a new one on top of the broken TBM.

The module is graded A after reception test.

I'm still not sure that the wire bonds of the new TBM are lower than the capacitors. I'll try to glue a cap tomorrow to see whether we could substitute the TBMs on another 6 modules with broken TBMs. |

Cap has been glued to M1546. No damaged wire-bonds. Vthr-CalDel and PixelAlive are OK.

Module to be (FT) tested tomorrow. |

|

173

|

Thu Apr 2 17:06:19 2020 |

Andrey Starodumov | Reception test | M1652 failed Reception |

ROC1 of M1652 is not programmable.

Put in the "Bad" tray as C module. |

|

177

|

Fri Apr 3 13:55:48 2020 |

Andrey Starodumov | Reception test | M1653 failed Reception |

ROC12-ROC15 are not programmable.

Visual inspection is OK, nothing found.

To module doctor! |

|

179

|

Fri Apr 3 14:18:15 2020 |

Andrey Starodumov | Reception test | RT of M1651 on April 2 |

Due to a damaged module adapter, the first reception test failed and the module was graded C by the MoreWeb analysis.

After the second reception test (with a properly connected cable), the grade is A.

To keep grade A instead of C in the MoreWeb summary table, I removed the directory of the first reception test:

~/L1_DATA/M1651_Reception_2020-04-02_16h09m_1585836596 (the .tar file is still there), ran python Controller.py -d (and removed the row with grade C from GlobalFinalResult) and reran python Controller.py -m M1651 |

|

186

|

Mon Apr 6 14:27:31 2020 |

Andrey Starodumov | Reception test | M1593 |

Silvan has substituted the TBM0 of M1593.

I had to replace a cable that had residues on it and with which the reception test failed completely.

The long cable has been attached.

Reception test grade: A |

|

187

|

Mon Apr 6 14:42:43 2020 |

Andrey Starodumov | Reception test | RT of M1575 failed |

Silvan has substituted the TBM0 of M1575.

Still no hits in ROC0-ROC3: "NO DATA" "IDLE DATA" warnings

The long cable has been attached.

To module doctor! |

|

188

|

Mon Apr 6 14:52:09 2020 |

Andrey Starodumov | Reception test | RT of M1657 failed |

No hits in ROC14 and ROC15.

Here is an error:

"ERROR: <datapipe.cc/CheckEventValidity:L524> Channel 5 Number of ROCs (1) != Token Chain Length (2)"

To module doctor! |

|

191

|

Tue Apr 7 14:26:48 2020 |

Andrey Starodumov | Reception test | RT of M1567 failed |

After the exchange of TBM1, the result is the same:

WAS:

1567: Grade C, no data from ROCs 8-11, looks like a problem with one TBM1 core

NOW:

-during ThrComp-CalDel scan: WARNING: Detected DESER400 trailer error bits: "IDLE DATA"

- result: INFO: CalDel: 135 134 126 121 158 141 119 133 _ 124 _ 107 _ 126 _ 108 133 91 138 120

ROC8-ROC11 no hits! |

|

192

|

Tue Apr 7 15:25:21 2020 |

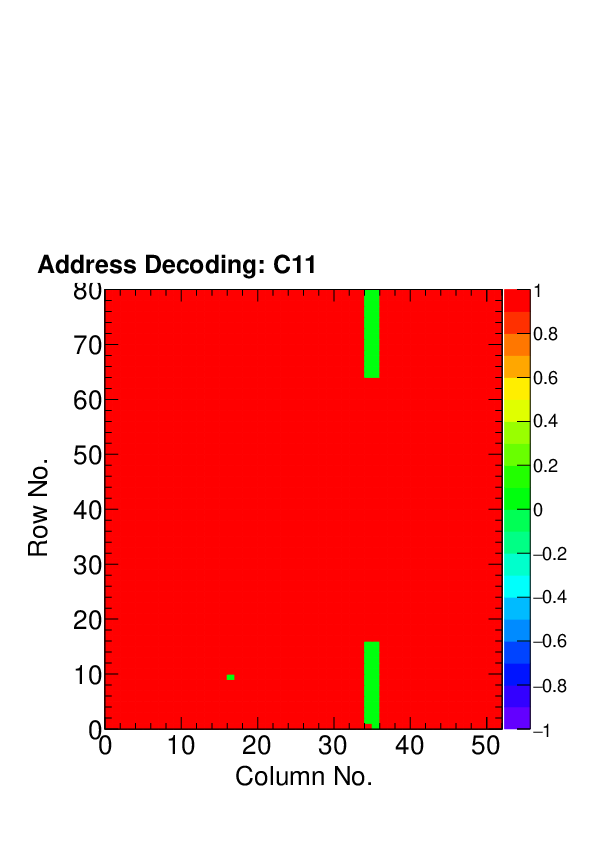

Andrey Starodumov | Reception test | RT of M1662 failed |

Address decoding of M1662 failed in one double column of ROC4.

To be decided what to do with this module.

Currently in C* tray. |

|

195

|

Tue Apr 7 18:02:07 2020 |

Andrey Starodumov | Reception test | RT of 1654 |

After Silvan removed the cap and re-bonded the broken and bent wires, M1654 was tested again.

RT grade is A.

Protection cap to be glued and to be (F)tested. |

|

196

|

Wed Apr 8 13:40:45 2020 |

Andrey Starodumov | Reception test | RT of M1625 failed |

Silvan substituted TBM on this module.

Now ROC0 does not have hits.

Grade C, goes to "Bad" tray. |

|

197

|

Wed Apr 8 13:43:15 2020 |

Andrey Starodumov | Reception test | RT of M1623 failed |

M1623: all ROCs are programmable but no readout from ROC8-ROC11.

Visual inspection is OK.

To module doctor. |

|

199

|

Wed Apr 8 15:20:38 2020 |

Andrey Starodumov | Reception test | RT of M1665 |

With CtrlReg=9 RT grade was B due to 90 noisy pixels in ROC5.

Noisy in this case means that one pixel in a 2x2 cluster in a few columns got 40 hits instead of 10.

With CtrlReg=17 this problem is gone. RT grade is A. |

|

201

|

Wed Apr 8 17:17:13 2020 |

Andrey Starodumov | Reception test | RT of M1662 failed |

| Andrey Starodumov wrote: | Address decoding of M1662 failed in one double column of ROC4.

To be decided what to do with this module.

Currently in C* tray. |

With CtrlReg=17 the module grade is B. One double column still has "noisy" pixels, which get hits from the other 3 pixels of a cluster.

Stay in C* tray. To come back later. |

|

203

|

Thu Apr 9 14:36:10 2020 |

Andrey Starodumov | Reception test | RT of M1669 and M1670 |

| Both modules graded A. |

|

204

|

Thu Apr 9 14:49:07 2020 |

Andrey Starodumov | Reception test | RT of M1650 failed |

Module has been tested on April 2nd.

ROC12 has 471 dead or noisy pixels under the Molex connector.

Grade C. |

|

207

|

Thu Apr 9 17:36:31 2020 |

Andrey Starodumov | Reception test | RT of M1671 failed |

ROC12-ROC15 no hits.

A candidate for TBM0 substitution, hence grade C*.

To module doctor! |

|

209

|

Tue Apr 14 15:49:05 2020 |

Andrey Starodumov | Reception test | RT of M1623, M1657, M1673, M1674 |

M1623: Grade A, should be B due to 71 bump defects in ROC4

M1657: Grade B, due to 51 dead pixels in ROC12

M1673: Grade A, again 31 dead bumps in ROC12

M1674: Grade A, again 39 dead bumps in ROC12 |

|

220

|

Mon Apr 20 15:20:07 2020 |

Andrey Starodumov | Reception test | Change TBMs on M1635, M1653, M1671 |

| Andrey Starodumov wrote: | M1635: no data from ROC8-ROC11 => change TBM1

M1653: ROC12-ROC15 not programmable => change TBM0

M1671: no data from ROC12-ROC15 => change TBM0

Modules to be given to Silvan |

After TBMs have been changed:

M1635: the same no data from ROC8-ROC11

M1653: reception test Grade A

M1671: the same no data from ROC12-ROC15

M1635 and M1671 to module doctor for final decision |

|

294

|

Fri Jul 10 11:24:37 2020 |

Urs Langenegger | Reception test | proc600V3 modules |

This week I tested 12 modules built with proc600v3. The module numbers are M1722 - M1733.

The results are summarized at the usual place:

http://cms.web.psi.ch/L1Replacement/WebOutput/MoReWeb/Overview/Overview.html

Cheers,

--U. |

|

95

|

Tue Mar 3 14:05:13 2020 |

Andrey Starodumov | Re-grading | Regrading C modules: due to Noise |

Urs realized that the VCal-to-electron conversion used by MoreWeb is still 50e/VCal.

However, a recent calibration done by Maren with a few new modules suggests that this conversion is 43.7 electrons per VCal (the number from Danek).

I reran the MoreWeb analysis with 44e/VCal for modules that are graded C for high noise (>300e).
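Because MoreWeb converts VCal to electrons with a single multiplicative factor, any value in electrons scales by 44/50 when the factor changes. The sketch below uses a hypothetical ROC with 320e mean noise at 50e/VCal to show how a value just above the 300e cut moves below it; it is a worked example, not a result from M1591.

```python
OLD_FACTOR = 50.0    # e-/VCal previously used by MoreWeb
NEW_FACTOR = 44.0    # e-/VCal used for the re-analysis
NOISE_CUT_E = 300.0  # grade-C noise cut in electrons quoted in this entry


def rescale(value_e, old=OLD_FACTOR, new=NEW_FACTOR):
    """Convert a value in electrons computed with `old` e-/VCal to `new` e-/VCal."""
    return value_e / old * new


noise_old = 320.0  # hypothetical mean noise in electrons at 50 e-/VCal (6.4 VCal)
noise_new = rescale(noise_old)
print(round(noise_new, 1), noise_new > NOISE_CUT_E)
```

Here 6.4 VCal corresponds to about 281.6e with the new factor, below the 300e cut.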

1) Change 50-->44 in

(1) Analyse/AbstractClasses/TestResultEnvironment.py: 'StandardVcal2ElectronConversionFactor':50,

(2) Analyse/Configuration/GradingParameters.cfg:StandardVcal2ElectronConversionFactor = 50

(3) Analyse/Configuration/GradingParameters.cfg.default:StandardVcal2ElectronConversionFactor = 50

2) remove all SCurve_C*.dat files (otherwise new fit results are not written in these files)

3) run python Controller.py -r -m M1591
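Step 1 can be scripted. The following is a sketch, assuming the factor appears in the three files exactly in the two forms quoted above (`'StandardVcal2ElectronConversionFactor':50,` and `StandardVcal2ElectronConversionFactor = 50`); `set_conversion_factor` and `patch_file` are hypothetical helpers, not part of MoreWeb.

```python
import re
from pathlib import Path

# Matches the Python dict entry  'StandardVcal2ElectronConversionFactor':50,
# and the cfg line               StandardVcal2ElectronConversionFactor = 50
# The trailing \b keeps values such as 500 from matching.
PATTERN = re.compile(r"(StandardVcal2ElectronConversionFactor'?\s*[:=]\s*)50\b")


def set_conversion_factor(text, new_value=44):
    """Return (new_text, number_of_replacements)."""
    return PATTERN.subn(lambda m: m.group(1) + str(new_value), text)


def patch_file(path, new_value=44):
    """Rewrite `path` in place if it contains the old factor; return the count."""
    p = Path(path)
    new_text, n = set_conversion_factor(p.read_text(), new_value)
    if n:
        p.write_text(new_text)
    return n
```

Run from the MoReWeb directory, e.g. `patch_file("Analyse/Configuration/GradingParameters.cfg")`, once for each of the three files; a return value of 0 means the expected pattern was not found and the file should be checked by hand.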

M1591:

- all three fulltests graded C due to mean noise > 300e for ROC0 and/or both ROC0 and ROC1

- after rerunning MoreWeb, all but one grade are B on the individual FT pages, but on the Summary pages the grades are still C???

- second time at -20C: grade C is due to trimming fails in ROC0: 211 pixels have too large threshold after trimming

Trimming to be checked!!! |

|

97

|

Wed Mar 4 16:00:05 2020 |

Andrey Starodumov | Re-grading | Regrading C M1591: due to Noise |

| Andrey Starodumov wrote: | Urs realized that the VCal-to-electron conversion used by MoreWeb is still 50e/VCal.

However, a recent calibration done by Maren with a few new modules suggests that this conversion is 43.7 electrons per VCal (the number from Danek).

I reran the MoreWeb analysis with 44e/VCal for modules that are graded C for high noise (>300e).

1) Change 50-->44 in

(1) Analyse/AbstractClasses/TestResultEnvironment.py: 'StandardVcal2ElectronConversionFactor':50,

(2) Analyse/Configuration/GradingParameters.cfg:StandardVcal2ElectronConversionFactor = 50

(3) Analyse/Configuration/GradingParameters.cfg.default:StandardVcal2ElectronConversionFactor = 50

2) remove all SCurve_C*.dat files (otherwise new fit results are not written in these files)

3) run python Controller.py -r -m M1591

M1591:

- all three fulltests graded C due to mean noise > 300e for ROC0 and/or both ROC0 and ROC1

- after rerunning MoreWeb, all but one grade are B on the individual FT pages, but on the Summary pages the grades are still C???

- second time at -20C: grade C is due to trimming fails in ROC0: 211 pixels have too large threshold after trimming

Trimming to be checked!!! |

To correct the Summary page, one needs to remove the rows with grade C from the previous data analysis (which for some reason stayed in the DB)

using python Controller.py -d and then run python Controller.py -m MXXXX.

A file grade.txt containing "2" (which corresponds to grade B) has been added to the directory ~/L1_DATA/WebOutput/MoReWeb/FinalResults/REV001/R001/M1591_FullQualification_2020-02-28_08h03m_1582873436/QualificationGroup/ModuleFulltest_m20_2; hence this test grade has been changed from C to B. The reason 211 pixels fail trimming (threshold outside the boundary) is a too low trim threshold, VCal=40. From now on, 50 will be used. |

|

103

|

Wed Mar 11 17:59:48 2020 |

Andrey Starodumov | Re-grading | Regrading C M1542: due to Noise |

Following the instructions from the M1591 regrading log:

"To correct the Summary page, one needs to remove the rows with grade C from the previous data analysis (which for some reason stayed in the DB)

using python Controller.py -d and then run python Controller.py -m MXXXX"

The mean noise remains the same, but the threshold is scaled according to the new VCal calibration (44 instead of 50).

To get the corrected mean noise, one needs to refit the SCurves, i.e. run the MoreWeb analysis with the -r flag: "Controller.py -m MXXXX -r"

The module is still graded C due to the relative gain spread. It will be re-tested tomorrow with the new PH optimization/calibration procedure. |

|

132

|

Tue Mar 24 18:11:52 2020 |

Andrey Starodumov | Re-grading | Reanalysed test results |

Test results of several modules have been re-analysed without grading on trimbit failure.

M1614: C->B

M1613: C->A

M1609: C->B

M1618: B->A

M1612: B->B

M1610: B->B

M1608: C->B

M1606: C->C (too many badly trimmed pixels)

M1605: C->B

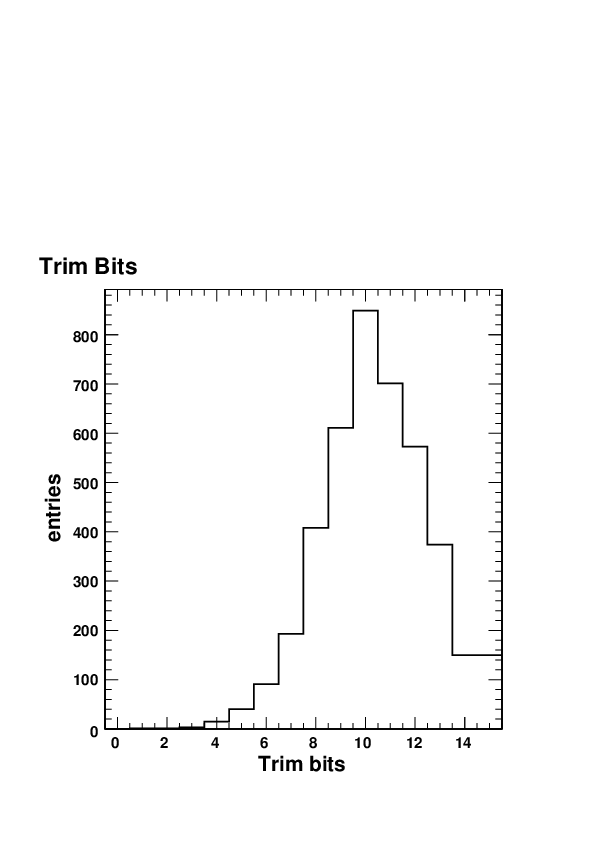

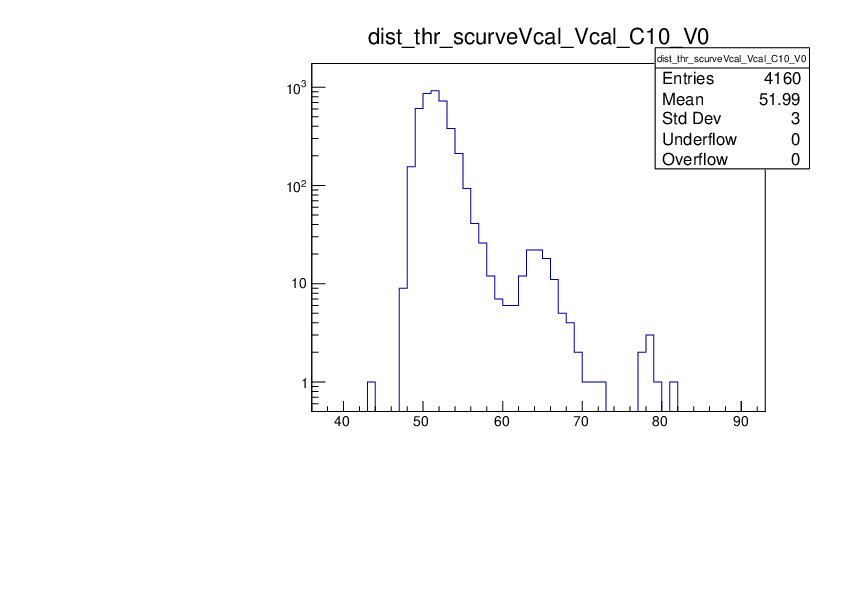

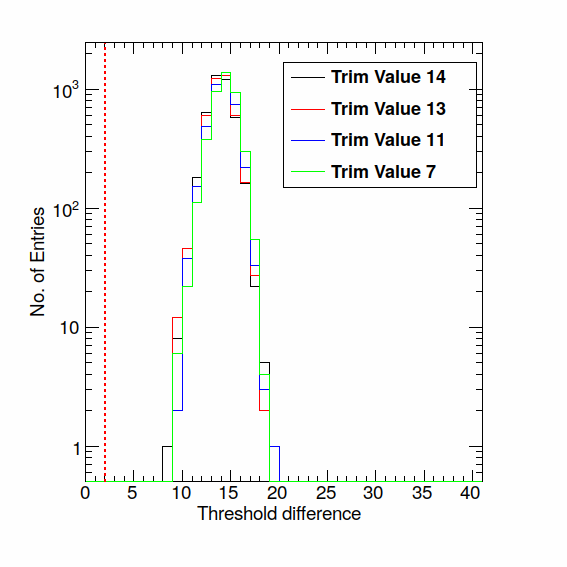

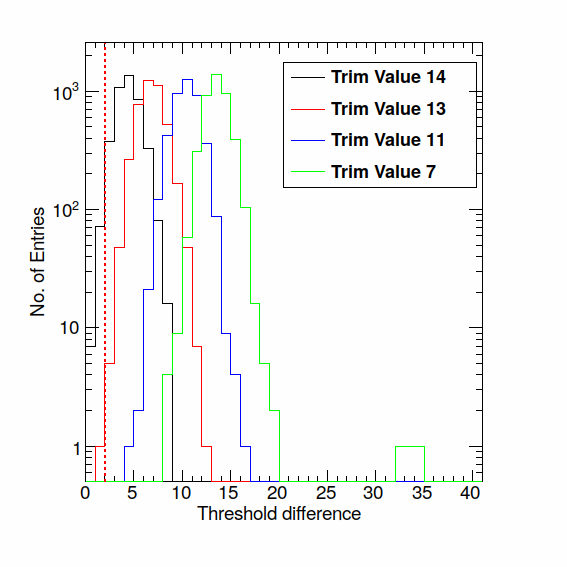

Most of the B grades and the one C are due to badly trimmed pixels. The threshold after trimming usually has 3(!) separate peaks.

We should understand this feature. |

|

139

|

Wed Mar 25 18:31:46 2020 |

Andrey Starodumov | Re-grading | Reanalysed test results |

| Andrey Starodumov wrote: | Test results of several modules have been re-analysed without grading on trimbit failure.

M1614: C->B

M1613: C->A

M1609: C->B

M1618: B->A

M1612: B->B

M1610: B->B

M1608: C->B

M1606: C->C (too many badly trimmed pixels)

M1605: C->B

Most of the B grades and the one C are due to badly trimmed pixels. The threshold after trimming usually has 3(!) separate peaks.

We should understand this feature. |

More modules have been re-analysed:

1604: C->B

1603: C->B

1602: C->B

1601: B->A

1545: C->C (too many pixels on ROC14 are badly trimmed, to be retested tomorrow) |

|

278

|

Fri May 29 13:56:35 2020 |

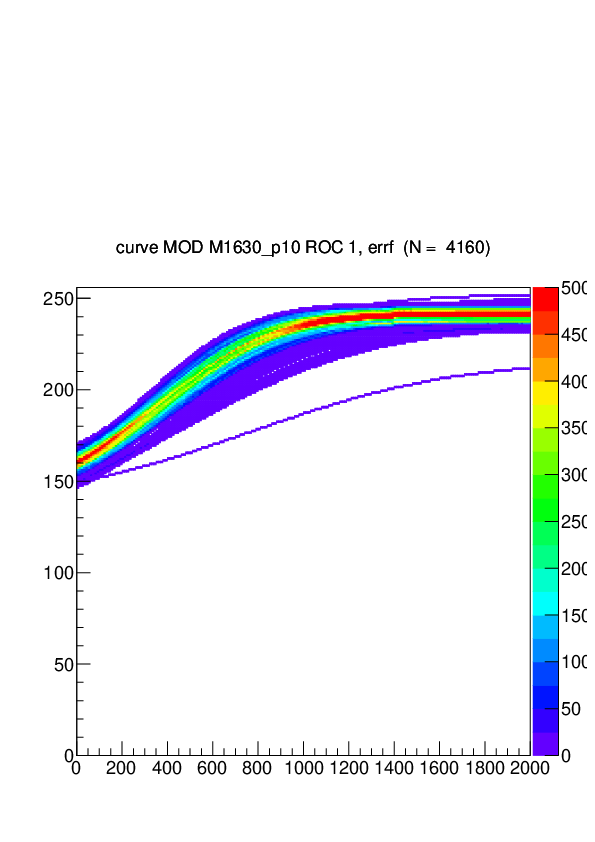

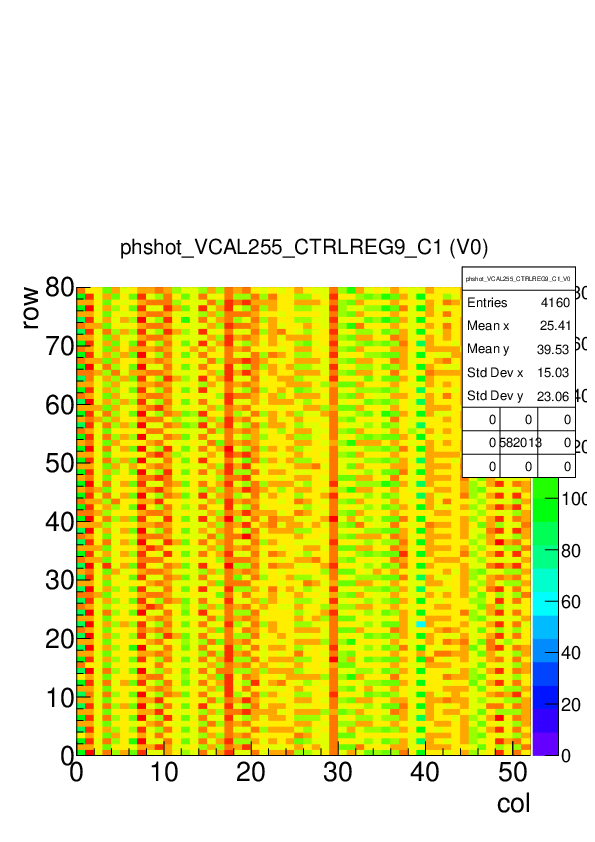

Andrey Starodumov | Re-grading | M1630 |

In ROC1 of M1630 gain calibration failed massively (3883 pixels) at +10C with CtrlReg 9.

A special test of M1630 only at +10C and with CtrlReg 17 showed no problem.

p10_1 from ~/L1_DATA/ExtraTests_ToBeKept/M1630_FullTestp10_2020-04-17_15h54m_1587131688/000_Fulltest_p10

copied to ~/L1_DATA/M1630_FullQualification_2020-04-06_08h35m_1586154934/005_Fulltest_p10

and original files from ~/L1_DATA/M1630_FullQualification_2020-04-06_08h35m_1586154934/005_Fulltest_p10

copied to ~/L1_DATA/ExtraTests_ToBeKept/p10RemovedFrom_M1630_FullQualification_2020-04-06_08h35m_1586154934/005_Fulltest_p10
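The directory swap described above can be sketched with Python's `shutil`. This is a hypothetical helper, not part of any existing tool: it copies the original test directory to the backup location, removes it, and copies the replacement test in its place, refusing to run if the backup already exists so that nothing is overwritten.

```python
import shutil
from pathlib import Path


def swap_test_dir(target, replacement, backup):
    """Keep a copy of `target` in `backup`, then replace `target` with `replacement`.

    All arguments are directory paths; `backup` must not exist yet.
    """
    target, replacement, backup = Path(target), Path(replacement), Path(backup)
    if backup.exists():
        raise FileExistsError(str(backup))
    backup.parent.mkdir(parents=True, exist_ok=True)
    shutil.copytree(target, backup)       # keep the original files
    shutil.rmtree(target)                 # clear the slot in the qualification directory
    shutil.copytree(replacement, target)  # put the extra test in its place
```

For this entry, `target` would be the 005_Fulltest_p10 directory of the FullQualification, `replacement` the extra-test data, and `backup` the p10RemovedFrom_... path.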

This is done to have a clean ranking of modules based on # of defects. |

|

109

|

Mon Mar 16 11:04:41 2020 |

Matej Roguljic | PhQualification | PhQualification 14.-15.3. |

I ran PhQualification over the weekend with changes pulled from git (described here https://elrond.irb.hr/elog/Layer+1+Replacement/108).

14.3. M1554, M1555, M1556, M1557

The first run included the software changes, but I forgot to change vcalhigh in testParameters.dat.

The summary can be seen in ~/L1_SW/pxar/ana/T-20/VcalHigh255 (change T-20 to T+10 to see results for +10 degrees)

Second run was with vcalhigh 100.

The summary can be seen in ~/L1_SW/pxar/ana/T-20/Vcal100

15.3. M1558, M1559, M1560

I only ran 3 modules because DTB2 (WRE1O5) or its adapter was not working. Summary is in ~/L1_SW/pxar/ana/T-20/Vcal100

The full data from the tests are in ~/L1_DATA/MXXXX_PhQualification_... |

|

111

|

Mon Mar 16 15:20:03 2020 |

Matej Roguljic | PhQualification | PhQualification on 16.3. |

| PhQualification was run on modules M1561, M1564, M1565, M1566. |

|

295

|

Wed Jul 29 17:19:43 2020 |

danek kotlinski | PhQualification | Change configuration for PH qualification |

While preparing the new PH optimization, I had to make the following modifications:

1) in elCommandante.ini

change the ModuleType definition from tbm10d to tbm10d_procv4 in order to use the CtrlReg=17 setting

2) in pxar/data/tbm10d_procv4

change the Vsh setting from 8 to 10 in all dacParameter*.dat files.
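Editing every dacParameter*.dat file by hand is error-prone, so a small script may help. This is a sketch under an assumption: each DAC line is taken to have the whitespace-separated form `<number> <name> <value>` (check this against the actual files), and the DAC name is matched case-insensitively. `set_dac` and `set_dac_in_dir` are hypothetical helpers.

```python
import glob
import os


def set_dac(text, name, value):
    """Return (new_text, n_changed) with DAC `name` set to `value`.

    Assumes lines of the form '<number> <name> <value>'; only the changed
    lines are re-spaced, all other lines are kept verbatim.
    """
    out, changed = [], 0
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == 3 and fields[1].lower() == name.lower():
            line = "%s %s %s" % (fields[0], fields[1], value)
            changed += 1
        out.append(line)
    return "\n".join(out) + ("\n" if text.endswith("\n") else ""), changed


def set_dac_in_dir(config_dir, name="Vsh", value=10):
    """Apply set_dac to every dacParameter*.dat file in config_dir; return changed files."""
    touched = []
    for path in sorted(glob.glob(os.path.join(config_dir, "dacParameter*.dat"))):
        with open(path) as f:
            new_text, n = set_dac(f.read(), name, value)
        if n:
            with open(path, "w") as f:
                f.write(new_text)
            touched.append(path)
    return touched
```

For the change above this would be called as `set_dac_in_dir("pxar/data/tbm10d_procv4", "Vsh", 10)`; the returned list shows which files were actually modified.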

I hope these are the right locations.

D. |

|

298

|

Fri Aug 28 11:53:23 2020 |

Andrey Starodumov | PhQualification | M1555 |

There is no new PH optimisation for this module?!

To be checked! |

|

300

|

Fri Aug 28 14:07:24 2020 |

Andrey Starodumov | PhQualification | M1539 |

There is no new PH optimisation for this module?!

To be checked! |

|

302

|

Sat Sep 12 23:45:56 2020 |

Dinko Ferencek | POS | POS configuration files created |

pXar parameter files were converted to POS configuration files by executing the following commands on the lab PC at PSI:

Step 1 (needs to be done only once, should be repeated only if there are changes in modules and/or their locations)

cd /home/l_tester/L1_SW/MoReWeb/scripts/

python queryModuleLocation.py -o module_locations.txt -f

Next, check that /home/l_tester/L1_DATA/POS_files/Folder_links/ is empty; if it is not, delete any folder links it contains. Then run the following command:

python prepareDataForPOS.py -i module_locations.txt -p /home/l_tester/L1_DATA/ -m /home/l_tester/L1_DATA/WebOutput/MoReWeb/FinalResults/REV001/R001/ -l /home/l_tester/L1_DATA/POS_files/Folder_links/
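The emptiness check on Folder_links/ before running prepareDataForPOS.py can be automated. The following hypothetical helper (not part of MoReWeb) removes only symbolic links and refuses to delete anything else, so real data directories cannot be removed by mistake.

```python
import os


def clear_folder_links(links_dir):
    """Remove all symbolic links in links_dir and return their names.

    Raises RuntimeError, before deleting anything, if an entry is not a symlink.
    """
    names = sorted(os.listdir(links_dir))
    for name in names:
        path = os.path.join(links_dir, name)
        if not os.path.islink(path):
            raise RuntimeError("not a link, refusing to delete: %s" % path)
    for name in names:
        os.unlink(os.path.join(links_dir, name))  # removes the link, not its target
    return names
```

Usage: `clear_folder_links("/home/l_tester/L1_DATA/POS_files/Folder_links/")` before the command above.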

Step 2

cd /home/l_tester/L1_SW/pxar2POS/

for i in `cat /home/l_tester/L1_SW/MoReWeb/scripts/module_locations.txt | awk '{print $1}'`; do ./pxar2POS.py -m $i -T 50 -o /home/l_tester/L1_DATA/POS_files/Configuration_files/ -s /home/l_tester/L1_DATA/POS_files/Folder_links/ -p /home/l_tester/L1_SW/MoReWeb/scripts/module_locations.txt; done
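For reference, the Step 2 loop can also be expressed in Python. This sketch only builds the argument lists; like the shell loop, it takes the module name from the first whitespace-separated field of each line of module_locations.txt and skips empty lines. `pxar2pos_commands` is a hypothetical name.

```python
def pxar2pos_commands(locations_file, pos_dir="/home/l_tester/L1_DATA/POS_files"):
    """Return one ./pxar2POS.py argument list per module in locations_file."""
    cmds = []
    with open(locations_file) as f:
        for line in f:
            fields = line.split()
            if not fields:
                continue  # the shell loop also ignores empty lines
            cmds.append(["./pxar2POS.py", "-m", fields[0], "-T", "50",
                         "-o", pos_dir + "/Configuration_files/",
                         "-s", pos_dir + "/Folder_links/",
                         "-p", locations_file])
    return cmds
```

Each list can then be run with `subprocess.run(cmd, check=True, cwd="/home/l_tester/L1_SW/pxar2POS")`, which, unlike the shell loop, stops at the first module whose conversion fails.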

The POS configuration files are located in /home/l_tester/L1_DATA/POS_files/Configuration_files/ |

|

305

|

Fri Nov 6 07:28:42 2020 |

danek kotlinski | POS | POS configuration files created |

| Dinko Ferencek wrote: | pXar parameter files were converted to POS configuration files by executing the following commands on the lab PC at PSI

cd /home/l_tester/L1_SW/MoReWeb/scripts/

python queryModuleLocation.py -o module_locations.txt -f

python prepareDataForPOS.py -i module_locations.txt -p /home/l_tester/L1_DATA/ -m /home/l_tester/L1_DATA/WebOutput/MoReWeb/FinalResults/REV001/R001/ -l /home/l_tester/L1_DATA/POS_files/Folder_links/

cd /home/l_tester/L1_SW/pxar2POS/

for i in `cat /home/l_tester/L1_SW/MoReWeb/scripts/module_locations.txt | awk '{print $1}'`; do ./pxar2POS.py -m $i -T 50 -o /home/l_tester/L1_DATA/POS_files/Configuration_files/ -s /home/l_tester/L1_DATA/POS_files/Folder_links/ -p /home/l_tester/L1_SW/MoReWeb/scripts/module_locations.txt; done

The POS configuration files are located in /home/l_tester/L1_DATA/POS_files/Configuration_files/ |

Dinko

I have finally looked more closely at the files you have generated. They seem fine except for 2 points:

1) Some TBM settings (e.g. pkam related) differ from P5 values.

This is not a problem since we will have to adjust them anyway.

2) There is one DAC setting missing.

This is DAC number 13, between VcThr and PHOffset.

This is the tricky one because it has a different name in PXAR and P5 setup files.

DAC 13: PXAR-name = "vcolorbias" P5-name="VIbias_bus"

its value is fixed to 120.

Can you please insert it.

D. |

|

306

|

Tue Nov 10 00:50:47 2020 |

Dinko Ferencek | POS | POS configuration files created |

| danek kotlinski wrote: |

| Dinko Ferencek wrote: | pXar parameter files were converted to POS configuration files by executing the following commands on the lab PC at PSI

cd /home/l_tester/L1_SW/MoReWeb/scripts/

python queryModuleLocation.py -o module_locations.txt -f

python prepareDataForPOS.py -i module_locations.txt -p /home/l_tester/L1_DATA/ -m /home/l_tester/L1_DATA/WebOutput/MoReWeb/FinalResults/REV001/R001/ -l /home/l_tester/L1_DATA/POS_files/Folder_links/

cd /home/l_tester/L1_SW/pxar2POS/

for i in `cat /home/l_tester/L1_SW/MoReWeb/scripts/module_locations.txt | awk '{print $1}'`; do ./pxar2POS.py -m $i -T 50 -o /home/l_tester/L1_DATA/POS_files/Configuration_files/ -s /home/l_tester/L1_DATA/POS_files/Folder_links/ -p /home/l_tester/L1_SW/MoReWeb/scripts/module_locations.txt; done

The POS configuration files are located in /home/l_tester/L1_DATA/POS_files/Configuration_files/ |

Dinko

I have finally looked more closely at the files you have generated. They seem fine except for 2 points:

1) Some TBM settings (e.g. pkam related) differ from P5 values.

This is not a problem since we will have to adjust them anyway.

2) There is one DAC setting missing.

This is DAC number 13, between VcThr and PHOffset.

This is the tricky one because it has a different name in PXAR and P5 setup files.

DAC 13: PXAR-name = "vcolorbias" P5-name="VIbias_bus"

its value is fixed to 120.

Can you please insert it.

D. |

Hi Danek,

I think I implemented everything that was missing. The full list of code updates is here.
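Danek's request about DAC 13 can be illustrated as follows. This is a hypothetical sketch, not the pxar2POS change that was made: it assumes the POS DAC settings of a ROC are held as an ordered list of (name, value) pairs and inserts VIbias_bus = 120 right after VcThr if it is missing. Only the DAC names and the value come from this thread.

```python
# Name mapping and fixed value quoted in Danek's message (DAC 13).
PXAR_TO_POS = {"vcolorbias": "VIbias_bus"}


def add_fixed_dac(dacs, name="VIbias_bus", value=120, after="VcThr"):
    """Return a new list of (name, value) pairs with `name` inserted after `after`.

    If `name` is already present, the list is returned unchanged (as a copy).
    """
    names = [n for n, _ in dacs]
    if name in names:
        return list(dacs)
    i = names.index(after) + 1  # raises ValueError if `after` is missing
    return list(dacs[:i]) + [(name, value)] + list(dacs[i:])
```

Making the insertion idempotent means the conversion can be rerun on files that already contain the setting.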

Best,

Dinko |

|

308

|

Tue Jan 19 15:12:12 2021 |

Dinko Ferencek | POS | POS configuration files created |

M1560 in position bpi_sec1_lyr1_ldr1_mod3 was replaced by M1613.

The POS configuration files were re-generated and placed in /home/l_tester/L1_DATA/POS_files/Configuration_files/.

The old version of the files was moved to /home/l_tester/L1_DATA/POS_files/Configuration_files_20201110/. |

|

309

|

Mon Jan 25 13:03:22 2021 |

Dinko Ferencek | POS | POS configuration files created |

The output POS configuration files had '_Bpix_' instead of '_BPix_' in their names. The culprit was identified as the C3 cell in the 'POS' sheet of the Module_bookkeeping-L1_2020 Google spreadsheet, which contained 'Bpix' instead of 'BPix' and was messing up the file names. This has now been fixed and the configuration files regenerated.
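After regenerating, a quick check for any remaining wrongly capitalised file names could look like this; `wrong_case_bpix` is a hypothetical helper that lists all files below a directory whose name contains '_Bpix_'.

```python
import os


def wrong_case_bpix(config_dir):
    """Return sorted paths of files under config_dir whose name contains '_Bpix_'."""
    bad = []
    for root, _dirs, files in os.walk(config_dir):
        for name in files:
            if "_Bpix_" in name:
                bad.append(os.path.join(root, name))
    return sorted(bad)
```

An empty result for /home/l_tester/L1_DATA/POS_files/Configuration_files/ means no file still uses the old spelling.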

The WBC values were also changed to 164 for all modules using the following commands:

cd /home/l_tester/L1_SW/pxar2POS/

./pxar2POS.py --do "dac:set:WBC:164" -o /home/l_tester/L1_DATA/POS_files/Configuration_files/ -i 1

This created a new set of configuration files with ID 2 in /home/l_tester/L1_DATA/POS_files/Configuration_files/.

The WBC values stored in ID 1 were taken from the pXar dacParameters*_C*.dat files and the above procedure makes a copy of the ID 1 files and overwrites the WBC values. |

|

4

|

Tue Aug 6 16:06:45 2019 |

Matej Roguljic | Other | Vsh and ctrlreg for v4 chips |

| The recommended default value for Vsh is 8, but the current version of pXar has it as 30. One should remember this when making new configuration folders from mkConfig. The recommended value of ctrlreg is 17. |

|

8

|

Tue Sep 10 15:18:20 2019 |

Matej Roguljic | Other | Modules 1504, 1505, 1520 irradiation report |

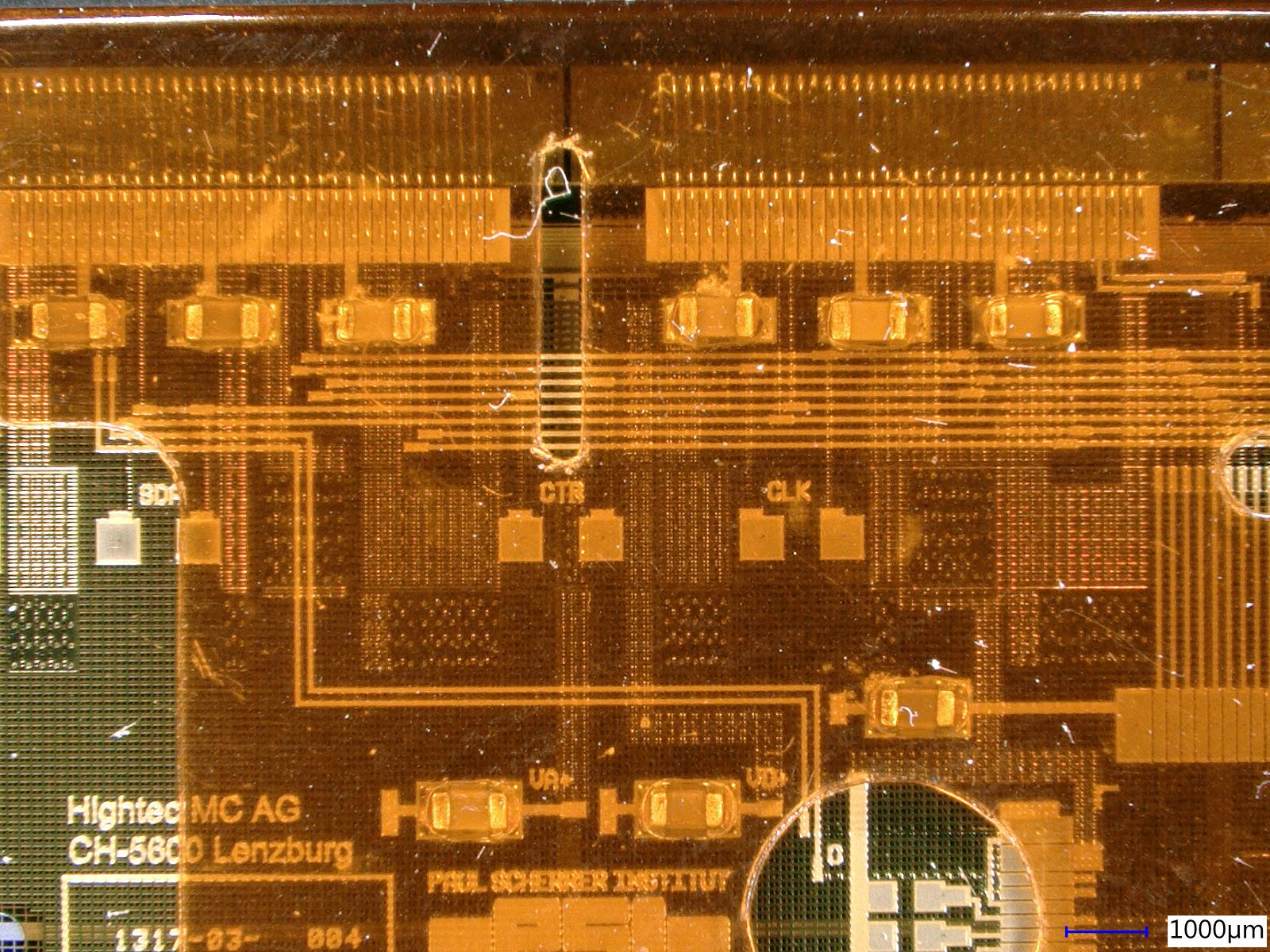

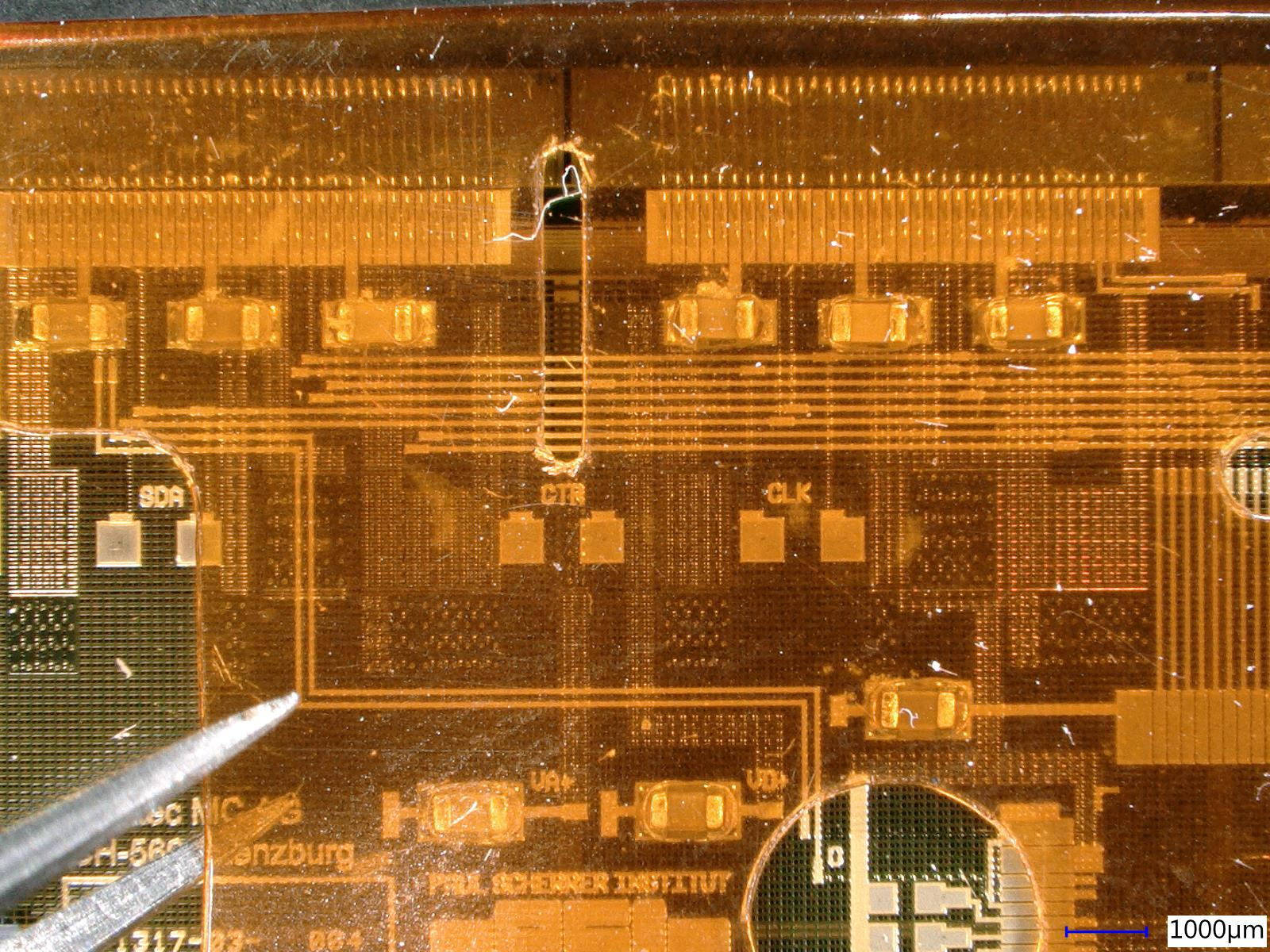

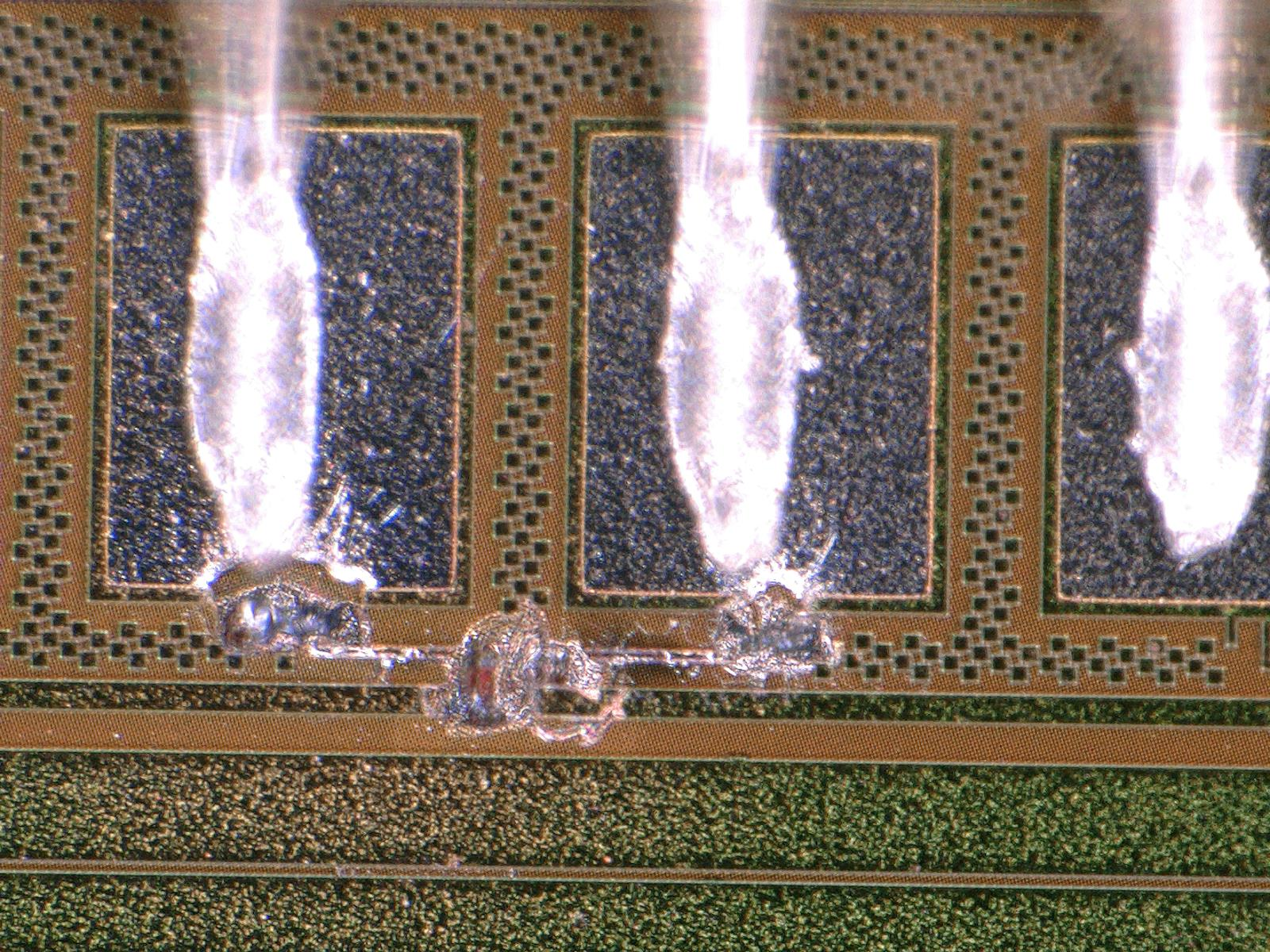

| Modules 1504, 1505 and 1520 were taken to Zagreb for irradiation on 13.08.19. The goal was to check the v4 behavior after irradiation. They were irradiated to 1.2 MGy and returned to PSI on 9th of September. Upon testing them at PSI, they all had issues. ROCs on M1504 and M1520 were not programmable at all; changing Vana had no effect. Vana could be set on M1505 while targeting Iana = 28 mA/ROC; however, no timing could be found for it and no working pixel could be found. Andrey and Matej looked at the modules under the microscope and saw "greenish" deposits of unknown origin on the HDI metal pads. There was also a bit of liquid near the HDI ID on M1520, but not on the others. The residue could be shorting some pads, causing issues on the module. It is still unclear whether the residue comes from the HDI or the cap, or was introduced during irradiation by something in Zagreb. |

|

10

|

Fri Sep 13 15:06:51 2019 |

Andrey Starodumov | Other | Modules 1504, 1505, 1520 irradiation report |

It was discovered that these modules were stored in Zagreb in the climatic lab, where T was about +22C. The modules were transported from the Co60 irradiation facility to the lab in open air with T>30C and RH>70%. Water from the air under the cap condensed on the surface of the HDIs and dissolved residues (from soldering, passivation, etc.), which afterwards remained liquid or crystallised.

The irradiation itself does not cause any damage. This is also confirmed by the fact that after two previous irradiations of modules and HDIs in Jan and Jul 2019, the samples remained in good shape without any residues on the HDI surfaces. These samples were kept in an office where T and RH were similar to the outside, and not in the climatic lab.

We consider the case understood and closed. |

|

12

|

Thu Sep 19 00:52:24 2019 |

Dinko Ferencek | Other | Modules 1504, 1505, 1520 irradiation report |

| Andrey Starodumov wrote: | It was discovered that these modules were stored in Zagreb in the climatic lab, where T was about +22C. The modules were transported from the Co60 irradiation facility to the lab in open air with T>30C and RH>70%. Water from the air under the cap condensed on the surface of the HDIs and dissolved residues (from soldering, passivation, etc.), which afterwards remained liquid or crystallised.

The irradiation itself does not cause any damage. This is also confirmed by the fact that after two previous irradiations of modules and HDIs in Jan and Jul 2019, the samples remained in good shape without any residues on the HDI surfaces. These samples were kept in an office where T and RH were similar to the outside, and not in the climatic lab.

We consider the case understood and closed. |

Just to clarify: the modules were not transported from the Co60 irradiation facility to the lab in open air but inside a closed Petri dish. In open air there would have been no risk of water condensation, because the air surrounding the modules would have quickly mixed with the air-conditioned lab air. Here the problem arose because the modules were brought into the lab together with a pocket of outside air. A closed Petri dish is not airtight, but it significantly reduces mixing of the air inside it with the surrounding lab air, making the mixing slower than the rate at which the Petri dish and the modules inside it cooled down once brought into the lab. This could have led to water condensation if the pocket of air trapped inside the Petri dish was warm and humid and had a dew point above the lab air temperature.

The solution should be relatively simple: open the Petri dish and uncover the modules before bringing them into the lab. That way the air exchange is faster and the risk of condensation is essentially gone, because a warm module is quickly surrounded by lab air, which will not condense on a warmer surface.

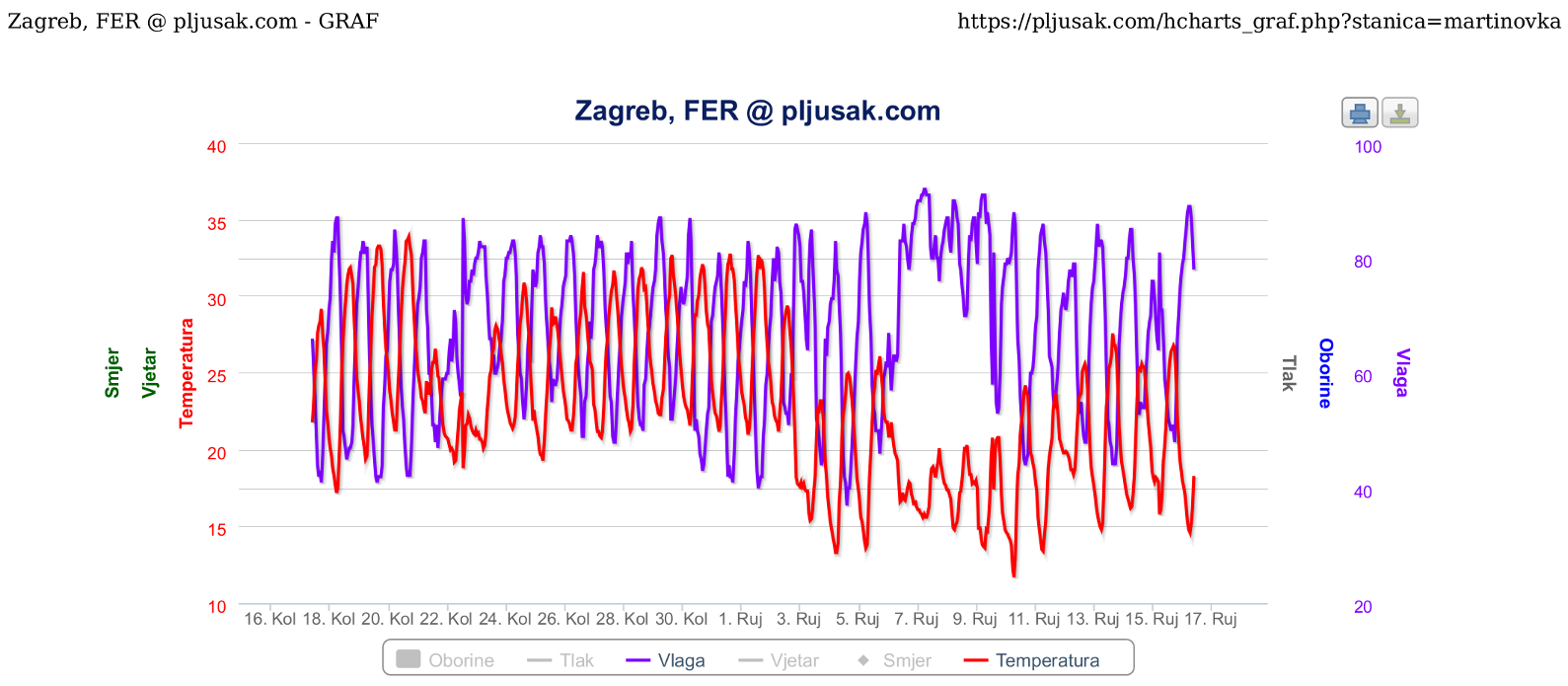

However, there is an additional twist in this particular case. On Aug. 22, when the modules were brought into the lab, there was a thunderstorm in Zagreb in the early afternoon (https://www.zagreb.info/aktualno/zagreb-je-zahvatila-oluja-munje-i-jaka-kisa-nad-vecim-dijelom-grada/227156) with a temperature of around 21.5 C and RH of around 75% at the time the modules were transported (around 15:20), and the whole day was relatively fresh and humid. The outside air on that day would definitely not have led to water condensation in the lab. Before being brought into the lab, however, the modules had been sitting in a room in the building that houses the Co60 irradiation facility, so the air inside the Petri dish was likely similar to the air in that room (the modules had been there for a while, long enough for the air temperature and humidity to equalize), and it had little time to mix with the outside air during the transport from one building to the other. Unfortunately, there are no measurements of the air temperature and humidity in that room. It is worth mentioning, though, that the previous day, Aug. 21, was not very hot or humid, with a midday temperature of around 26 C and RH of around 50%. It is therefore likely that the air in that room, and consequently inside the Petri dish, was not very hot and humid, which makes the hypothesis of water condensation in the lab less likely, if not improbable.
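Whether condensation was possible comes down to comparing the dew point of the trapped air with the temperature the modules cooled down to, i.e. the lab temperature of about +22C quoted above. A rough check as a minimal sketch: it uses the Magnus approximation with the common coefficients a = 17.62 and b = 243.12 C (an assumption, not something used in the original analysis), and the function names dew_point and condenses are hypothetical.

```shell
#!/bin/sh
# Magnus approximation for the dew point over water (coefficients are an
# assumption; adequate at room temperature):
#   g  = ln(RH/100) + a*T/(b+T)
#   Td = b*g/(a-g)

# dew_point T_C RH_percent -> prints the dew point in C with one decimal
dew_point() {
    awk -v t="$1" -v rh="$2" 'BEGIN {
        a = 17.62; b = 243.12
        g = log(rh / 100) + a * t / (b + t)
        printf "%.1f\n", b * g / (a - g)
    }'
}

# condenses T_C RH_percent T_surface_C
# Exit status 0 if air at T_C / RH_percent would condense on a surface at
# T_surface_C, i.e. if its dew point is at or above the surface temperature.
condenses() {
    awk -v t="$1" -v rh="$2" -v ts="$3" 'BEGIN {
        a = 17.62; b = 243.12
        g = log(rh / 100) + a * t / (b + t)
        exit !(b * g / (a - g) >= ts)
    }'
}
```

With these inputs, dew_point 30 70 gives about 23.9 C, above the ~22 C lab temperature, so air at T>30C and RH>70% could indeed condense on the cooling modules. dew_point 21.5 75 gives about 16.9 C and dew_point 26 50 about 14.8 C, both well below 22 C, which is consistent with the argument above.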

Either way, more careful handling of modules will be needed. |

| Attachment 1: ZG-FER_temp_hum_2019.08.16.-09.16.png

|

|

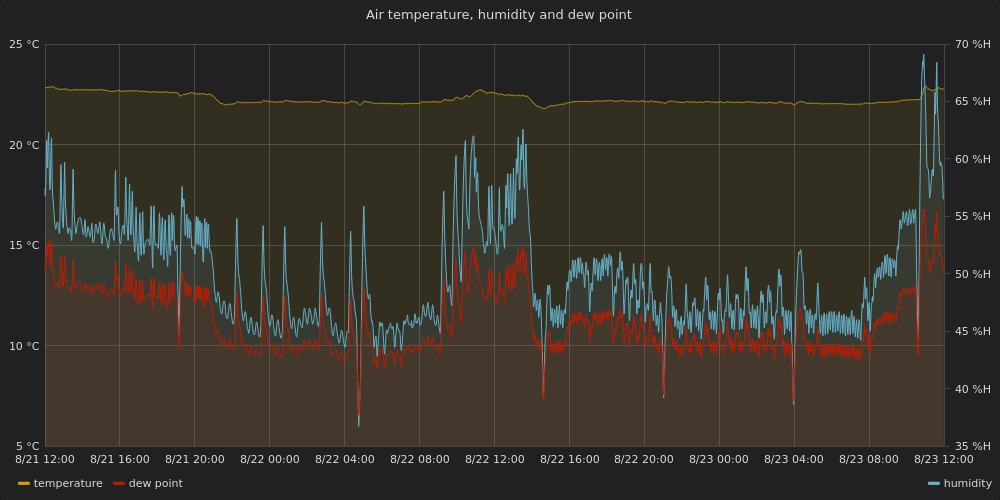

| Attachment 2: pixellab-main-room-1.png

|

|

|

99

|

Wed Mar 11 15:34:57 2020 |

Urs Langenegger | Other | M1586: issues with MOLEX? |

Module M1586 had passed the full qualification on 2020/02/27. At that time I had to re-insert the cable in the Molex connector for it to become programmable.

On 2020/03/09, I tried to re-test M1586, but it was not programmable. Visual inspection revealed nothing to me. I re-inserted the cable once again, but this time it did not help.

Maybe one should try re-inserting the cable again.

Maybe these issues indicate that the MOLEX connector on the module is flaky. |

|

100

|

Wed Mar 11 16:48:10 2020 |

Andrey Starodumov | Other | M1586: issues with MOLEX? |


Most likely the cable contacts caused this behavior, since they look damaged.

After the cable was changed, the module does not show any more problems. |

|

155

|

Sun Mar 29 18:14:59 2020 |

danek kotlinski | Other | Modules 1544 & 1563 in gelpack |

The 2 bad modules, M1544 and M1563, have been moved to gelpacks. |

|

175

|

Thu Apr 2 17:10:08 2020 |

Andrey Starodumov | Other | Cap glued to M1618 |

M1618 was tested (FT) on March 23 without a protection cap.

I have no idea how this happened...

Today the cap was glued on, and the module passed the Reception test again with grade A.

I think we do not need to repeat FT for this module.

I put it in a tray with good modules. |

|

210

|

Tue Apr 14 16:54:31 2020 |

Andrey Starodumov | Other | M1633 cable disconnected |

We do not have any more module holders.

M1633 was in the "Module doctor" tray.

I took the module off its holder and put it in a gel-pak. |

|

237

|

Wed Apr 29 08:48:06 2020 |

Urs Langenegger | Other | M1539 |

M1539 showed no readout. I tried the following, all without success:

- reconnecting the cable to the adapter multiple times

- connecting to the adapter in the blue box

- reconnecting the cable to the MOLEX on the module |

|

258

|

Mon May 11 14:14:05 2020 |

danek kotlinski | Other | M1606 |

On Friday I tested M1606 at room temperature in the red cold box.

Previously it was reported that trimming does not work for ROC2.

In this test trimming was fine; only 11 pixels failed it.

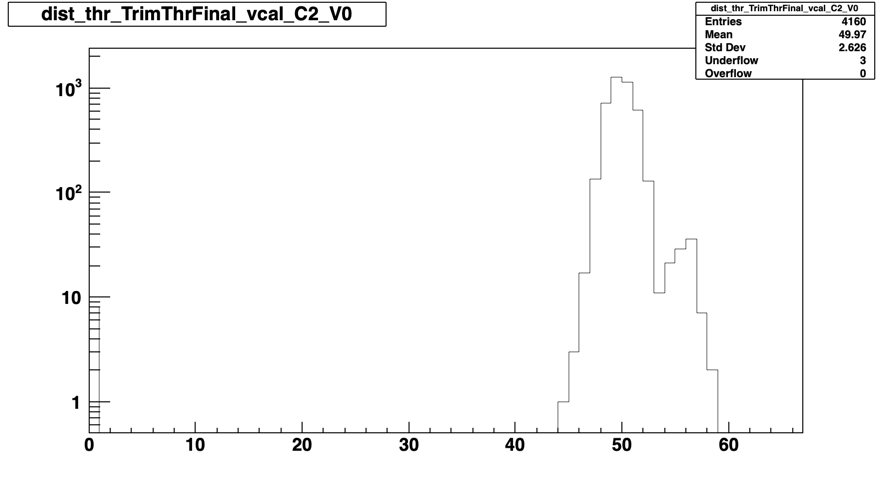

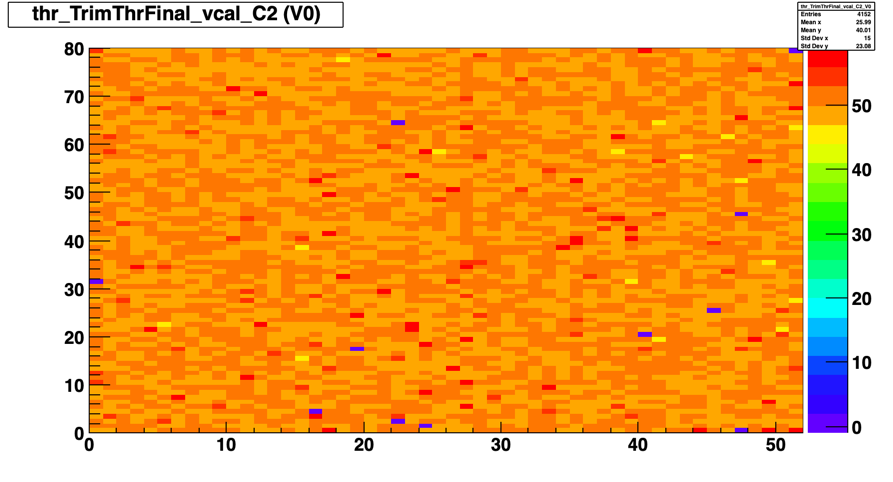

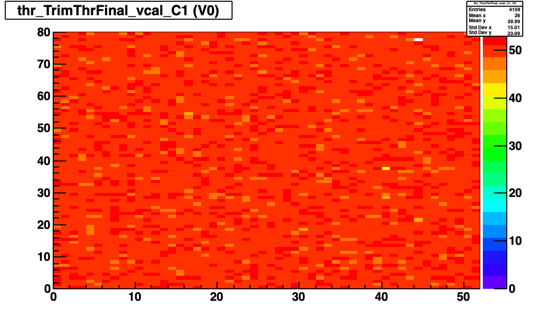

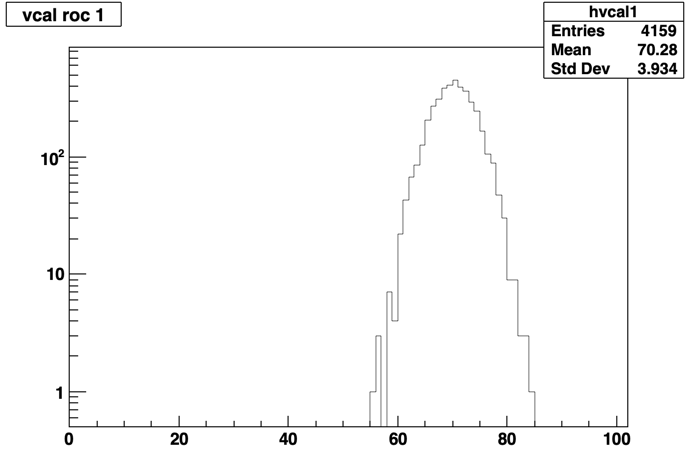

See the attached 1D and 2D histograms. There is small side peak at about vcal=56 with ~100 pixels.

But this should not be a too big problem?

Also the Pulse height map looks good and the reconstructed pulse height at vcal=70

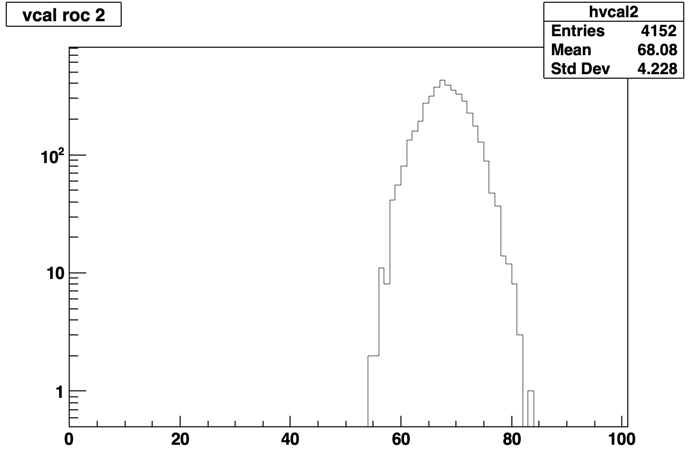

gives vcal=68.1 with rms=4.2, see the attached plot.

So I conclude that this module is fine. |

| Attachment 1: m1606_roc2_thr_1d.png

|

|

| Attachment 2: m1606_roc2_thr_2d.png

|

|

| Attachment 3: m1606_roc2_ph70.png

|

|

|

259

|

Mon May 11 14:40:16 2020 |

danek kotlinski | Other | M1582 |

On Friday I tested module M1582 at room temperature in the blue box.

The MoreWeb report says that this module has trimming problems with 190 pixels in ROC1.

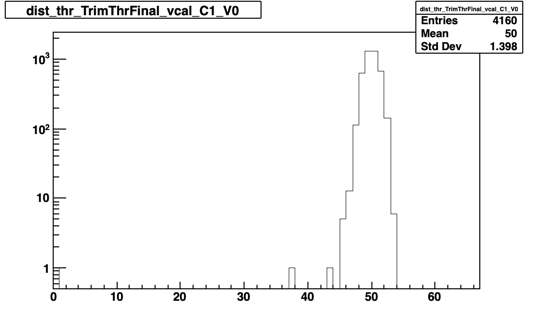

I see no problem in ROC1. The average threshold is 50 with rms=1.37. Only 1 pixel is in the 0 bin.

See the attached 1d and 2d plots.

Also the PH looks good. The vcal 70 PH map is reconstructed at vcal 70.3 with rms of 3.9.

5159 pixels have valid gain calibrations.

I conclude that this module is fine.

Maybe it is again a DTB problem, as reported by Andrey.

D. |

| Attachment 1: m1582_roc1_thr_1d.png

|

|

| Attachment 2: m1582_roc1_thr_2d.png

|

|

| Attachment 3: m1582_roc1_ph70.png

|

|

|

264

|

Wed May 13 17:57:45 2020 |

Andrey Starodumov | Other | L1_DATA backup |

| L1_DATA files are backed up to the LaCie disk |

|

275

|

Tue May 26 23:00:50 2020 |

Dinko Ferencek | Other | Problem with external disk filling up too quickly |

The external hard disk (LaCie) used to back up the L1 replacement data filled up completely after ~70 GB worth of data had been transferred, even though its capacity is 2 TB. The backup consists of copying all .tar files and the WebOutput/ subfolder from /home/l_tester/L1_DATA/ to /media/l_tester/LaCie/L1_DATA/. The corresponding rsync command is

rsync -avPSh --include="M????_*.tar" --include="WebOutput/***" --exclude="*" /home/l_tester/L1_DATA/ /media/l_tester/LaCie/L1_DATA/
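The filter rules in this command are order-dependent and the trailing slash on the source matters, so it can help to wrap the command once and preview it before writing to the external disk. A minimal sketch, assuming a POSIX shell and an rsync recent enough to support the dir/*** pattern; backup_l1 is a hypothetical name, and the rsync options are exactly those of the command above.

```shell
#!/bin/sh
# backup_l1 SRC DST [extra rsync options...]   (hypothetical wrapper)
# Same transfer as the rsync command above. rsync checks filter rules in
# order and the first matching rule decides:
#   --include="M????_*.tar"    names like M1234_xyz.tar (M + 4 chars + _ ... .tar)
#   --include="WebOutput/***"  the WebOutput/ directory and everything in it
#   --exclude="*"              everything else
# A trailing "/" on the source means "copy the contents of SRC"; without it
# rsync copies the SRC directory itself into DST. The wrapper always adds it.
backup_l1() {
    src=${1%/}/
    dst=${2%/}/
    shift 2
    rsync -avPSh "$@" \
        --include="M????_*.tar" \
        --include="WebOutput/***" \
        --exclude="*" \
        "$src" "$dst"
}
```

For example, backup_l1 /home/l_tester/L1_DATA /media/l_tester/LaCie/L1_DATA --dry-run lists what would be transferred without writing anything, which would also show unexpected paths such as a nested WebOutput/WebOutput/.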

It was discovered that /home/l_tester/L1_DATA/WebOutput/ had by mistake been duplicated inside /media/l_tester/LaCie/L1_DATA/WebOutput/. However, this still could not explain the full disk.

The size of the tar files looked fine, but /media/l_tester/LaCie/L1_DATA/WebOutput/ was 1.8 TB in size while /home/l_tester/L1_DATA/WebOutput/ took up only 50 GB, and apart from the above-mentioned duplication there was no other obvious duplication.

It turned out that the file system on the external hard disk had a block size of 512 KB, which is unusually large; it is typically set to 4 KB. In practice this meant that every file (and even every folder), no matter how small, occupied at least 512 KB on the disk. For example, I saw the following

l_tester@pc11366:~$ cd /media/l_tester/LaCie/

l_tester@pc11366:/media/l_tester/LaCie$ du -hs Warranty.pdf

512K Warranty.pdf

l_tester@pc11366:/media/l_tester/LaCie$ du -hs --apparent-size Warranty.pdf

94K Warranty.pdf

In a case like ours, with a lot of subfolders and files, many of them small, a lot of disk space is effectively wasted.
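The effect of the block (cluster) size can be estimated directly from the file sizes, without copying anything. A minimal sketch, assuming GNU find and a deliberately simplified allocation model (each file rounded up to whole clusters, each directory taking one cluster); estimate_usage is a hypothetical helper, and its output is an estimate, not what du would report on the real disk.

```shell
#!/bin/sh
# estimate_usage DIR CLUSTER_BYTES   (hypothetical helper)
# Prints "<apparent bytes> <estimated allocated bytes>" for the tree under
# DIR if it were stored with clusters of CLUSTER_BYTES.
# Simplified model, not the exact exFAT layout: each file is rounded up to
# whole clusters (empty files take none) and each directory takes exactly
# one cluster. Requires GNU find (-printf).
estimate_usage() {
    dir=$1
    cluster=$2
    # Sum of the file sizes in bytes.
    apparent=$(find "$dir" -type f -printf '%s\n' |
        awk '{ s += $1 } END { printf "%.0f\n", s }')
    # Clusters needed by the files: ceil(size / cluster) for each file.
    clusters=$(find "$dir" -type f -printf '%s\n' |
        awk -v c="$cluster" '{ n += int(($1 + c - 1) / c) } END { printf "%.0f\n", n }')
    # One cluster per directory, DIR itself included.
    dirs=$(find "$dir" -type d | wc -l)
    echo "$apparent $(( (clusters + dirs) * cluster ))"
}
```

Running it on /home/l_tester/L1_DATA/WebOutput/ once with 524288 (512 KB) and once with 4096 (4 KB) gives a rough idea of how much of the 1.8 TB could be due to the cluster size alone.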

The file system used on the external disk was exFAT. According to this page, the default block size (the page calls it the cluster size) for the exFAT file system scales with the drive size, and this is the likely reason why a block size of 512 KB was used (although 512 KB is still larger than the largest default block size). The main partition on the external disk was finally reformatted as follows

sudo mkfs.exfat -n LaCie -s 8 /dev/sdd2

which set the block size to 4 KB (-s sets the number of sectors per cluster; with 512-byte sectors, 8 × 512 B = 4 KB). |

|

303

|

Fri Sep 18 15:45:21 2020 |

Andrey Starodumov | Other | M2211 and M2122 |

I made a mistake and used ID M2211 instead of M2122 in the .ini file.

Hence we now have no entry for M2122 but two entries for M2211: one from Sep 18 and another one, called "old", from Sep 17.

Test results of Sep 17 are for M2211.

Test results of Sep 18 are for M2122.

To be corrected later |

|

307

|

Mon Jan 18 13:53:44 2021 |

Andrey Starodumov | Other | DTB tests |

M2217

---> DTB 154 (one of the red cold box setups):

- flat cable (with HV):

>adctest

clk low = -231.0 mV high= 212.0 mV amplitude = 443.0 mVpp (differential)

ctr low = -246.0 mV high= 235.0 mV amplitude = 481.0 mVpp (differential)

sda low = -250.0 mV high= 223.0 mV amplitude = 473.0 mVpp (differential)

rda low = -65.0 mV high= 48.0 mV amplitude = 113.0 mVpp (differential)

sdata1 low = -171.0 mV high= 155.0 mV amplitude = 326.0 mVpp (differential)

sdata2 low = -173.0 mV high= 149.0 mV amplitude = 322.0 mVpp (differential)
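Each adctest line gives the low and high levels and the peak-to-peak amplitude of one differential signal; in all lines shown here the amplitude equals high − low (e.g. clk: 212.0 − (−231.0) = 443.0 mV). To compare the flat-cable and twisted-pair printouts line by line, they can be tabulated with a short awk filter. A minimal sketch, assuming the exact line format shown above; adc_table is a hypothetical helper, not part of the test software.

```shell
#!/bin/sh
# adc_table   (hypothetical helper): reads adctest output on stdin and prints
#   <signal> <low mV> <high mV> <amplitude mVpp> <check>
# one line per signal, where <check> is "ok" if amplitude = high - low
# (within 0.05 mV) and "MISMATCH" otherwise.
# Assumes the whitespace-separated line format shown above, e.g.
#   clk low = -231.0 mV high= 212.0 mV amplitude = 443.0 mVpp (differential)
adc_table() {
    awk '$2 == "low" && $9 == "amplitude" {
        low = $4; high = $7; amp = $11
        d = high - low - amp
        st = (d < 0.05 && d > -0.05) ? "ok" : "MISMATCH"
        printf "%-7s %7.1f %7.1f %7.1f %s\n", $1, low, high, amp, st
    }'
}
```

Feeding the flat-cable and twisted-pair printouts through it separately gives two aligned tables that can be compared signal by signal.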

- twisted-pair cable:

several ERRORS during VthrCompCalDel and PixelAlive: ERROR: <datapipe.cc/CheckEventID:L486> Channel 2 Event ID mismatch: local ID (119) != TBM ID (120)

>adctest

clk low = -196.0 mV high= 177.0 mV amplitude = 373.0 mVpp (differential)

ctr low = -245.0 mV high= 236.0 mV amplitude = 481.0 mVpp (differential)